Table of Contents

OpenMetadata vs DataHub: A Practical Comparison for Data Teams

OpenMetadata and DataHub are leading open-source metadata platforms, but they serve different organizational needs. This blog compares their architectures, governance models, and operational trade-offs beyond surface-level features. It explains when governance-first teams benefit from OpenMetadata and when engineering-led platforms favor DataHub. The guide also highlights how enterprise metadata platforms like OvalEdge support teams as metadata needs scale.

As data teams scale, metadata stops being a background concern and becomes a daily blocker. Tables multiply, pipelines change frequently, dashboards depend on assets no one fully understands, and trust in data starts to erode.

At that point, spreadsheets and tribal knowledge fail, and teams turn to open-source metadata platforms to regain visibility and control.

This is where the openmetadata vs datahub decision comes into focus. Both tools promise better data discovery, lineage, and governance, but they take fundamentally different paths to get there. One emphasizes unified metadata, usability, and governance workflows. The other is built for real-time metadata automation and large-scale, event-driven data platforms.

The urgency is real.

Gartner Insights on Data Quality estimates that poor data quality costs organizations an average of 15% of revenue annually, and metadata gaps are a major contributor to that loss.

As data ecosystems become more distributed, choosing the wrong metadata foundation can slow adoption, increase operational overhead, and weaken governance outcomes.

In this blog, we will walk through how OpenMetadata and DataHub differ in architecture, features, and operational trade-offs. You will learn which platform fits governance-led teams, which suits platform engineering organizations, and how to make a confident, future-ready choice.

What is OpenMetadata?

OpenMetadata is an open-source metadata platform designed to help data teams build a single, reliable source of truth for their data assets. It brings together technical metadata, business context, lineage, and governance in one place, making data easier to discover, understand, and trust as organizations scale.

|

Market Recognition: OvalEdge was recognized in the SPARK Matrix™ for Data Governance Solutions and listed as an overall leader in data catalogs and metadata management, illustrating its industry credibility. |

Overview of OpenMetadata and its design philosophy

OpenMetadata is built on the idea that metadata should be unified, relational, and accessible beyond engineering teams. Instead of treating datasets and dashboards as isolated objects, it models metadata as connected entities with clearly defined relationships. This approach makes data flows and dependencies easier to understand across the organization.

Its design philosophy centers on:

-

A centralized, relational metadata model that connects datasets, dashboards, pipelines, and glossary terms

-

Strong emphasis on usability for analysts, data scientists, and business users

-

Governance and stewardship are built into the core platform rather than added later

Key features and capabilities of OpenMetadata

OpenMetadata focuses on making metadata actionable in day-to-day workflows, not just stored for reference. It supports:

-

Centralized data discovery across tables, dashboards, and pipelines

-

End-to-end lineage visibility for analytics and data engineering workflows

-

Built-in ownership, certification, and documentation workflows

-

Business glossary and taxonomy management to align technical and business definitions

-

Collaboration features such as comments, tasks, and announcements

Metadata model, governance support, and extensibility

At its core, OpenMetadata uses a relational metadata model with clearly defined entities and relationships, which simplifies governance at scale. Governance features are designed to support accountability and trust without adding unnecessary complexity.

Key strengths include:

-

Certification badges, sensitivity tags, and ownership rules for consistent governance

-

Workflow-driven stewardship to ensure accountability over time

-

Extensibility through APIs and custom metadata fields

-

Easier customization without heavy platform engineering effort

|

Also read: Metadata Management Best Practices: A Complete 2026 Guide |

What is DataHub?

DataHub is an open-source metadata platform designed for organizations that need metadata to stay continuously in sync with fast-changing data systems. It treats metadata as a living system, updating it in near real time as pipelines, schemas, and data assets evolve.

Overview of DataHub and its architectural approach

DataHub is built around an event-driven, platform-centric architecture that prioritizes automation and scale. Instead of relying on scheduled scans or batch updates, it captures metadata changes as events and propagates them across the platform. This makes it a strong fit for modern data stacks with frequent deployments and high change velocity.

At a high level, DataHub is designed for:

-

Event-driven metadata ingestion rather than periodic batch updates

-

Large-scale, distributed data environments

-

Platform and infrastructure teams managing metadata centrally

-

Continuous alignment between metadata and production systems

Key features and capabilities of DataHub

DataHub focuses heavily on automated metadata ingestion and operational visibility. It continuously collects metadata from data warehouses, transformation tools, orchestration systems, and BI platforms to keep the catalog up to date.

Core capabilities include:

-

Automated ingestion of technical, operational, and usage metadata from multiple systems

-

Pipeline and transformation lineage to track how data moves and changes

-

Domain-based ownership models to organize metadata at scale

-

Freshness, usage, and health signals to monitor data reliability

-

Integration with orchestration and observability tools for operational context

These features make DataHub particularly strong in environments where metadata accuracy depends on staying closely aligned with production workflows.

Event-driven metadata model and platform extensibility

DataHub’s metadata model is graph-based and event-driven, allowing relationships between datasets, pipelines, dashboards, and users to be updated as changes occur.

This approach supports advanced dependency tracking and impact analysis across complex data ecosystems.

Key characteristics of this model include:

-

Metadata changes are propagated through events instead of scheduled jobs

-

Near real-time updates for lineage and schema changes

-

Graph-based relationships for upstream and downstream impact analysis

-

High extensibility for custom ingestion pipelines and internal workflows

This design gives teams powerful automation and scalability, but it also assumes comfort with managing streaming infrastructure and ongoing operational complexity.

OpenMetadata vs DataHub: High-level comparison

When teams evaluate OpenMetadata and DataHub, the decision usually comes down to priorities rather than features. Both platforms address metadata challenges, but they are designed for different operating models and ownership structures.

Primary goals and target users

OpenMetadata is commonly chosen by teams that prioritize usability, governance, and broad adoption. It fits well when analytics and governance teams lead metadata initiatives and need clarity, trust, and accountability built into daily workflows.

OpenMetadata is often preferred when:

-

Governance, documentation, and trust signals are critical

-

Analysts and business users need strong discovery and usability

-

Ownership and accountability must be clearly enforced

DataHub is built for engineering-led environments where metadata is treated as part of the data platform and updated continuously alongside production systems.

DataHub is often preferred when:

-

Platform or infrastructure teams own metadata systems

-

Automation and continuous metadata updates are required

-

Metadata must stay tightly aligned with production pipelines

Open-source maturity and community adoption

Both platforms have active open-source communities, but they focus on different areas. OpenMetadata’s community emphasizes governance workflows and ease of adoption, while DataHub’s community focuses on scalability, ingestion frameworks, and event-driven infrastructure.

Enterprise readiness for either platform depends largely on a team’s operational maturity and ability to manage open-source systems at scale.

When teams typically shortlist OpenMetadata or DataHub

Teams usually shortlist OpenMetadata when governance, documentation, and discoverability are immediate needs. DataHub is more often shortlisted when real-time metadata updates and large-scale automation are top priorities, especially in event-driven data platforms.

While both platforms solve metadata challenges, their strengths differ. The table below highlights where each one stands.

|

Dimension |

OpenMetadata |

DataHub |

|

Primary focus |

Governance, usability, and metadata clarity |

Automation, scalability, and platform engineering |

|

Metadata model |

Centralized, relational entity model |

Event-driven, graph-based model |

|

Target users |

Analytics, governance, and data product teams |

Platform engineering and infrastructure teams |

|

Metadata updates |

Periodic and workflow-driven |

Near real-time via events |

|

Governance support |

Built-in ownership, certification, and stewardship |

Extensible, often requires custom workflows |

|

Ease of adoption |

Faster onboarding for mixed technical and business teams |

Steeper learning curve due to infrastructure complexity |

|

Operational complexity |

Lower infrastructure and maintenance overhead |

Higher due to Kafka and event-driven systems |

|

Customization approach |

APIs and configuration-driven extensibility |

Deep extensibility through engineering effort |

|

Best suited for |

Governance-first and analytics-heavy environments |

Large-scale, automation-heavy data platforms |

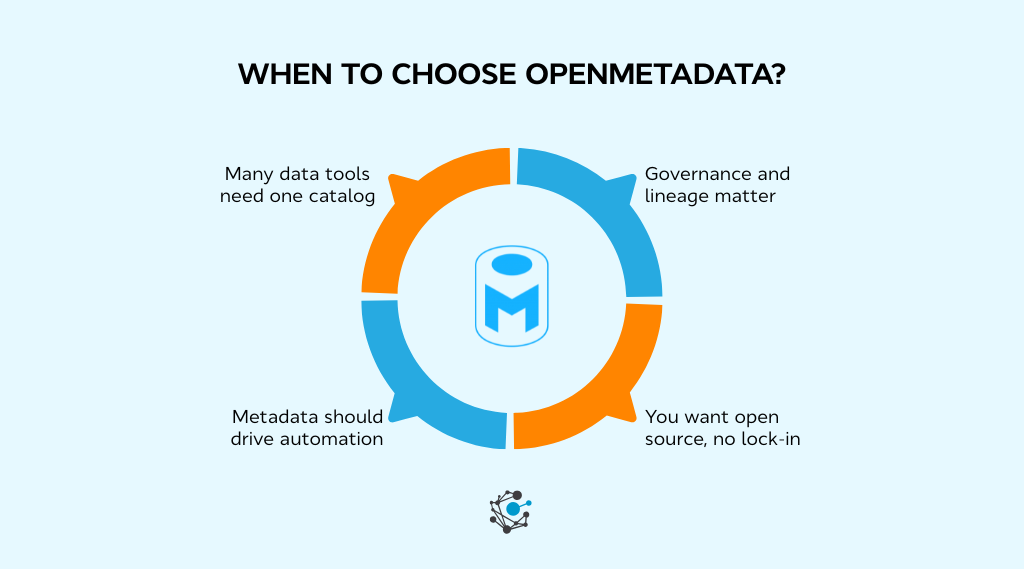

When to choose OpenMetadata?

After comparing OpenMetadata and DataHub at a high level, the next step is understanding when OpenMetadata is the better fit. This usually comes down to how much emphasis your organization places on governance, usability, and ease of adoption.

Preference for a unified and relational metadata model

OpenMetadata works well for teams that want a single, consistent view of metadata across datasets, dashboards, pipelines, and glossary terms. Its relational model makes it easier to understand upstream and downstream relationships without navigating complex graphs.

This approach is especially valuable when:

-

Business users need clear visibility into data ownership and definitions

-

Teams rely heavily on documentation and discoverability

-

Lineage needs to be understandable beyond engineering teams

Simpler deployment and operational setup needs

Organizations with lean platform teams often prefer OpenMetadata because it is easier to deploy and operate. It does not require managing complex event-streaming infrastructure, which reduces setup time and ongoing maintenance.

OpenMetadata is a strong fit when:

-

Faster implementation and onboarding are priorities

-

Infrastructure and operational overhead must be kept low

-

Platform teams are small or already stretched thin

Governance-first approach to metadata management

OpenMetadata is designed with governance and stewardship at its core. Ownership, certification, and accountability are built directly into the platform, making governance part of everyday data workflows rather than a separate process.

It is well-suited for environments where:

-

Data stewardship and accountability are clearly defined

-

Compliance and certification requirements are growing

-

Analytics teams need trust signals embedded into how they use data

This makes OpenMetadata a practical choice for organizations that want governance and usability to lead their metadata strategy.

|

According to a Forrester Total Economic Impact™ (TEI) study commissioned by OvalEdge, organizations that invested in metadata management and data governance achieved a 337% return on investment, with benefits driven by improved data discovery, reduced time spent searching for data, stronger governance controls, and better decision-making efficiency. |

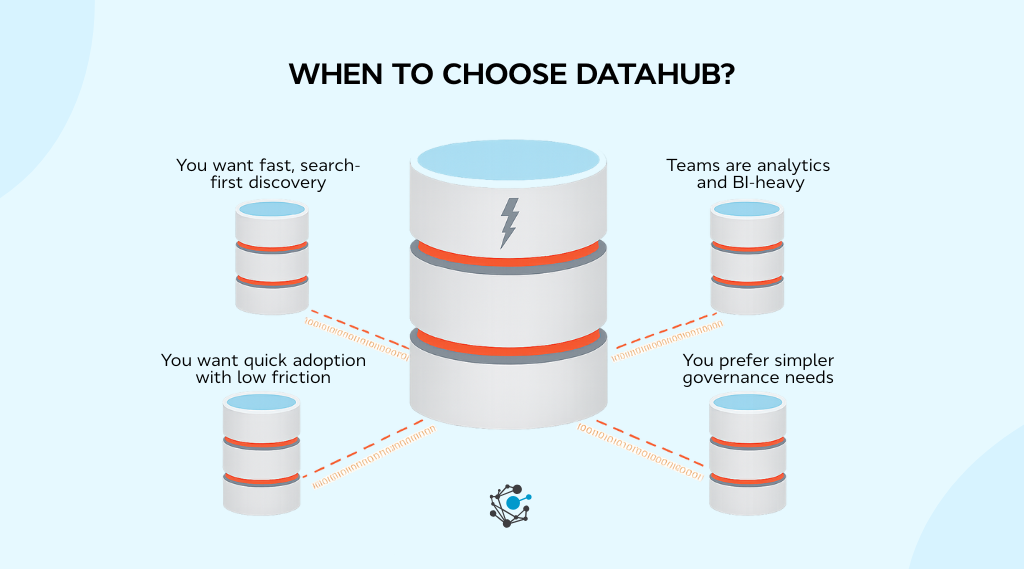

When to choose DataHub?

DataHub is a strong choice when metadata needs to operate at the same pace as your production data systems. It is best suited for organizations that prioritize automation, scale, and real-time alignment between metadata and data workflows.

Requirement for real-time or near-real-time metadata updates

DataHub is designed for environments where schemas, pipelines, and dependencies change frequently. Its event-driven architecture ensures metadata stays up to date as changes occur, reducing lag between production updates and metadata visibility.

DataHub is a good fit when:

-

Pipelines and schemas evolve rapidly

-

Impact analysis must reflect changes immediately

-

Metadata accuracy depends on continuous updates

Alignment with Kafka-based or event-driven ecosystems

Organizations already running event-driven architectures often find DataHub easier to adopt. Since metadata changes are propagated through events, teams with existing streaming infrastructure can integrate DataHub naturally into their platforms.

This works well when:

-

Kafka or similar streaming systems are already in place

-

Teams are comfortable operating event-driven pipelines

-

Metadata automation is a core platform requirement

Platform engineering ownership and scale considerations

DataHub is typically owned by platform or infrastructure teams that manage shared data services. Its flexibility and extensibility support large-scale deployments across many domains, but they also require strong engineering ownership.

DataHub is often chosen when:

-

Dedicated platform teams manage data infrastructure

-

Deep customization and internal tooling are required

-

Metadata must scale across many teams and assets over time

In short, DataHub is best suited for organizations with the engineering maturity to support an event-driven metadata platform in exchange for real-time visibility and high scalability.

How to evaluate OpenMetadata?

Once OpenMetadata is on your shortlist, the real work begins. Evaluating it properly means looking beyond surface features and understanding how well it will support governance, discovery, and long-term adoption as your data ecosystem grows.

Architecture and metadata model comparison

Start by examining how the platform models metadata and relationships. OpenMetadata uses an entity-first, relational model that connects datasets, dashboards, pipelines, and glossary terms in a structured way. This directly impacts how easy it is to understand lineage, ownership, and dependencies.

Key aspects to assess:

-

How clearly upstream and downstream relationships are represented

-

Whether the model supports governance-heavy use cases

-

How easily can business context connect to technical assets

A strong relational model is especially important for organizations that want a single source of truth for metadata.

Comparing lineage capabilities, data discovery, and metadata depth

Lineage and discovery are core to everyday usability. Evaluate how deeply OpenMetadata captures lineage and how intuitive it is for non-engineering users to interpret it.

Focus your evaluation on:

-

Lineage depth, including table-level and column-level visibility

-

BI and dashboard lineage accuracy

-

Search relevance and ranking of trusted assets

-

Metadata completeness indicators, such as owners, descriptions, and usage

The goal is not just lineage availability, but whether teams can confidently act on it.

Integration with analytics, BI, and orchestration tools

OpenMetadata’s value increases significantly when it integrates cleanly with your existing stack. Review how well it connects with your data warehouses, BI tools, and orchestration systems.

Important considerations include:

-

Compatibility with modern warehouses and lakehouses

-

Accuracy of BI lineage and dashboard dependencies

-

Visibility into pipelines and transformations

-

Alignment with identity and access management systems

Strong integrations reduce manual work and improve trust across teams.

Scalability and long-term maintenance considerations

Finally, assess how OpenMetadata will scale operationally and organizationally. Metadata volume, number of users, and governance complexity all increase over time.

Evaluate:

-

How permissions and ownership scale across domains

-

Metadata performance as asset counts grow

-

Ongoing stewardship effort required to keep metadata accurate

-

Sustainability of governance workflows in the long run

A successful OpenMetadata implementation is one that remains usable, trusted, and maintainable as your data landscape continues to expand.

How to evaluate DataHub?

Evaluating DataHub requires a slightly different lens than governance-first tools. Because DataHub is designed for automation, scale, and real-time metadata, the evaluation should focus on architecture fit, operational readiness, and long-term platform sustainability.

Architecture and metadata model comparison

DataHub is built on an event-driven, graph-based metadata architecture. This design determines how metadata changes propagate and how relationships are modeled across assets.

When evaluating the architecture, assess:

-

How metadata events flow from ingestion to storage and consumers

-

Whether the graph model supports complex dependency and impact analysis

-

How well the architecture aligns with your existing data platform design

This model is especially effective for automation-heavy environments, but it assumes teams are comfortable operating distributed systems.

|

Do you know: Several well-known companies like Netflix use DataHub in production to improve metadata visibility, discovery, and governance across their organizations. |

Comparing lineage capabilities, data discovery, and metadata depth

DataHub excels in technical and operational lineage, particularly for pipelines and transformations. Evaluation should focus on how complete and timely this lineage is across your stack.

Key areas to review include:

-

Coverage of pipeline, transformation, and schema lineage

-

The speed at which lineage updates reflect production changes

-

Ability to traverse the metadata graph for impact analysis

-

Availability of operational signals such as usage, freshness, and health

The strength of DataHub lies in metadata depth and accuracy, especially in fast-changing environments.

Upgrade, scaling, and maintenance effort

Operational effort is a critical factor when adopting DataHub. Its reliance on event streaming and multiple services introduces complexity that must be planned for.

During evaluation, consider:

-

Version upgrade processes and backward compatibility

-

Performance tuning as metadata volume grows

-

Monitoring and reliability of ingestion pipelines

-

Long-term engineering effort is required to keep the platform stable

Teams without dedicated platform ownership may find this overhead challenging.

Integration with analytics, BI, and orchestration tools

DataHub integrates deeply with modern data systems to keep metadata aligned with production workflows. Review how well it connects to your existing tools and how much customization is required.

Important integration points include:

-

Data warehouses and transformation tools for schema and dependency tracking

-

BI tools for dashboard-to-dataset lineage and impact analysis

-

Orchestration systems for pipeline context and execution metadata

-

Event-driven ingestion to keep metadata continuously in sync

A strong evaluation confirms not just integration availability, but how reliably those integrations operate at scale.

Taken together, DataHub is best evaluated as a metadata platform component rather than a standalone catalog. Success depends on architectural alignment, operational maturity, and a clear ownership model within the organization.

Open-source vs. OvalEdge: When to choose an enterprise metadata platform

Choosing between open-source metadata tools and an enterprise platform like OvalEdge depends on how mature your metadata practices are and how much operational responsibility your teams can realistically support.

Open-source platforms such as OpenMetadata and DataHub provide strong foundational capabilities for discovery, lineage, and metadata collection. They work well when teams have the engineering capacity to manage infrastructure, integrations, upgrades, and governance workflows internally.

As metadata usage expands across domains and business functions, many organizations find that stitching together open-source components becomes harder to sustain. Governance rules drift, ownership becomes inconsistent, and maintaining metadata quality requires increasing manual effort.

An enterprise metadata platform like OvalEdge brings these capabilities together into a unified, production-ready system. It combines automated discovery, lineage, governance, and metadata intelligence under a single control layer, reducing the need for custom scripts and ongoing platform maintenance.

This allows data teams to scale metadata adoption, governance, and trust without slowing down analytics or platform velocity.

Conclusion

Choosing between OpenMetadata and DataHub comes down to how your organization operates its data ecosystem. OpenMetadata suits teams that prioritize governance, usability, and faster adoption across analytics and business users.

DataHub fits engineering-led environments where automation, real-time metadata updates, and tight alignment with production systems matter most. Each platform addresses metadata challenges from a different angle, and the right choice depends on ownership models, scale, and operational maturity.

As data estates grow, many organizations discover that maintaining metadata quality, governance consistency, and platform reliability becomes harder with open-source tools alone. Managing infrastructure, upgrades, integrations, and stewardship workflows can pull focus away from analytics and decision-making.

This is where enterprise metadata platforms like OvalEdge come into the picture. OvalEdge builds on the foundations of metadata discovery and lineage by adding centralized governance, automation, and operational reliability designed for scale.

Book a demo with OvalEdge and see how unified metadata can power trusted, governed data across your enterprise.

FAQs

1. Is OpenMetadata better than DataHub for non-engineering teams?

OpenMetadata can be easier for mixed technical and business teams due to its UI-first approach. DataHub typically suits engineering-led organizations comfortable managing event-driven systems and infrastructure-heavy deployments.

2. Does DataHub require Kafka to run in production?

Yes, DataHub relies on Kafka for its event-driven metadata architecture. Teams must plan for Kafka setup, monitoring, and scaling, which increases operational complexity compared to simpler deployment models.

3. Can OpenMetadata and DataHub handle enterprise-scale metadata volumes?

Both platforms scale well, but in different ways. DataHub excels in high-throughput, real-time metadata environments, while OpenMetadata focuses on structured growth with unified metadata modeling and governance controls.

4. Which tool is easier to customize for governance workflows?

OpenMetadata offers more built-in governance constructs like domains, ownership, and policies. DataHub supports customization through extensions but often requires deeper engineering effort to implement governance-specific workflows.

5. Is it possible to migrate from DataHub to OpenMetadata or vice versa?

Migration is possible but non-trivial. Teams must map metadata models, lineage structures, and ingestion logic carefully, often requiring custom scripts or intermediary storage to avoid metadata loss.

6. Are OpenMetadata and DataHub suitable replacements for commercial data catalogs?

They can replace commercial tools for teams with strong engineering support. Organizations seeking faster time-to-value, managed operations, or broader governance coverage may still prefer enterprise-grade metadata platforms.

Deep-dive whitepapers on modern data governance and agentic analytics

OvalEdge Recognized as a Leader in Data Governance Solutions

.png?width=1081&height=173&name=Forrester%201%20(1).png)

“Reference customers have repeatedly mentioned the great customer service they receive along with the support for their custom requirements, facilitating time to value. OvalEdge fits well with organizations prioritizing business user empowerment within their data governance strategy.”

.png?width=1081&height=241&name=KC%20-%20Logo%201%20(1).png)

“Reference customers have repeatedly mentioned the great customer service they receive along with the support for their custom requirements, facilitating time to value. OvalEdge fits well with organizations prioritizing business user empowerment within their data governance strategy.”

Gartner, Magic Quadrant for Data and Analytics Governance Platforms, January 2025

Gartner does not endorse any vendor, product or service depicted in its research publications, and does not advise technology users to select only those vendors with the highest ratings or other designation. Gartner research publications consist of the opinions of Gartner’s research organization and should not be construed as statements of fact. Gartner disclaims all warranties, expressed or implied, with respect to this research, including any warranties of merchantability or fitness for a particular purpose.

GARTNER and MAGIC QUADRANT are registered trademarks of Gartner, Inc. and/or its affiliates in the U.S. and internationally and are used herein with permission. All rights reserved.

-1.png)