
Data Quality Management Framework: How to Build Reliable Data Systems

Data quality management is an essential element of data governance. However, to undertake it effectively, you need a dedicated tool. In this article, we explain what data quality management is and how to implement your data quality improvement strategy.

Imagine being a pilot whose navigation system is fed with inaccurate data. You might fly in the wrong direction or, worse, crash. Similarly, poor data quality leads to incorrect conclusions, poor decision-making, and lost opportunities.

According to IBM, poor data quality costs the U.S. economy around $3.1 trillion annually. Data scientists spend most of their time cleaning datasets instead of analyzing them. And when data is cleaned later in the pipeline, it introduces assumptions that can distort outcomes.

The best practice? Address data quality at the source, not downstream.

Data Quality Vs. Data Cleansing

Although they’re often used interchangeably, data quality and data cleansing are not the same.

- Data cleansing focuses on identifying and correcting errors or inconsistencies.

- Data quality measures the overall fitness of data for its intended use.

A strong data quality improvement strategy aims to minimize the need for data cleansing altogether by preventing errors at the point of data generation.

When assessing your organization’s data, consider the following key dimensions:

- Accuracy

- Completeness

- Consistency

- Validity

- Timeliness

- Uniqueness

- Integrity

Regular profiling and validation against these dimensions help track improvement over time.
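As a rough illustration of profiling, the Python sketch below scores a small, made-up set of customer records on three of these dimensions: completeness, uniqueness, and validity. The record layout, field names, and the deliberately simplified email pattern are assumptions for illustration, not part of any specific tool.

```python
import re

# Hypothetical customer records used only for illustration.
RECORDS = [
    {"id": 1, "email": "ana@example.com", "country": "US"},
    {"id": 2, "email": None, "country": "US"},
    {"id": 2, "email": "bob@example", "country": "DE"},
    {"id": 4, "email": "cy@example.org", "country": None},
]

# Simplified format check (assumption); real address validation is more involved.
EMAIL_PATTERN = re.compile(r"[^@\s]+@[^@\s]+\.[^@\s]+")


def completeness(records, field):
    """Share of records where `field` is present (not None or empty)."""
    filled = sum(1 for r in records if r.get(field) not in (None, ""))
    return filled / len(records)


def uniqueness(records, field):
    """Share of non-null values of `field` that are distinct."""
    values = [r[field] for r in records if r.get(field) is not None]
    return len(set(values)) / len(values) if values else 1.0


def validity(records, field, pattern):
    """Share of non-null values of `field` that fully match `pattern`."""
    values = [r[field] for r in records if r.get(field) is not None]
    if not values:
        return 1.0
    return sum(1 for v in values if pattern.fullmatch(v)) / len(values)


def profile(records):
    """Compute one score per (dimension, field) pair; 1.0 means no problems found."""
    return {
        "email_completeness": completeness(records, "email"),
        "country_completeness": completeness(records, "country"),
        "id_uniqueness": uniqueness(records, "id"),
        "email_validity": validity(records, "email", EMAIL_PATTERN),
    }


if __name__ == "__main__":
    for metric, score in profile(RECORDS).items():
        print(f"{metric}: {score:.2f}")
```

Running the same profile on a schedule and storing the scores is one simple way to track improvement over time. With this sample, email validity falls below 1.0 because `bob@example` has no domain suffix.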

Read more: What is Data Quality? Dimensions & Their Measurement

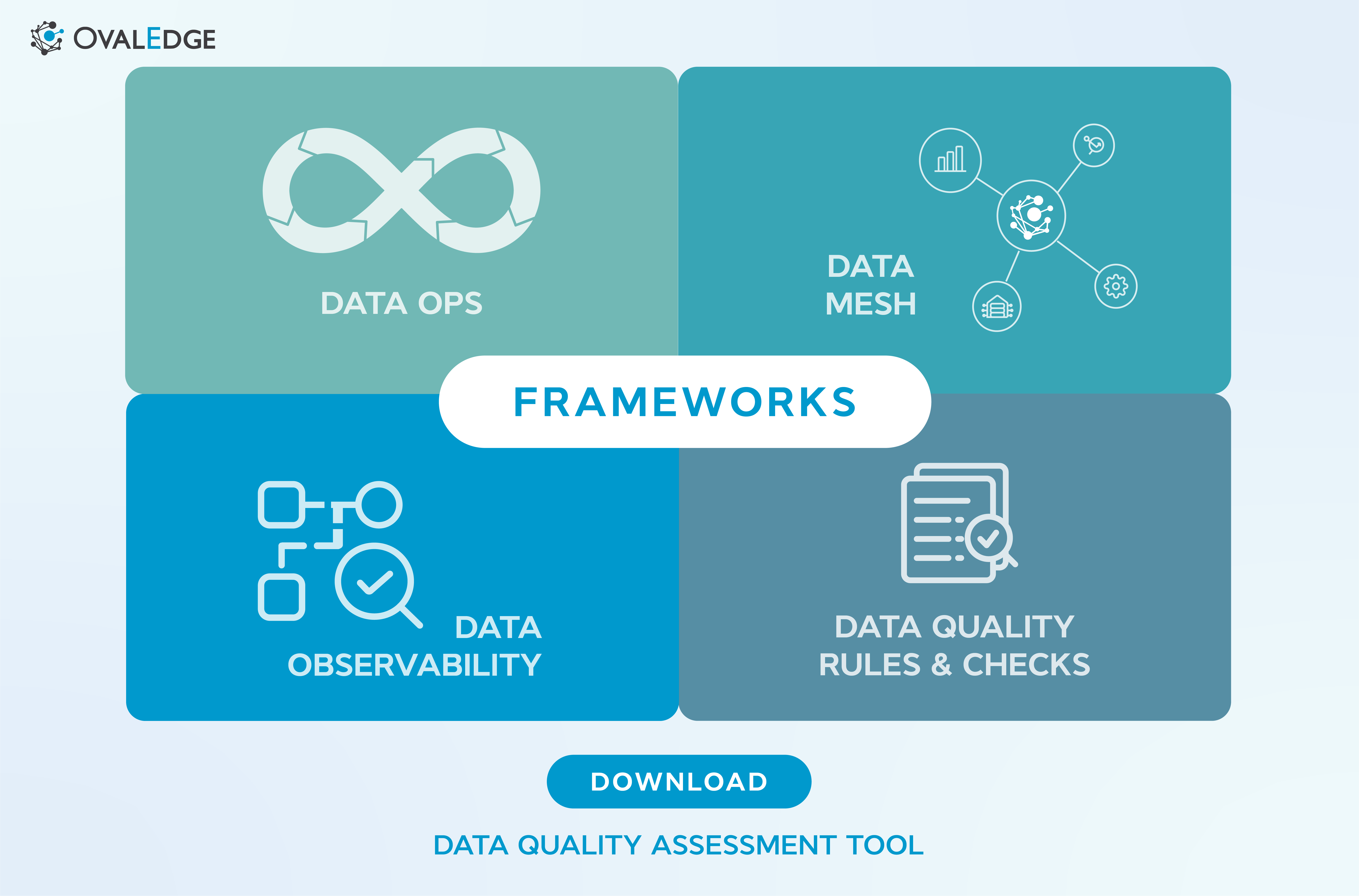

In this article, we discuss the methodologies, frameworks, and tools available for improving data quality.


Scope of Data Quality Management

Before improving data quality, it’s crucial to understand where it can go wrong. A comprehensive data quality framework considers three key phases:

1. Data Generation

Data originates from multiple sources: CRMs, ERPs, sensors, servers, and marketing platforms. Data from ERP and CRM systems tends to be more critical. The goal is to ensure high-quality data at the source to support decision-making and operational efficiency.

2. Data Movement

Data moves between applications for business processes and reporting. Common movement methods include:

- Integrations: Through APIs or transactions

- Data pipelines: Bulk transfers between systems

- Manual processes: Manual entry or uploads

Without proper controls, integrations can move poor-quality data downstream.

3. Data Consumption

Once consumed, data fuels marketing, forecasting, and analytics. If it is inaccurate or incomplete, it leads to poor insights and lost business opportunities. Effective data quality management tools help ensure that clean, validated data reaches the point of consumption.

Why does data quality suffer?

Even with good intentions, several systemic issues cause data quality degradation:

1. Weak System and Business Controls

Applications often lack validation checks. For instance, an email field may only verify the address format, not whether the address is real. Business-level validation (e.g., sending a confirmation email) confirms that the address exists and that the user can receive mail at it.
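The difference between a system-level format check and a business-level confirmation can be sketched in Python as follows. `EmailRegistry` is a hypothetical in-memory class, and actually sending the confirmation message is out of scope; the point is that a well-formed address stays unverified until its owner redeems the token.

```python
import re
import secrets

# Simplified format pattern (assumption), used only as the system-level check.
EMAIL_FORMAT = re.compile(r"[^@\s]+@[^@\s]+\.[^@\s]+")


class EmailRegistry:
    """Hypothetical store that tracks whether each email has been confirmed."""

    def __init__(self):
        self._pending = {}      # token -> email awaiting confirmation
        self.confirmed = set()  # emails whose owners redeemed a token

    def register(self, email):
        # System-level control: reject values that are not shaped like an email.
        if not EMAIL_FORMAT.fullmatch(email):
            raise ValueError(f"invalid email format: {email!r}")
        # Business-level control: issue a one-time token that would be mailed
        # to the address; the address counts as valid only once it is redeemed.
        token = secrets.token_urlsafe(16)
        self._pending[token] = email
        return token

    def confirm(self, token):
        """Redeem a token; returns False for unknown or already-used tokens."""
        email = self._pending.pop(token, None)
        if email is None:
            return False
        self.confirmed.add(email)
        return True

    def is_verified(self, email):
        return email in self.confirmed


if __name__ == "__main__":
    registry = EmailRegistry()
    token = registry.register("ana@example.com")
    print(registry.is_verified("ana@example.com"))  # format passed, not yet confirmed
    registry.confirm(token)
    print(registry.is_verified("ana@example.com"))
```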

2. Inconsistent Data Capture by Machines

Sensors and systems often collect data inconsistently due to missing definitions or a lack of customizable controls.

3. Integrations Without Quality Checks

Most integrations prioritize data availability, not accuracy. As a result, bad data moves seamlessly between systems.
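One way to give an integration a quality step, rather than passing everything through, is a gate that forwards valid records and quarantines the rest with the reasons attached. The Python sketch below assumes a hypothetical order record with `customer_id` and `amount` fields; the checks themselves are placeholders for whatever rules your business defines.

```python
def validate_order(record):
    """Hypothetical checks for an order record; returns a list of problems."""
    problems = []
    if not record.get("customer_id"):
        problems.append("missing customer_id")
    amount = record.get("amount")
    if not isinstance(amount, (int, float)) or amount < 0:
        problems.append("amount must be a non-negative number")
    return problems


def quality_gate(records, validate):
    """Split records into (forward, quarantine) instead of moving all of them downstream."""
    forward, quarantine = [], []
    for record in records:
        problems = validate(record)
        if problems:
            # Keep the reasons so the source team can fix the root cause.
            quarantine.append({"record": record, "problems": problems})
        else:
            forward.append(record)
    return forward, quarantine


if __name__ == "__main__":
    batch = [
        {"customer_id": "C1", "amount": 10.0},
        {"customer_id": "", "amount": -5},
    ]
    ok, held = quality_gate(batch, validate_order)
    print(f"forwarded {len(ok)}, quarantined {len(held)}")
    for item in held:
        print(item["problems"])
```

The design choice here is to hold bad records aside instead of silently dropping them, so the issue stays visible to the owning team.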

4. Fragile Data Pipelines

Pipelines often fail due to inconsistent schema design. For example, if a source field allows 120 characters but the target field allows only 100, longer values either break the load or are silently truncated.
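A pre-load compatibility check can catch the 120-versus-100 character mismatch before any data moves. The Python sketch below uses a hypothetical `Column` type that records only a name and maximum length; in practice, these widths would come from each system's schema metadata.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Column:
    name: str
    max_length: int  # maximum number of characters the column accepts


def length_conflicts(source, target):
    """Return a description of each source column that will not fit the target.

    Columns are matched by name; columns missing from the target are reported too.
    """
    target_by_name = {c.name: c for c in target}
    problems = []
    for col in source:
        tgt = target_by_name.get(col.name)
        if tgt is None:
            problems.append(f"{col.name}: missing in target")
        elif col.max_length > tgt.max_length:
            problems.append(
                f"{col.name}: source allows {col.max_length} chars, "
                f"target only {tgt.max_length}"
            )
    return problems


if __name__ == "__main__":
    source = [Column("customer_name", 120), Column("city", 50)]
    target = [Column("customer_name", 100), Column("city", 50)]
    for problem in length_conflicts(source, target):
        print(problem)
```

Running a check like this in the deployment process for pipeline changes turns a runtime failure (or silent truncation) into a review-time warning.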

5. Duplicated Business Processes

When marketing, sales, and finance manage separate customer datasets, duplication and inconsistency become inevitable.
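To see how separate departmental lists drift into duplicates, the Python sketch below groups records from several hypothetical sources by a normalized email key and reports keys that appear in more than one source. Using a trimmed, lowercased email as the matching key is an assumption for illustration; real matching usually combines several attributes.

```python
from collections import defaultdict

# Hypothetical extracts from three departmental customer lists.
MARKETING = [{"name": "Ana Silva", "email": "Ana.Silva@Example.com "}]
SALES = [
    {"name": "Ana  Silva", "email": "ana.silva@example.com"},
    {"name": "Bo Chen", "email": "bo@example.com"},
]
FINANCE = [{"name": "A. Silva", "email": "ana.silva@example.com"}]


def normalize_email(email):
    """Trim whitespace and lowercase so trivially different spellings match."""
    return email.strip().lower()


def find_cross_team_duplicates(sources):
    """Group records that share a normalized email across different sources.

    `sources` maps a source name to its list of records. Returns
    {normalized email: [(source, name), ...]} for keys seen in 2+ sources.
    """
    seen = defaultdict(list)
    for source_name, records in sources.items():
        for record in records:
            seen[normalize_email(record["email"])].append((source_name, record["name"]))
    return {
        key: hits
        for key, hits in seen.items()
        if len({source for source, _ in hits}) > 1
    }


if __name__ == "__main__":
    dups = find_cross_team_duplicates(
        {"marketing": MARKETING, "sales": SALES, "finance": FINANCE}
    )
    for key, hits in dups.items():
        print(key, hits)
```

Note that the three names differ even though they clearly describe one customer, which is exactly the inconsistency the paragraph above describes.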

Understanding these causes helps define a targeted data quality improvement strategy tailored to your organization’s needs.

Different frameworks to manage data quality

Data quality is a multi-dimensional challenge, and no single framework can solve it all. Let’s explore some leading data quality management frameworks and approaches used by modern enterprises.

1. DataOps

DataOps applies DevOps principles to data management, improving collaboration and automation across teams. It streamlines the entire data lifecycle from collection and storage to analysis and reporting, ensuring efficiency and traceability.

2. Data Observability

This approach tracks data pipeline performance and system health. By analyzing operational metrics, data observability helps detect anomalies early and maintain reliability across systems.
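A very small example of an observability-style check is flagging a day whose row count deviates sharply from recent history. The Python sketch below uses a trailing-window z-score; the window size and threshold are arbitrary assumptions, and dedicated observability tools typically model seasonality and trends more carefully.

```python
import statistics


def row_count_anomalies(daily_counts, window=7, threshold=3.0):
    """Flag days whose row count deviates sharply from the preceding window.

    Returns a list of (day_index, count, z_score) tuples. Days before the
    first full window are not evaluated.
    """
    anomalies = []
    for i in range(window, len(daily_counts)):
        history = daily_counts[i - window:i]
        mean = statistics.mean(history)
        stdev = statistics.stdev(history)
        if stdev == 0:
            # Flat history: any change at all is unexpected.
            if daily_counts[i] != mean:
                anomalies.append((i, daily_counts[i], float("inf")))
            continue
        z = (daily_counts[i] - mean) / stdev
        if abs(z) > threshold:
            anomalies.append((i, daily_counts[i], round(z, 2)))
    return anomalies


if __name__ == "__main__":
    # Hypothetical daily row counts for one table; the last load is suspiciously small.
    counts = [1000, 1010, 990, 1005, 995, 1002, 998, 120]
    for day, count, z in row_count_anomalies(counts):
        print(f"day {day}: {count} rows (z = {z})")
```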

3. Data Mesh

A Data Mesh decentralizes data ownership across teams, empowering them to manage their domains independently. It promotes autonomy while maintaining consistency through standardized governance policies.

4. Data Quality Rules and Checks

Automated data quality checks monitor accuracy, completeness, and consistency. They flag missing values, duplicates, or incorrect formats, allowing teams to take corrective action before problems spread.
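The kind of rule-based checks described above can be sketched in Python as small, reusable rule objects: one each for missing values, duplicates, and incorrect formats. The `Rule` type, the helper names, and the sample order data are hypothetical and not taken from any particular product.

```python
import re
from dataclasses import dataclass
from typing import Callable, Dict, List

Row = Dict[str, object]


@dataclass
class Rule:
    name: str
    check: Callable[[List[Row]], List[int]]  # returns indices of failing rows


def not_null(field: str) -> Rule:
    """Completeness: the field must be present."""
    return Rule(f"{field} not null",
                lambda rows: [i for i, r in enumerate(rows) if r.get(field) is None])


def unique(field: str) -> Rule:
    """Uniqueness: flag every repeat after the first occurrence (nulls ignored)."""
    def check(rows: List[Row]) -> List[int]:
        seen, repeats = set(), []
        for i, r in enumerate(rows):
            value = r.get(field)
            if value is None:
                continue
            if value in seen:
                repeats.append(i)
            seen.add(value)
        return repeats
    return Rule(f"{field} unique", check)


def matches(field: str, pattern: str) -> Rule:
    """Validity: non-null values must fully match a regular expression."""
    regex = re.compile(pattern)
    return Rule(f"{field} matches {pattern}",
                lambda rows: [i for i, r in enumerate(rows)
                              if r.get(field) is not None
                              and not regex.fullmatch(str(r[field]))])


def run_rules(rows: List[Row], rules: List[Rule]) -> Dict[str, List[int]]:
    """Return {rule name: failing row indices}, keeping only rules that failed."""
    results = {rule.name: rule.check(rows) for rule in rules}
    return {name: failed for name, failed in results.items() if failed}


# Hypothetical order extract and rule set.
ROWS = [
    {"order_id": "A-1", "amount": "19.99"},
    {"order_id": "A-2", "amount": None},
    {"order_id": "A-1", "amount": "5,00"},
]
RULES = [not_null("amount"), unique("order_id"), matches("amount", r"\d+\.\d{2}")]

if __name__ == "__main__":
    for name, failed_rows in run_rules(ROWS, RULES).items():
        print(f"{name}: rows {failed_rows}")
```

Returning row indices rather than a simple pass/fail makes it easier to route specific records to the team that owns them.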

No single approach fits all. The most effective data quality management framework combines multiple methodologies to meet unique organizational needs.

Why is data governance a must?

Despite their differences in focus, all of the frameworks above share one requirement: a unified governance structure. This structure ensures that data is used and managed consistently and competently across all teams and departments.

A federated or unified governance approach can help ensure data quality, security, and compliance while allowing teams to work autonomously.

As we mentioned at the start, data quality is one of the core outcomes of a data governance initiative. As a result, a key concern for data governance teams, groups, and departments is improving the overall data quality. But there is a problem: coordination.

The data governance and quality team may not have the magic wand to fix all problems. Still, they are the wizards behind the curtain, guiding various teams to work together, prioritize, and conduct a thorough root cause analysis. A common-sense framework they use is the Data Quality Improvement Lifecycle (DQIL).

For the DQIL to succeed, a tool must be in place that includes all teams in the process and lets them measure and communicate their efforts to management. Generally, this platform combines a data catalog, workflows, and collaboration features to bring everyone together so that data quality efforts can be measured.

Data Quality Improvement Lifecycle (DQIL)

The Data Quality Improvement Lifecycle (DQIL) is a framework of predefined steps that detect and prioritize data quality issues, enhance data quality using specific processes, and route errors back to users and leadership so the same problems don’t arise again in the future.

The steps of the data quality improvement lifecycle are:

- Identify: Define and collect data quality issues, where they occur, and their business value.

- Prioritize: Prioritize data quality issues by their value and business impact.

- Analyze for Root Cause: Perform a root cause analysis on the data quality issue.

- Improve: Fix the data quality issue through ETL fixes, process changes, master data management, or manual correction.

- Control: Use data quality rules to prevent future issues.

Overall data quality improves as issues are continuously identified and controls are created and implemented.
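To make the lifecycle concrete, the Python sketch below models an issue that moves through the five DQIL stages in order, plus a simple prioritization by business impact and then by records affected. The 1 to 5 impact scale and the sort order are illustrative assumptions, not a prescribed scoring method.

```python
from dataclasses import dataclass, field
from enum import Enum


class Stage(Enum):
    IDENTIFY = 1
    PRIORITIZE = 2
    ANALYZE = 3   # root cause analysis
    IMPROVE = 4
    CONTROL = 5


@dataclass
class Issue:
    title: str
    business_impact: int      # hypothetical scale: 1 (low) to 5 (critical)
    records_affected: int
    stage: Stage = Stage.IDENTIFY
    history: list = field(default_factory=list)

    def advance(self, note=""):
        """Move to the next DQIL stage, recording what was done in the current one."""
        if self.stage is Stage.CONTROL:
            raise ValueError("issue is already under control")
        self.history.append((self.stage.name, note))
        self.stage = Stage(self.stage.value + 1)


def prioritize(issues):
    """Order issues by business impact first, then by how many records they touch."""
    return sorted(issues, key=lambda i: (i.business_impact, i.records_affected),
                  reverse=True)


if __name__ == "__main__":
    issues = [
        Issue("Missing postal codes", 2, 5000),
        Issue("Duplicate customers", 4, 800),
        Issue("Wrong currency on invoices", 5, 120),
    ]
    for issue in prioritize(issues):
        print(issue.business_impact, issue.title)
```

Keeping the per-stage notes in `history` is one way to capture why an issue occurred and how it was fixed, so the same problem is easier to recognize if it returns.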

Learn more about Best Practices for Improving Data Quality with the Data Quality Improvement Lifecycle

Why You Need the Right Data Quality Management Tools

Implementing a data quality framework at scale requires robust tooling to automate validation, enforce rules, and track performance.

A good data quality management tool should:

- Automate profiling, validation, and issue detection.

- Integrate seamlessly with your data catalog or warehouse.

- Support collaboration and workflow automation.

- Offer dashboards for tracking quality metrics.

At OvalEdge, we bring all these capabilities into one unified platform, enabling organizations to catalog, govern, and improve data quality from source to consumption.

Our platform supports DQIL-based workflows, automated data quality rules, and real-time monitoring for continuous improvement.

Wrap up

You now know where and why poor-quality data occurs, which emerging frameworks help manage data quality, and how the data quality improvement lifecycle addresses data quality problems.

One more important step is to socialize data quality work across the company so that everyone knows how data quality is being improved.

At OvalEdge, we can do this by housing a data catalog and managing data quality in our unified platform. OvalEdge has many features to tackle data quality challenges and implement best practices. The data quality improvement lifecycle can be wholly managed through OvalEdge, capturing the context of why a data quality issue occurred.

Data quality rules monitor data objects and notify the correct person if a problem arises. You can communicate to your users which assets have active data quality issues while the team works to resolve them.

Track your organization’s data quality based on the number of issues found and their business impact. This information can be presented in a report to track monthly improvements to your data’s quality.
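A monthly roll-up of issue counts and total business impact could look like the Python sketch below. The input format, pairs of an opening date and a hypothetical 1 to 5 impact score, is an assumption chosen to keep the example small.

```python
from collections import defaultdict
from datetime import date


def monthly_report(issues):
    """Summarize issues per month as (issue count, total business impact).

    `issues` is an iterable of (opened_on: date, business_impact: int) pairs.
    Months are returned in chronological order as "YYYY-MM" keys.
    """
    summary = defaultdict(lambda: [0, 0])
    for opened_on, impact in issues:
        key = opened_on.strftime("%Y-%m")
        summary[key][0] += 1
        summary[key][1] += impact
    return {month: tuple(totals) for month, totals in sorted(summary.items())}


if __name__ == "__main__":
    # Hypothetical issue log.
    log = [(date(2025, 1, 5), 4), (date(2025, 1, 20), 2), (date(2025, 2, 3), 1)]
    for month, (count, impact) in monthly_report(log).items():
        print(f"{month}: {count} issues, total impact {impact}")
```

Falling totals from month to month are one simple signal that the controls put in place are working.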

With OvalEdge, we can help you achieve data quality at the source level, following best practices, but if any downstream challenges exist, our technology can help you address and overcome them.

FAQs

- What is a data quality management framework?

It’s a structured approach that defines processes, tools, and roles to ensure data accuracy, completeness, and reliability throughout its lifecycle.

- What are common challenges in managing data quality?

Inconsistent capture, lack of validation controls, duplicate records, and siloed processes are the biggest hurdles organizations face.

- How does data governance support data quality management?

Data governance defines standards, ownership, and accountability, ensuring all teams maintain consistent data quality practices.

- What tools are used for data quality management?

Tools like OvalEdge, Talend, and Informatica help automate validation, profiling, and monitoring, forming the backbone of any robust data quality improvement strategy.


OvalEdge recognized as a leader in data governance solutions


“Reference customers have repeatedly mentioned the great customer service they receive along with the support for their custom requirements, facilitating time to value. OvalEdge fits well with organizations prioritizing business user empowerment within their data governance strategy.”



Gartner, Magic Quadrant for Data and Analytics Governance Platforms, January 2025

Gartner does not endorse any vendor, product or service depicted in its research publications, and does not advise technology users to select only those vendors with the highest ratings or other designation. Gartner research publications consist of the opinions of Gartner’s research organization and should not be construed as statements of fact. Gartner disclaims all warranties, expressed or implied, with respect to this research, including any warranties of merchantability or fitness for a particular purpose.

GARTNER and MAGIC QUADRANT are registered trademarks of Gartner, Inc. and/or its affiliates in the U.S. and internationally and are used herein with permission. All rights reserved.
