Data Lifecycle Management: What It Is, How It Works & Why It Matters

Data Lifecycle Management (DLM) transforms chaotic data environments into structured, compliant ecosystems. This guide breaks down every stage of the lifecycle and shows how to build policies, automate workflows, and enforce compliance. It highlights key practices such as classification, storage optimization, and governance integration, which help organizations reduce risk, control costs, and maintain trustworthy, audit-ready data.

Data is growing faster than most businesses can control, and it’s not just a storage problem anymore. It’s a governance nightmare.

From outdated spreadsheets to forgotten cloud files, most organizations are drowning in fragmented, unclassified, and untracked data. And it’s costing them: financially, operationally, and legally.

In a 2024 press release, Gartner predicted that by 2027, up to 80% of data and analytics governance initiatives will fail, not for lack of tools, but because they’re reactive and disconnected from business needs.

That’s where Data Lifecycle Management (DLM) comes in. It’s a structured approach to managing data from creation to deletion, so you don’t just collect data, you control it.

In this guide, you’ll learn how to build a practical DLM strategy, define lifecycle stages, implement policies, and avoid the most common traps holding businesses back.

What is data lifecycle management?

Data Lifecycle Management (DLM) is a structured approach to managing data from the moment it’s created to when it’s archived or deleted. It ensures data is stored securely, used responsibly, and removed when no longer needed, improving compliance, cost-efficiency, and data quality.

DLM helps organizations govern growing volumes of data by assigning rules and controls at every stage of its journey. From collection and storage to sharing and disposal, every step is tracked and optimized for business value and regulatory compliance.

The core purpose of DLM

At its core, DLM exists to prevent data chaos. As businesses scale, data piles up across systems, teams, and tools. DLM brings order by:

- Reducing storage and infrastructure costs

- Minimizing compliance and privacy risks (e.g., GDPR, HIPAA)

- Improving data accessibility and quality

- Enabling secure, policy-driven data usage

Benefits of implementing DLM

Implementing a strong DLM framework offers both strategic and operational gains:

- Reduced data sprawl: DLM helps eliminate duplicates, outdated files, and shadow data, reducing clutter and improving data visibility across systems.

- Stronger compliance: Automated retention and deletion policies ensure regulatory requirements like GDPR or HIPAA are consistently met.

- Better decision-making: With cleaner, well-governed data, teams can access trustworthy information that drives faster, more accurate insights.

- Cost savings: By moving inactive data to cold or archival tiers, DLM optimizes storage spend and reduces infrastructure load.

- Improved governance: Clear ownership, role-based access, and lifecycle policies promote accountability and enforce enterprise-wide data standards.

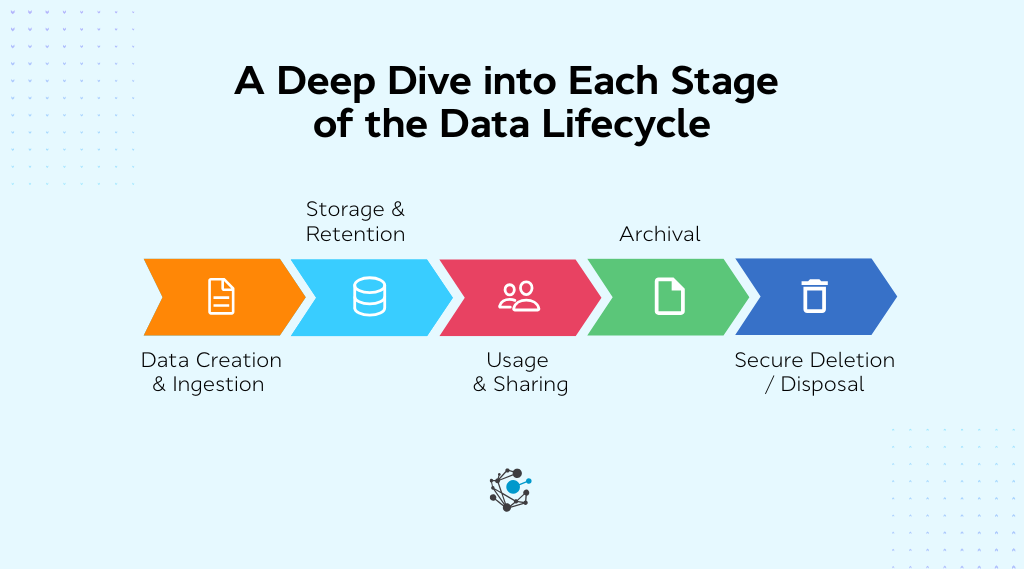

What are the stages of the data lifecycle?

The data lifecycle refers to the complete journey data takes, from the moment it's created to when it’s permanently deleted. Understanding these stages helps organizations apply the right controls, tools, and policies at each step.

Stage 1 – Data creation & ingestion

This is where data enters your ecosystem. It could come from customer forms, APIs, IoT sensors, transactional systems, emails, or even partner uploads. Some of it is generated by machines (e.g., logs), some by humans, and some is third-party licensed or acquired.

Why it matters: If you ingest incomplete, redundant, or unstructured data without tagging or validation, every downstream process, like storage, access, analytics, and security, gets compromised. Most data quality issues originate here.

Best practices:

- Implement metadata tagging at the source to classify data by origin, purpose, and sensitivity. This supports automation later in the lifecycle.

- Use input validation and cleansing at the point of capture to eliminate errors, duplicates, and non-standard entries.

- Apply consistent naming conventions and business glossaries across systems to unify ingestion processes.

- Immediately identify and flag PII or sensitive data for appropriate controls like masking and encryption.

Example: A retail company capturing online orders through a web form uses a tagging engine to label each entry with customer ID, order source (website, app), transaction time, and a PII flag, enabling accurate classification and access controls downstream.
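Tagging at the point of capture can be sketched in a few lines of Python. This is a minimal illustration, not a production tagging engine: the `tag_order` function, the `TaggedRecord` type, and the email regex standing in for a PII detector are all hypothetical.

```python
import re
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Assumption: a simple email matcher stands in for a real PII detector.
EMAIL_RE = re.compile(r"[^@\s]+@[^@\s]+\.[^@\s]+")


@dataclass
class TaggedRecord:
    payload: dict
    tags: dict = field(default_factory=dict)


def tag_order(payload: dict, source: str) -> TaggedRecord:
    """Attach origin, capture time, and a PII flag to an incoming order."""
    contains_pii = any(
        isinstance(value, str) and EMAIL_RE.search(value)
        for value in payload.values()
    )
    tags = {
        "customer_id": payload.get("customer_id"),
        "order_source": source,
        "ingested_at": datetime.now(timezone.utc).isoformat(),
        "pii": contains_pii,
    }
    return TaggedRecord(payload=payload, tags=tags)


record = tag_order(
    {"customer_id": "C-1001", "email": "ana@example.com", "total": 42.5},
    source="website",
)
```

Because the tags travel with the record, later stages can route on `record.tags["pii"]` instead of re-scanning the payload.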

Stage 2 – Storage & retention

Once data is ingested, it needs to be stored in a location that balances performance, cost, access frequency, and compliance. This includes relational databases, data warehouses, cloud object storage, or on-premise file systems.

Why it matters: Inefficient storage design leads to high infrastructure costs, sluggish system performance, and increased security exposure. Without retention rules, data piles up indefinitely, making audits and legal response times more difficult.

Best practices:

- Use tiered storage to align cost with data value: hot (frequent use), warm (occasional), cold (rare), and archive.

- Set automated retention rules per data type, department, or compliance requirement (e.g., 5 years for financial records).

- Encrypt data at rest, especially for sensitive categories like health or payment data, and rotate keys periodically.

- Consolidate storage systems where possible to reduce data silos, making governance and lineage tracking easier.

Example: A financial institution stores recent transaction records in a high-speed data warehouse for daily reporting and archives data older than 90 days to encrypted object storage with 7-year retention rules.
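A tiering rule of this kind reduces to a lookup on how long data has been idle. The sketch below assumes illustrative thresholds of 30, 90, and 365 days; the `choose_tier` function and the threshold values are hypothetical and would come from your own policy.

```python
from datetime import date

# Assumption: illustrative idle-day limits per tier; tune these per policy.
TIER_THRESHOLDS = [(30, "hot"), (90, "warm"), (365, "cold")]


def choose_tier(last_accessed: date, today: date) -> str:
    """Return the storage tier for data based on days since last access."""
    idle_days = (today - last_accessed).days
    for limit, tier in TIER_THRESHOLDS:
        if idle_days <= limit:
            return tier
    return "archive"


today = date(2024, 6, 10)
recent_tier = choose_tier(date(2024, 6, 1), today)
stale_tier = choose_tier(date(2023, 1, 1), today)
```

Keeping the thresholds in one table makes the rule easy to review alongside the written retention policy.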

Stage 3 – Usage & sharing

Data is queried, processed, visualized, or exported during this phase, either by internal teams (analytics, ops, marketing) or shared with external partners, regulators, or vendors.

Why it matters: This stage is high-risk. Without proper access controls or usage policies, sensitive data can be leaked, misused, or misinterpreted. It’s also the most common source of audit findings.

Best practices:

- Enforce granular access controls using Role-Based (RBAC) or Attribute-Based Access Control (ABAC).

- Classify data based on sensitivity (e.g., public, confidential, restricted) and apply usage restrictions accordingly.

- Maintain detailed access logs and share audit trails to track who accessed what, when, and how.

- Apply dynamic masking or tokenization for sensitive fields when full access isn't needed (e.g., analytics users).

Example: A marketing team accessing CRM data sees only anonymized email addresses and location data for campaign segmentation, while customer support retains access to full records for service delivery.
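Role-aware masking like this can be approximated as follows. The role names, field list, and `view_record` function are hypothetical. Note that an unsalted hash is only pseudonymization (guessable values can be recovered), so treat it as a stand-in for a proper tokenization service.

```python
import hashlib

# Assumptions: which roles see raw values and which fields count as sensitive.
FULL_ACCESS_ROLES = {"support"}
SENSITIVE_FIELDS = {"email", "phone"}


def mask_value(value: str) -> str:
    """Replace a value with a stable, non-readable token."""
    digest = hashlib.sha256(value.encode("utf-8")).hexdigest()
    return f"masked:{digest[:12]}"


def view_record(record: dict, role: str) -> dict:
    """Return the record as the given role is allowed to see it."""
    if role in FULL_ACCESS_ROLES:
        return dict(record)
    return {
        key: mask_value(str(value)) if key in SENSITIVE_FIELDS else value
        for key, value in record.items()
    }


crm_row = {"email": "ana@example.com", "phone": "555-0100", "city": "Austin"}
marketing_view = view_record(crm_row, "marketing")
support_view = view_record(crm_row, "support")
```

The token is deterministic, so marketing can still count or join on distinct customers without seeing the underlying address.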

Stage 4 – Archival

Data that is no longer active but must be retained for legal, compliance, or historical analysis reasons is moved to long-term archival storage.

Why it matters: Archiving reduces the load on operational systems while ensuring critical records are preserved. Failure to archive can cause performance degradation or regulatory breaches.

Best practices:

- Set archival triggers based on inactivity duration, business rules, or workflow completions (e.g., 12 months of no access).

- Automate archival to shift data from primary to long-term storage platforms (e.g., Amazon S3 Glacier or the Azure Blob Storage archive tier).

- Ensure discoverability: archived data must remain indexed and retrievable for audits or investigations.

- Adhere to industry-specific retention mandates (e.g., 7 years for tax records, indefinite for certain medical data).

Example: A healthcare system archives patient records 7 years after the last visit but keeps them indexed in a secure archive platform that allows medical-legal access when needed.
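The inactivity trigger and the discoverability requirement can be combined in one small routine. In this sketch, "12 months of no access" is approximated as 365 days, and the `archive_inactive` function, asset fields, and `archive/` location scheme are hypothetical.

```python
from datetime import datetime, timedelta

# Assumption: "12 months of no access" is approximated as 365 days.
ARCHIVE_AFTER = timedelta(days=365)


def archive_inactive(assets: list, now: datetime, index: dict) -> list:
    """Mark inactive assets as archived and record them in a searchable index."""
    moved = []
    for asset in assets:
        idle = now - asset["last_accessed"]
        if not asset.get("archived") and idle >= ARCHIVE_AFTER:
            asset["archived"] = True
            # The index entry keeps archived data discoverable for audits.
            index[asset["id"]] = {
                "location": f"archive/{asset['id']}",
                "archived_at": now.isoformat(),
            }
            moved.append(asset["id"])
    return moved


now = datetime(2025, 1, 1)
assets = [
    {"id": "contracts-2022", "last_accessed": datetime(2023, 6, 1)},
    {"id": "orders-2024", "last_accessed": datetime(2024, 12, 1)},
]
archive_index = {}
moved = archive_inactive(assets, now, archive_index)
```

Here only `contracts-2022` crosses the threshold, and its index entry records where it went and when.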

Stage 5 – Secure deletion / Disposal

At the end of its lifecycle, data must be securely deleted, either due to expiration of its retention period or based on user request (e.g., under GDPR’s right to be forgotten).

Why it matters: Retaining expired or irrelevant data increases legal liability, storage costs, and the risk of unauthorized access. Improper deletion practices can violate privacy laws and attract heavy fines.

Best practices:

- Use automated deletion workflows tied to retention expiration or business logic.

- Apply recognized sanitization standards, such as DoD 5220.22-M or NIST SP 800-88, especially for sensitive or regulated data.

- Maintain immutable deletion logs to prove when, why, and how data was removed.

- Honor Data Subject Requests (DSRs) by enabling secure deletion across all systems, including backups and replicas.

Example: A SaaS platform deletes user account data three years after inactivity, triggers a secure wipe across production and backup environments, and logs the action in a centralized compliance dashboard.
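One way to make a deletion log tamper-evident is to chain entries by hash, so any later edit breaks verification. This is a sketch under that assumption. The function names and entry fields are hypothetical, and a hash chain only detects tampering; true immutability still needs write-once storage.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64


def _digest(entry_body: dict) -> str:
    """Hash an entry's fields in a stable key order."""
    encoded = json.dumps(entry_body, sort_keys=True).encode("utf-8")
    return hashlib.sha256(encoded).hexdigest()


def append_deletion(log: list, record_id: str, reason: str, deleted_at: str) -> dict:
    """Append a deletion entry linked to the hash of the previous entry."""
    entry = {
        "record_id": record_id,
        "reason": reason,
        "deleted_at": deleted_at,
        "prev_hash": log[-1]["hash"] if log else GENESIS_HASH,
    }
    entry["hash"] = _digest(entry)
    log.append(entry)
    return entry


def verify_log(log: list) -> bool:
    """Return True only if no entry was altered, removed, or reordered."""
    prev_hash = GENESIS_HASH
    for entry in log:
        body = {k: v for k, v in entry.items() if k != "hash"}
        if entry["prev_hash"] != prev_hash or entry["hash"] != _digest(body):
            return False
        prev_hash = entry["hash"]
    return True


deletion_log = []
append_deletion(deletion_log, "user-481", "retention expired", "2025-01-01T00:00:00Z")
append_deletion(deletion_log, "user-502", "data subject request", "2025-01-02T09:30:00Z")
log_is_intact = verify_log(deletion_log)
```

Each entry captures the when (`deleted_at`) and why (`reason`) that auditors ask for, and `verify_log` can run as part of a periodic compliance check.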

How to build a data lifecycle management policy

A Data Lifecycle Management (DLM) policy defines how data should be created, stored, accessed, archived, and deleted, ensuring consistency, reducing risks, and supporting regulatory obligations.

Here’s a step-by-step guide to build a DLM policy that’s actionable, aligned with your business needs, and ready to scale.

Step 1: Identify and classify your organization’s data

The first step in building a DLM policy is gaining full visibility into all the data your organization generates, collects, or stores. This includes structured data in databases, unstructured data in documents or emails, and semi-structured formats like JSON or XML files. Classification helps prioritize what needs stricter controls or longer retention.

Actionable steps:

- Use automated data discovery tools to scan systems and create an inventory of all data assets.

- Develop a data classification framework that segments data by sensitivity (e.g., Public, Internal, Confidential, Restricted).

- Interview business stakeholders to understand where critical data lives and how it’s used daily.
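A classification framework with the four levels above can be modeled as an ordered enum, so the strictest matching rule wins. The keyword rules and the `classify_asset` function below are hypothetical; real discovery tools inspect content as well as column names.

```python
from enum import IntEnum


class Sensitivity(IntEnum):
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2
    RESTRICTED = 3


# Assumption: illustrative keyword rules keyed on column names.
KEYWORD_RULES = {
    "ssn": Sensitivity.RESTRICTED,
    "diagnosis": Sensitivity.RESTRICTED,
    "salary": Sensitivity.CONFIDENTIAL,
    "email": Sensitivity.CONFIDENTIAL,
}


def classify_asset(column_names, default=Sensitivity.INTERNAL):
    """Return the highest sensitivity level triggered by any column name."""
    level = default
    for name in column_names:
        for keyword, rule_level in KEYWORD_RULES.items():
            if keyword in name.lower():
                level = max(level, rule_level)
    return level


hr_level = classify_asset(["employee_id", "Employee_SSN", "salary_band"])
catalog_level = classify_asset(["sku", "price"])
```

Using an ordered type means downstream rules can compare levels directly, for example requiring encryption for anything at `CONFIDENTIAL` or above.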

Step 2: Map lifecycle stages to each data category

Different data types follow different journeys. Some may be short-lived (e.g., session logs), while others may require long-term retention (e.g., legal documents). Mapping lifecycle stages ensures your policy reflects how data flows in reality.

Actionable steps:

- Create data lifecycle flowcharts for key data categories (customer data, employee data, financial records, etc.).

- Define lifecycle transition triggers, like inactivity thresholds, policy expiry, or business events.

- Align lifecycle maps with your data governance framework to ensure policy consistency.
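A lifecycle map is essentially a small state machine, and writing it down as one makes illegal moves explicit. The stage names and allowed transitions below are assumptions for illustration; your own map may differ per data category.

```python
# Assumption: one possible set of stages and allowed moves between them.
ALLOWED_TRANSITIONS = {
    "created": {"stored"},
    "stored": {"in_use", "archived", "deleted"},
    "in_use": {"stored", "archived"},
    "archived": {"in_use", "deleted"},
    "deleted": set(),
}


def transition(current: str, target: str) -> str:
    """Return the new stage, or raise if the lifecycle map forbids the move."""
    if target not in ALLOWED_TRANSITIONS.get(current, set()):
        raise ValueError(f"transition {current!r} -> {target!r} is not allowed")
    return target


stage = "created"
for next_stage in ("stored", "in_use", "archived", "deleted"):
    stage = transition(stage, next_stage)
```

Because `deleted` has no outgoing transitions, any attempt to revive deleted data fails loudly instead of silently succeeding.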

Step 3: Set retention rules and archival guidelines

Retention is at the heart of DLM. Hold onto data too long, and you increase storage costs and legal exposure. Delete too early, and you risk losing critical history or breaching regulations. Your policy should balance legal mandates with business value.

Actionable steps:

- Review relevant regulations (e.g., GDPR, CCPA, HIPAA) and translate them into data-specific retention timelines.

- Establish clear archival triggers, e.g., move sales data to cold storage after 12 months of inactivity.

- Document exceptions where data must be held beyond normal limits (e.g., ongoing litigation or audits).
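Retention timelines and their exceptions can be expressed as a simple decision function. The categories and day counts here are placeholders rather than legal guidance, and the `disposition` function is hypothetical. The point is that a legal hold overrides normal expiry.

```python
from datetime import date, timedelta

# Assumption: placeholder retention periods in days, not legal advice.
RETENTION_DAYS = {
    "financial_record": 7 * 365,
    "session_log": 30,
}


def disposition(category: str, created: date, today: date, legal_hold: bool = False) -> str:
    """Return 'hold', 'delete', or 'retain' for a record."""
    if legal_hold:
        # Exceptions such as litigation or audits take precedence over expiry.
        return "hold"
    expires_on = created + timedelta(days=RETENTION_DAYS[category])
    return "delete" if today >= expires_on else "retain"


today = date(2024, 3, 1)
log_action = disposition("session_log", date(2024, 1, 1), today)
held_action = disposition("session_log", date(2024, 1, 1), today, legal_hold=True)
finance_action = disposition("financial_record", date(2020, 1, 1), today)
```

An unknown category raises `KeyError`, which is deliberate in this sketch: data without a documented retention rule should be surfaced, not silently kept or deleted.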

Step 4: Define roles and responsibilities across teams

Policies fail when no one owns them. Assigning roles ensures accountability for maintaining, enforcing, and reviewing DLM practices. These roles often span IT, legal, security, and business teams, so clarity is key.

Actionable steps:

- Build a RACI matrix that outlines who is Responsible, Accountable, Consulted, and Informed for each lifecycle activity.

- Designate Data Stewards for business units to oversee implementation at the team level.

- Integrate DLM responsibilities into existing roles like compliance officers or IT administrators to avoid duplication.

Defining data stewardship roles at this stage ensures ongoing ownership of data quality and compliance. Learn more in our detailed guide on Data Stewardship and how it complements lifecycle governance.
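A RACI matrix is easier to keep honest when it lives as data and gets checked automatically. The activities, roles, and `validate_raci` function below are hypothetical; the check encodes the common convention of exactly one Accountable and at least one Responsible per activity.

```python
# Assumption: illustrative activities and role assignments.
RACI = {
    "retention_review": {
        "Data Owner": "A",
        "Data Steward": "R",
        "Legal": "C",
        "IT Admin": "I",
    },
    "secure_deletion": {
        "Data Owner": "A",
        "IT Admin": "R",
        "Compliance Officer": "C",
    },
}


def validate_raci(matrix: dict) -> list:
    """Return a list of problems; an empty list means the matrix is sound."""
    problems = []
    for activity, roles in matrix.items():
        codes = list(roles.values())
        if codes.count("A") != 1:
            problems.append(f"{activity}: needs exactly one Accountable role")
        if "R" not in codes:
            problems.append(f"{activity}: needs at least one Responsible role")
    return problems


raci_problems = validate_raci(RACI)
```

Running the check whenever the matrix changes catches the "no one owns it" gap before it reaches the policy document.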

Step 5: Draft, review, and centralize the policy document

With the framework in place, it’s time to write the actual policy. But it shouldn’t just be a dense legal doc; make it usable. Keep it readable, scannable, and centralized so teams can refer to it easily.

Actionable steps:

- Structure the policy with clear sections: scope, definitions, lifecycle rules, responsibilities, escalation paths, and exceptions.

- Involve legal, data governance, and business teams in the review process to ensure completeness.

- Publish it in your internal documentation portal and promote it via onboarding, training, and internal comms.

Step 6: Pilot the policy and iterate based on feedback

Even the best-designed policies need real-world testing. Piloting allows you to uncover gaps, understand user friction, and validate your rules before organization-wide rollout.

Actionable steps:

- Choose a single department (e.g., HR or Finance) and apply the policy to its most-used data sets.

- Monitor key outcomes like compliance errors, archival volume, and deletion success rates over 30–60 days.

- Collect feedback from users and stewards, then refine rules and documentation based on what’s working (and what’s not).

Real-World Example: Saudi Government Agency’s NDMO Compliance Journey

A Saudi government entity needed to align its data operations with the National Data Management Office (NDMO) standards, a framework with 191 compliance specifications across 15 domains, including governance, classification, and personal data protection.

Solution: By implementing OvalEdge, the agency achieved compliance with 84 of those specifications in just 75 days, automating retention policies, access workflows, and data quality reporting. The platform’s unified catalog and metadata management helped classify and govern data across business units, meeting strict NDMO and Vision 2030 requirements.

Takeaway: This example shows how structured lifecycle policies, supported by automation and metadata governance, can transform compliance from a manual challenge into a scalable, sustainable governance practice.

Data lifecycle management best practices

A well-written policy sets the foundation, but it’s the day-to-day execution that determines whether your Data Lifecycle Management (DLM) program thrives or falls short. Here are five essential best practices that elevate DLM from theory to a scalable, secure, and compliant system.

1. Design with governance in mind

Your DLM strategy should be tightly woven into your organization’s broader data governance framework. That means assigning clear ownership of data domains, aligning lifecycle rules with business policies, and ensuring that every stage is backed by accountability.

This governance-driven approach reduces ambiguity, supports audit readiness, and ensures lifecycle decisions are aligned with regulatory and operational goals.

2. Automate wherever possible

Manual lifecycle management doesn’t scale, and it introduces risk. Automating processes like data tagging, archival, and secure deletion ensures your policies are applied consistently, even across massive or fast-moving datasets.

Automation also frees up your teams to focus on high-value data strategy work instead of chasing outdated files or missed deletion deadlines.

3. Focus on storage optimization

Not all data deserves a prime seat in your highest-tier storage. Effective DLM includes storage tiering strategies that move data between hot, warm, and cold environments based on how frequently it’s accessed and how valuable it remains.

This not only cuts costs significantly but also improves system performance and retrieval efficiency across departments.

4. Monitor for compliance continuously

Compliance isn’t a box to tick once a year; it requires continuous monitoring. Your DLM system should generate audit logs, track policy violations, and alert you when retention rules aren’t followed or deletion fails.

Integrating DLM with your security and compliance stack helps catch issues early and strengthens trust with regulators and stakeholders.

5. Track and report lifecycle metrics

You can’t improve what you don’t measure. Tracking key metrics like data ageing, unused file ratio, archival frequency, and time-to-deletion helps teams identify bottlenecks and optimize the lifecycle flow.

Reporting on these metrics also enables leadership to see the ROI of DLM efforts and spot trends across business units.
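The metrics named above are straightforward to compute once asset and deletion records carry dates. This sketch assumes hypothetical field names (`created`, `last_accessed`, `retention_expired_on`, `deleted_on`) and treats anything idle for more than 180 days as unused.

```python
from datetime import date
from statistics import mean


def lifecycle_metrics(assets, deletions, today, unused_after_days=180):
    """Compute average data age, unused ratio, and average time-to-deletion."""
    if not assets:
        raise ValueError("assets must not be empty")
    ages = [(today - a["created"]).days for a in assets]
    unused = sum(
        (today - a["last_accessed"]).days > unused_after_days for a in assets
    )
    # Time-to-deletion: days between retention expiry and actual removal.
    lags = [(d["deleted_on"] - d["retention_expired_on"]).days for d in deletions]
    return {
        "avg_age_days": mean(ages),
        "unused_ratio": unused / len(assets),
        "avg_time_to_deletion_days": mean(lags) if lags else None,
    }


today = date(2025, 1, 1)
assets = [
    {"created": date(2024, 1, 1), "last_accessed": date(2024, 12, 20)},
    {"created": date(2023, 1, 1), "last_accessed": date(2023, 6, 1)},
]
deletions = [
    {"retention_expired_on": date(2024, 11, 1), "deleted_on": date(2024, 11, 4)},
]
metrics = lifecycle_metrics(assets, deletions, today)
```

Tracked per business unit over time, a rising `unused_ratio` or time-to-deletion is an early sign that archival or deletion workflows are falling behind.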

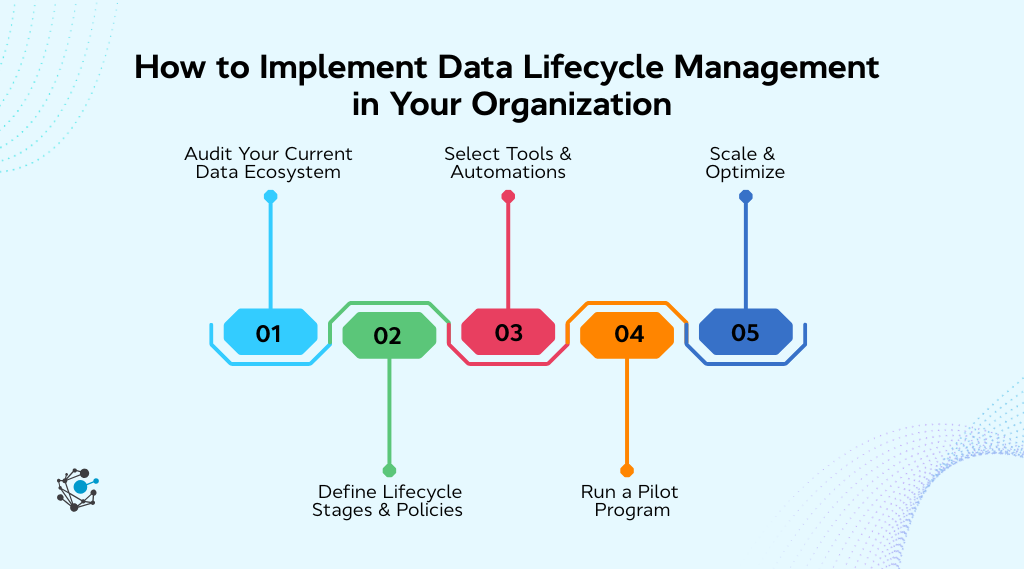

How to implement data lifecycle management in your organization

Implementing Data Lifecycle Management (DLM) requires collaboration across departments, the right tools, and a phased approach. Below is a practical, step-by-step framework to guide your organization from discovery to scalable execution.

Step 1 – Audit your current data ecosystem

Before you can manage the lifecycle of your data, you need to know what you have. A full audit uncovers where your data lives, how it’s stored, who has access, and whether it's governed or floating in silos. This step reveals compliance gaps, legacy data, and opportunities to consolidate.

Actionable steps:

- Conduct a system-wide inventory of all data sources (databases, cloud platforms, shared drives, third-party tools).

- Identify unstructured and shadow data, often hiding in emails, spreadsheets, or personal folders.

- Assess access rights, tagging consistency, and ownership for each data set.

Step 2 – Define lifecycle stages and policies

With your data mapped, it’s time to define how different data types flow through the lifecycle. This means assigning retention periods, access levels, archival triggers, and deletion rules that align with legal, operational, and business needs.

Actionable steps:

- Create lifecycle flow diagrams for each major data category (e.g., sales, HR, product).

- Define retention timelines based on compliance requirements and internal risk appetite.

- Map policies to departments or teams that handle the data, ensuring they’re practical and enforceable.

Step 3 – Select tools and automations

Manual lifecycle management is inefficient and error-prone. To scale, you’ll need tools that automate tagging, track metadata, enforce retention, and execute secure deletion. These tools should integrate with your existing data platforms.

Actionable steps:

- Evaluate lifecycle tooling such as Google Cloud Storage Object Lifecycle Management, Amazon S3 Lifecycle policies, or OvalEdge for orchestration.

- Choose tools that support policy-based automation, access control, and audit logging.

- Set up pilot automations, for example, auto-archiving inactive CRM data after 12 months.
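As one illustration of a pilot automation, the rule below is written in the shape that Amazon S3 lifecycle configurations use. The `build_lifecycle_rule` helper, the `crm/exports/` prefix, and the 7-year expiry are assumptions for this sketch. S3 lifecycle rules act on object age rather than last access, so this only approximates an "inactive for 12 months" trigger.

```python
import json


def build_lifecycle_rule(prefix: str, archive_after_days: int, delete_after_days: int) -> dict:
    """Build an S3-style lifecycle configuration for one key prefix."""
    if delete_after_days <= archive_after_days:
        raise ValueError("deletion must happen after archival")
    return {
        "Rules": [
            {
                "ID": f"archive-{prefix.strip('/').replace('/', '-')}",
                "Filter": {"Prefix": prefix},
                "Status": "Enabled",
                "Transitions": [
                    {"Days": archive_after_days, "StorageClass": "GLACIER"}
                ],
                "Expiration": {"Days": delete_after_days},
            }
        ]
    }


# Hypothetical prefix and periods: archive after 1 year, delete after 7 years.
config = build_lifecycle_rule("crm/exports/", 365, 7 * 365)
config_json = json.dumps(config, indent=2)

# With boto3 installed and credentials configured, a dict like this could be
# passed as LifecycleConfiguration to the S3 client's
# put_bucket_lifecycle_configuration call; that step is omitted here.
```

Building the configuration as plain data first lets you review and version it before anything touches a real bucket.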

Step 4 – Run a pilot program

Rolling out DLM across the entire organization from day one is risky. A controlled pilot helps validate your policy, test your tools, and gather feedback before scaling. Start small, measure impact, and iterate.

Actionable steps:

- Choose a low-risk department (e.g., internal ops or HR) to test DLM workflows.

- Monitor KPIs like compliance violations, storage savings, and user satisfaction during the pilot.

- Document lessons learned and update your policy or tooling configurations accordingly.

Step 5 – Scale and optimize

Once the pilot is successful, extend DLM across other teams and systems. But don’t treat it as a set-and-forget initiative. DLM must evolve with business growth, new regulations, and technology changes.

Actionable steps:

- Roll out DLM organization-wide with onboarding sessions and team-specific training.

- Set up a quarterly review cadence to revisit lifecycle rules, storage strategies, and compliance posture.

- Establish a cross-functional governance council to oversee DLM adoption and drive continuous improvement.

Common challenges & pitfalls in DLM (And how to avoid them)

Even with the right tools and a clear policy, Data Lifecycle Management (DLM) can fail if foundational issues aren’t addressed. Here are the most common pitfalls and how to stay ahead of them.

1. Overlooking metadata and data lineage

Without proper metadata tagging or lineage tracking, data quickly becomes untraceable. You lose visibility into where it came from, how it was used, and whether it's still valid. This makes classification, auditing, and deletion nearly impossible and increases the risk of non-compliance.

How to avoid it:

Implement automated metadata tagging at the point of data creation. Use a data catalog to track lineage and enable searchability across systems. Regularly audit metadata health to ensure consistency and completeness.
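Lineage tracking boils down to a graph of which assets feed which. The sketch below uses a hypothetical `LINEAGE` mapping and `upstream_sources` function to show how a catalog could answer "where did this come from?" before a dataset is reclassified or deleted.

```python
def upstream_sources(asset: str, lineage: dict) -> set:
    """Return every asset that directly or indirectly feeds the given asset."""
    seen = set()
    stack = list(lineage.get(asset, []))
    while stack:
        node = stack.pop()
        if node not in seen:
            seen.add(node)
            stack.extend(lineage.get(node, []))
    return seen


# Assumption: each key lists the assets it is derived from.
LINEAGE = {
    "sales_dashboard": ["sales_mart"],
    "sales_mart": ["orders_raw", "customers_raw"],
}

dashboard_sources = upstream_sources("sales_dashboard", LINEAGE)
```

If `customers_raw` contains PII, this traversal shows that the dashboard inherits that sensitivity even though it never reads the raw table directly.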

2. Retaining everything forever

Many organizations fall into the “data hoarding” trap, keeping everything just in case. But retaining data indefinitely inflates storage costs, clutters systems, and increases exposure to regulatory violations or security breaches.

How to avoid it:

Set clear, role-specific retention policies based on business value and legal requirements. Automate archival and secure deletion. Educate teams that more data isn't always better, especially when it's outdated or irrelevant.

3. Undefined roles and responsibilities

If no one owns the data lifecycle, no one enforces it. When roles are vague or missing, lifecycle policies remain on paper while old data piles up and risky sharing persists.

How to avoid it:

Assign clear responsibilities using a RACI matrix. Designate Data Owners and Stewards for each domain, and embed DLM into onboarding, governance councils, and compliance workflows.

4. Tool fragmentation across teams

When departments use disconnected tools for storage, backup, and governance, policies become inconsistent, and version conflicts or gaps emerge. This decentralization undermines DLM integrity and creates blind spots.

How to avoid it:

Standardize on a unified DLM platform or ensure integration between tools via APIs and metadata sync. Choose tools that support policy enforcement across environments (cloud, on-prem, hybrid). Centralize monitoring for consistent oversight.

Conclusion

In today’s data-saturated world, letting information pile up without purpose isn’t just inefficient, it’s dangerous. From rising storage costs to regulatory risk, unmanaged data is a silent threat to operational agility and long-term growth.

That’s why Data Lifecycle Management (DLM) is a strategic imperative. When done right, DLM doesn’t just help you stay compliant. It improves data quality, enforces accountability, and ensures every piece of data, whether active or archived, has a clear place, owner, and outcome.

Remember: DLM isn’t a one-time cleanup project. It’s an evolving practice that must grow with your data, your teams, and your business goals. Start small if you need to: audit your current data landscape, define roles, build a simple policy, and pilot it with one department.

Ready to take control of your data lifecycle?

OvalEdge helps organizations implement DLM that’s policy-driven, automated, and scalable from day one.

Book a demo today and explore how OvalEdge can support your data lifecycle strategy.

FAQs

1. What is the difference between data lifecycle and data pipeline?

A data lifecycle refers to the full lifespan of data, from creation and storage to archival and deletion. It focuses on governance, compliance, and long-term management. In contrast, a data pipeline is a technical process that moves and transforms data from one system to another (e.g., ETL/ELT workflows).

2. Who is responsible for managing the data lifecycle in a company?

Responsibility for data lifecycle management is shared across roles. Data Owners define how data should be handled. Data Stewards ensure data quality and compliance. IT and Security teams implement tools and controls. Data Governance Leads oversee policies and alignment across the organization.

3. How does DLM help with regulatory compliance?

DLM supports compliance by enforcing structured retention, archival, and deletion rules. It ensures sensitive data is stored securely, accessed by the right users, and disposed of on time, helping organizations meet regulations like GDPR, HIPAA, SOX, and CCPA through automation and audit readiness.

4. Can DLM be implemented without a data catalog?

Technically, yes, but it's significantly harder. A data catalog simplifies DLM by enabling data discovery, classification, metadata management, and lineage tracking. It provides visibility and context, making policy enforcement more efficient and less error-prone.

5. How is DLM different in cloud vs. on-premise environments?

In cloud environments, DLM is often easier due to built-in automation for backup, tiering, and deletion. Cloud providers offer lifecycle management APIs and tools. In on-premise environments, DLM may require more manual processes or third-party integrations to achieve the same level of control and flexibility.

6. How does Data Lifecycle Management (DLM) help meet government or regulatory frameworks like NDMO, GDPR, or HIPAA?

DLM enforces structured controls over data creation, classification, storage, and deletion. By defining clear retention policies, access permissions, and audit trails, organizations can align with frameworks such as NDMO (Saudi Arabia), GDPR (Europe), or HIPAA (US). It ensures that data is only kept for as long as needed, securely stored, and deleted according to compliance standards.
