How to Measure AI Readiness: Complete Assessment Guide 2026

📌 QUICK ANSWER

An AI readiness assessment is a systematic evaluation of an organization's preparedness to successfully adopt and implement artificial intelligence technologies.

It measures capabilities across strategy, data, infrastructure, people, and governance to identify strengths, gaps, and priorities for AI initiatives. Organizations typically score across 5 maturity levels, from Unprepared to Embedded, with data readiness being the #1 success factor.

Artificial intelligence is no longer a future technology - it's transforming how businesses operate today. According to McKinsey, 19% of B2B decision-makers are already implementing AI use cases, with 23% in development stages.

However, 80% of AI projects fail to deliver intended outcomes, and only 30% of AI pilots progress beyond the pilot stage.

The difference between success and failure? Proper preparation.

An AI readiness assessment helps organizations understand where they stand today and what they need to succeed with AI tomorrow. This guide explains how to measure your organization's AI readiness, interpret maturity levels, and take action to strengthen your AI foundation.

Why Assess AI Readiness

Before investing significant resources in AI initiatives, organizations need to understand their current capabilities and gaps. An AI readiness assessment provides this critical baseline.

Key reasons to assess AI readiness:

Avoid costly mistakes: Organizations that rush into AI without proper foundations waste millions on failed pilots. 48% of M&A professionals now use AI in due diligence (up from 20% in 2018), but those without strong data governance face compliance risks and inaccurate results.

Identify critical gaps: You might have strong technical infrastructure but weak data quality, or excellent data but insufficient AI talent. Assessments reveal which areas need attention before launching AI initiatives.

Prioritize investments: With limited budgets, knowing whether to invest in data governance, cloud infrastructure, or talent development first can be the difference between success and failure. 60% of AI success depends on data readiness - addressing data foundations before infrastructure prevents wasted spending.

Build stakeholder confidence: Executive sponsors and boards want assurance that AI investments will deliver ROI. A structured readiness assessment demonstrates due diligence and realistic planning.

Benchmark against peers: Understanding where you stand compared to industry standards helps set realistic expectations. Currently, only 23% of organizations have a formal AI strategy - if you have one, you're already ahead.

Real-world example: A mid-size investment firm wanted to implement AI-powered fraud detection. Their readiness assessment revealed scattered data across 12 systems with no unified customer view, on-premises servers insufficient for ML workloads, no data scientists on staff, and no policies for AI model monitoring.

Rather than rushing to build models, they spent 6 months strengthening foundations. When they finally deployed AI, their time-to-value was 70% faster than peers who skipped readiness assessment.

Key Pillars of AI Readiness

A comprehensive AI readiness assessment examines six interconnected pillars. Most organizations assess across 4-6 of these areas, depending on their maturity level and industry.

1. Leadership & Strategy

What this measures: Executive commitment, strategic alignment, and organizational readiness for AI transformation.

Key assessment questions:

- Is there executive sponsorship for AI initiatives?

- Does an AI strategy exist and align with business goals?

- Is a budget allocated for AI programs?

- Are success metrics and KPIs defined?

- Is there a clear vision for how AI will transform the business?

Common gaps: Many organizations have an interest in AI but lack a formal strategy. Leaders may expect immediate results without understanding the 12-24 month journey to production AI systems.

Success indicator: Executive sponsor assigned, AI strategy document approved, and budget allocated with a 3-year commitment.

2. Data Foundations

What this measures: The quality, accessibility, and governance of data needed to train and operate AI models.

This is the #1 barrier to AI success. According to industry research, 67% of organizations cite data quality issues as their top AI readiness challenge.

Key assessment questions:

- Is data cataloged and easily discoverable?

- What is your data quality score? (Target: 80%+ accuracy, completeness, consistency)

- Are data governance policies enforced?

- Is sensitive data (PII, PHI) identified and protected?

- Can teams access the data they need in real-time?

- Is data lineage documented?

Common gaps:

- Siloed data across departments and systems

- Poor data quality (duplicate records, missing values, inconsistent formats)

- No data catalog or governance framework

- Manual data preparation consumes 60-80% of data science time

Success indicator: Data governance platform implemented (like OvalEdge), data quality scores above 85%, and real-time access to critical datasets.

Real-world example: A national retail chain scored poorly on data readiness - 65% duplicate customer records, inventory updated only daily (not real-time), and no data catalog, making datasets undiscoverable.

After investing in master data management, real-time pipelines, and implementing the OvalEdge catalog, their data readiness score jumped from 40% to 85% in 6 months. They now successfully use AI for demand forecasting and personalized recommendations.

3. Technology Infrastructure

What this measures: The technical capabilities to develop, deploy, and scale AI solutions.

Key assessment questions:

- Is the cloud platform selected and configured (AWS, Azure, GCP)?

- Are scalable compute resources available for model training?

- Does an ML development environment exist?

- Is the model deployment pipeline automated?

- Are monitoring, logging, and alerting configured?

- Is network bandwidth sufficient for large data transfers?

Cloud vs. on-premises: 85% of enterprises use multi-cloud strategies as of 2025. Cloud platforms provide the elasticity and AI-specific services (SageMaker, Azure ML, Vertex AI) that make AI implementation faster and more cost-effective.

Common gaps: Legacy on-premises infrastructure that can't scale for ML workloads, lack of GPU/TPU resources for deep learning, and no MLOps pipeline for model lifecycle management.

Success indicator: Cloud-native ML platform deployed, automated CI/CD for models, production-ready infrastructure with 99.9%+ uptime.

4. Organizational Capability & Culture

What this measures: The people, skills, and cultural readiness for AI adoption.

Key assessment questions:

- Are data scientists and ML engineers hired or contracted?

- Are business analysts trained on AI concepts?

- Is there executive-level AI literacy?

- Do employees understand how AI will augment (not replace) their work?

- Are cross-functional AI teams established?

- Is there a budget for ongoing AI training?

The talent gap is significant: 52% of organizations lack AI talent and skills, making this a major readiness barrier.

Common gaps:

- No data science team (in-house or contracted)

- Business stakeholders unfamiliar with AI capabilities and limitations

- Employee resistance due to job displacement fears

- IT teams are unprepared for AI infrastructure demands

Success indicator: Hybrid team of data scientists (in-house or contracted), AI literacy program for business users, and change management plan addressing workforce concerns.

Research finding: Employees use AI tools 3x more than leaders expect - often through shadow IT (ChatGPT, personal accounts). Proper governance and training channels this energy productively.

5. AI Governance & Ethics

What this measures: Policies, processes, and controls for responsible AI development and deployment.

This pillar has become critical in 2025 with the EU AI Act, increasing regulatory scrutiny, and growing concerns about AI bias, privacy, and accountability.

Key assessment questions:

- Do AI ethics principles and policies exist?

- Are bias detection and mitigation processes defined?

- Is there a model risk management framework?

- Are regulatory compliance requirements mapped (EU AI Act, GDPR, HIPAA)?

- Is AI decision-making explainable and transparent?

- Are human oversight mechanisms in place?

GenAI adds new considerations:

- Content safety filters to prevent harmful outputs

- IP and copyright risk management

- Hallucination detection and mitigation

- Prompt injection attack prevention

Common gaps: Most organizations have general data privacy policies but lack AI-specific governance. 91% of organizations need better AI governance and transparency, according to recent research.

Success indicator: AI governance committee established, responsible AI policy published, model cards documenting AI systems, and regular bias audits conducted.

6. Use Case Identification & Value Realization

What this measures: The organization's ability to identify, prioritize, and execute high-value AI use cases.

Key assessment questions:

- Are AI use cases identified and prioritized by business impact?

- Is the ROI measurement framework defined?

- Are pilots designed with clear success criteria?

- Is there a pathway from pilot to production?

- Are stakeholders engaged and aligned?

- Is there a scaling strategy for successful AI applications?

ROI is critical: 45% of organizations struggle with unclear ROI measurement for AI initiatives. Without defined metrics, proving value becomes impossible.

Common gaps: Starting with complex, low-value use cases instead of quick wins. Pilots that run indefinitely without a path to production. No systematic approach to use case identification.

Success indicator: Portfolio of 5-10 prioritized use cases, 1-2 pilots in production with measured ROI, documented playbook for scaling successful pilots.

Generative AI Readiness Considerations

GenAI adoption grew 300% in 2024, with 75% of enterprises piloting GenAI applications. However, generative AI requires additional readiness factors beyond traditional AI:

Prompt Engineering Capability: Do teams know how to write effective prompts? This new skill is critical for GenAI success. Organizations need training programs covering prompt design, few-shot learning, and chain-of-thought reasoning.

LLM Selection and Management: Teams need to understand the different models (GPT-4, Claude, Llama, Gemini) and their tradeoffs. Key decisions include proprietary vs. open-source, cloud-hosted vs. on-premises, and fine-tuning vs. prompt engineering.

Responsible AI Guardrails: GenAI outputs can include biased, harmful, or inaccurate content. Organizations need:

- Content safety filters

- Factual grounding mechanisms

- Citation and source verification

- Output review processes

Data Privacy for LLMs: Ensuring training data and prompts don't leak sensitive information. Many organizations use private LLM instances (Azure OpenAI Service, AWS Bedrock) rather than public APIs to maintain data control.

IP and Copyright Risks: Generated content may inadvertently copy copyrighted material. Organizations need clear policies on reviewing and validating AI-generated content before publication.

Cost Management: Token-based pricing for LLMs creates new cost challenges. Organizations need monitoring for API usage, prompt optimization strategies, and cost allocation by team or project.
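
As a rough illustration of cost allocation by team, the sketch below sums token spend from a usage log and flags teams that exceed a budget. The model names, per-1K-token prices, log format, and budgets are all hypothetical; real prices vary by provider and model, and should be taken from your vendor's current price list.

```python
from collections import defaultdict

# Hypothetical per-1K-token prices in USD (assumption, not real vendor pricing).
PRICE_PER_1K = {
    "model-a": {"input": 0.005, "output": 0.015},
    "model-b": {"input": 0.0005, "output": 0.0015},
}

def call_cost(model, input_tokens, output_tokens):
    """Cost of one API call under the assumed price table."""
    price = PRICE_PER_1K[model]
    return (input_tokens / 1000) * price["input"] + (output_tokens / 1000) * price["output"]

def cost_by_team(usage_log):
    """Sum call costs per team from (team, model, input_tokens, output_tokens) records."""
    totals = defaultdict(float)
    for team, model, input_tokens, output_tokens in usage_log:
        totals[team] += call_cost(model, input_tokens, output_tokens)
    return dict(totals)

def over_budget(totals, budgets):
    """Return teams whose spend exceeds their budget; teams without a budget are skipped."""
    return sorted(team for team, spent in totals.items()
                  if team in budgets and spent > budgets[team])

# Illustrative usage log (made-up numbers).
usage_log = [
    ("support", "model-a", 200_000, 100_000),
    ("support", "model-b", 1_000_000, 400_000),
    ("marketing", "model-a", 50_000, 20_000),
]
totals = cost_by_team(usage_log)
alerts = over_budget(totals, {"support": 3.00, "marketing": 1.00})
print(totals, alerts)
```

In practice the usage log would come from the provider's billing export or an API gateway, and the alert would feed whatever budget-alerting tool the organization already uses.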

Hallucination Mitigation: GenAI models sometimes "make things up" with confident-sounding but false information. Strategies include retrieval-augmented generation (RAG), confidence scoring, and human-in-the-loop review.

GenAI readiness checklist:

- [ ] Prompt engineering training program established

- [ ] LLM platform selected with data privacy controls

- [ ] Responsible AI guardrails implemented

- [ ] Content review process defined

- [ ] Cost monitoring and budget alerts configured

- [ ] High-value GenAI use cases identified

Cost savings potential: Organizations report $50K-$500K annually per GenAI use case through automation of content creation, customer support, and knowledge work.

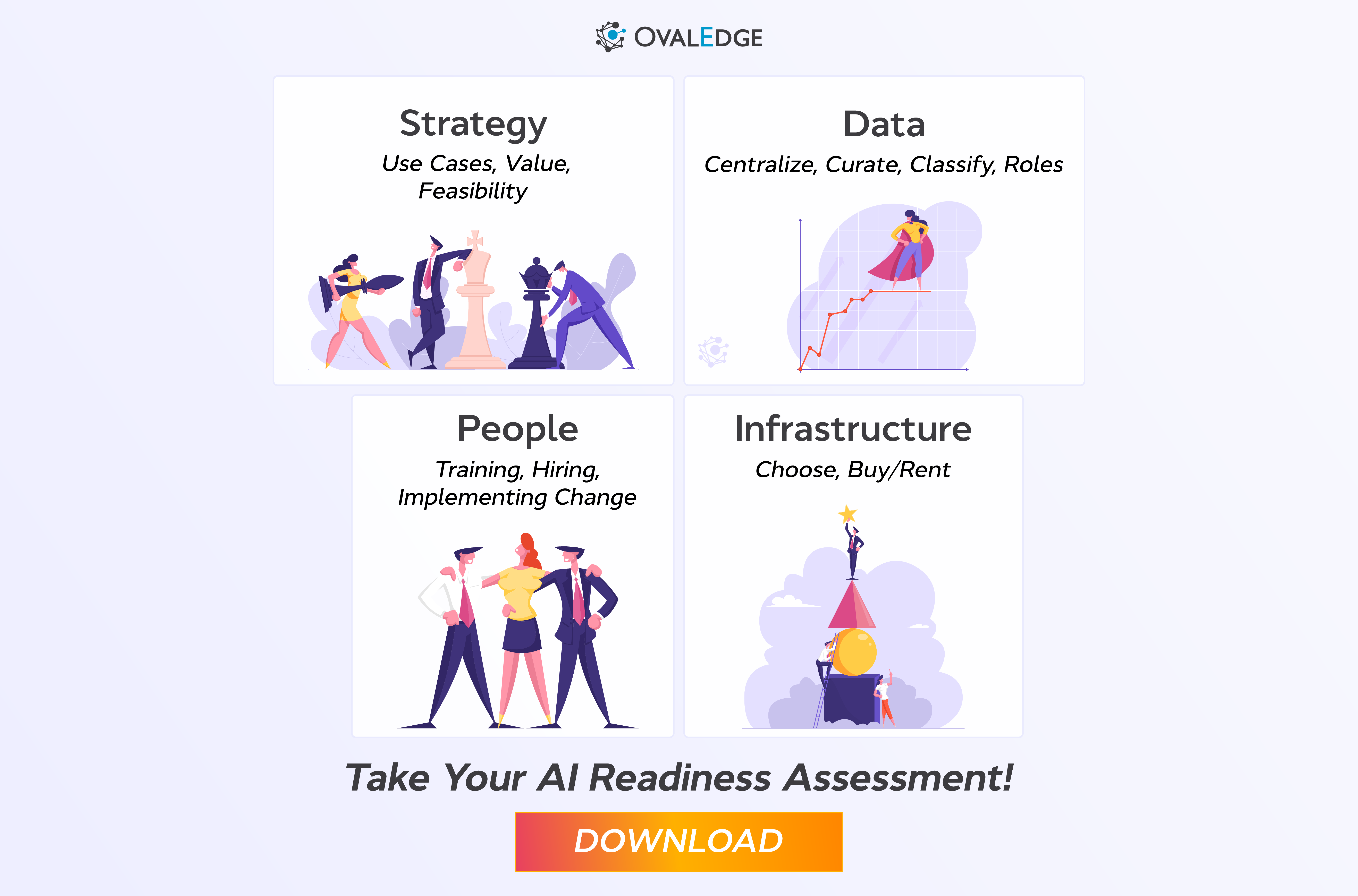

Common Areas of Assessment

Many iterations of AI readiness assessments are available, but they all ultimately assess the organization’s AI strategy, workforce preparedness, data and governance maturity, and tech infrastructure capacity. Each area’s questions add additional nuance. Here is how OvalEdge’s AI Readiness Assessment is organized.

Strategy

- AI Use Case: When adopting AI technologies, organizations must keep in mind the goals and objectives that drive their AI ambitions and fully understand why AI is an important addition to their business. Generally speaking, what is the use case for introducing AI into the organization?

People

- Train Existing IT Workforce: A strong team is necessary to execute an AI roadmap, and the need for a data-literate workforce is higher than ever. Utilizing AI models requires a solid understanding of AI’s technical, ethical, and regulatory aspects, with retraining and upskilling opportunities.

- Hire AI Specialists: Developing and implementing custom AI solutions takes experts. Hiring AI specialists might be necessary to create, implement, and maintain AI in the organization.

- Implement Change Management: Don’t forget change management! Prepare a company culture that encourages and fosters AI innovation and experimentation amongst employees. The business must be fully engaged and know how to interpret, analyze, and use data effectively.

Data

- Centralize Data: Centralizing data in a data catalog or within a data lake/warehouse ensures the most important data sources critical to the organization are identified and documented.

- Define Roles and Responsibilities: Data governance roles and responsibilities play an important part in managing the organization’s data, as these individuals are the experts in their respective domains. Clear ownership of data assets paves the path to curated metadata.

- Curate Metadata: Curating metadata provides context, consistency, and understanding of data assets. Creating correct descriptions, definitions, and metrics provides a high-quality base for training AI models.

- Ensure High-Quality Data: Poor-quality data leads to poor-performing AI. A well-rounded data quality program that covers the four aspects of data quality implementation increases trust in AI models, because people can trust the underlying data.

- Classify Data: Establish ethical AI frameworks and ensure their integrity by classifying data. Additional benefits are improved searchability and reduced privacy risks.

Infrastructure & Tech

- Find the Right Technology: You must have the right technology and platforms to support your AI ambitions. Consider the hardware, software, and scalability needed for company-wide AI initiatives.

- Buy/Rent Infrastructure: Creating tech infrastructure to accommodate the demands of AI can be costly and time-consuming. Buying or renting infrastructure opens opportunities for timely data delivery at scale.

5 Levels of AI Readiness

Most AI readiness assessments assign a score out of five, similar to measuring data governance maturity. OvalEdge’s AI Readiness Assessment uses five levels to score each area of readiness mentioned previously.

Organizations typically progress through five distinct maturity levels. Understanding your current level helps set realistic timelines and priorities.
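
One way to turn per-area scores into an overall level can be sketched as follows. The aggregation rule (mean score rounded down) and the example scores are assumptions for illustration only; this guide does not prescribe a rule, and a real assessment may weight areas differently.

```python
from statistics import mean

LEVELS = {1: "Unprepared", 2: "Planning", 3: "Developing", 4: "Implemented", 5: "Embedded"}

def overall_level(pillar_scores):
    """Map per-pillar scores (1-5) to an overall maturity level.

    Assumption: the overall level is the mean score rounded down.
    """
    for pillar, score in pillar_scores.items():
        if not 1 <= score <= 5:
            raise ValueError(f"{pillar}: score must be between 1 and 5, got {score}")
    level = int(mean(pillar_scores.values()))
    return level, LEVELS[level]

def weakest_pillars(pillar_scores):
    """Return the pillar(s) with the lowest score, i.e. the first gaps to address."""
    lowest = min(pillar_scores.values())
    return [pillar for pillar, score in pillar_scores.items() if score == lowest]

# Illustrative scores for a hypothetical organization.
scores = {
    "Leadership & Strategy": 3,
    "Data Foundations": 2,
    "Technology Infrastructure": 4,
    "Organizational Capability & Culture": 3,
    "AI Governance & Ethics": 2,
    "Use Case Identification & Value Realization": 3,
}
level, name = overall_level(scores)
gaps = weakest_pillars(scores)
print(level, name, gaps)
```

Rounding down is a deliberately conservative choice: a strong infrastructure score should not mask weak data or governance scores. Reporting the weakest pillars alongside the overall level keeps those gaps visible.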

Level 1: Unprepared

Characteristics:

- No AI strategy or only ad-hoc discussions

- Reactive to AI trends without a clear plan

- Data scattered across systems with poor quality

- Legacy infrastructure is not cloud-ready

- No AI talent on staff

- Leadership is interested but uncommitted

Typical organizations: Traditional companies just beginning to explore AI, often prompted by competitive pressure.

Timeline to next level: 6-12 months of foundational work needed.

Priority actions:

- Educate leadership on AI capabilities and requirements

- Conduct a comprehensive data inventory

- Assess current infrastructure gaps

- Begin data governance foundation

Level 2: Planning

Characteristics:

- AI strategy document exists and aligns with business goals

- Executive sponsor assigned with budget commitment

- Data identified but not yet cataloged or governed

- Infrastructure upgrade plans defined

- Beginning to hire or contract AI expertise

- Pilot projects identified but not started

Typical organizations: Companies that have secured leadership buy-in and are building foundations.

Timeline to next level: 4-8 months to launch first pilots.

Priority actions:

- Implement data governance platform (OvalEdge)

- Start cloud migration or enhancement

- Launch an AI literacy training program

- Begin hiring data science talent

- Define the first pilot project with a clear ROI

Level 3: Developing

Characteristics:

- 1-3 AI pilot projects running

- Data cataloged with improving quality scores (70-85%)

- Cloud adoption underway with ML-ready infrastructure

- Small data science team (2-5 people) in place

- Learning from pilots and refining the approach

- AI governance policies drafted

Typical organizations: Companies actively experimenting with AI and measuring results.

Timeline to next level: 6-12 months to move pilots to production and scale.

Priority actions:

- Strengthen data quality to 85%+ across critical datasets

- Establish a model deployment and monitoring pipeline

- Document lessons learned from pilots

- Expand AI talent through hiring or partnerships

- Build a business case for scaling successful pilots

Real-world example: A 200-bed hospital assessed itself at Level 2 (Planning). To reach Level 3 (Developing), they hired their first data engineer, implemented OvalEdge for data governance, piloted AI for appointment no-show prediction (achieving 80% accuracy), and established an AI governance committee.

Within 8 months, they reached Level 3 with 3 AI pilots running and a clear roadmap for Level 4.

Level 4: Implemented

Characteristics:

- 5+ AI solutions in production delivering measurable ROI

- Well-governed, accessible data with quality scores 85%+

- Modern, scalable cloud infrastructure optimized for AI

- Established data science team (6-15 people)

- AI integrated into business processes

- Proven track record of scaling from pilot to production

- Model performance monitoring and retraining are automated

Typical organizations: AI-mature companies with successful AI programs generating business value.

Timeline to next level: 12-24 months of continuous improvement and expansion.

ROI demonstration: Organizations with strong AI readiness achieve 2-3x faster time-to-value and see 15-25% productivity gains in the first year of AI implementation.

Priority actions:

- Expand AI use cases to new departments and functions

- Develop an AI center of excellence

- Share best practices across the organization

- Invest in advanced AI capabilities (deep learning, NLP, computer vision)

- Build AI-driven products or services

Level 5: Embedded

Characteristics:

- AI drives core business decision-making

- Real-time, high-quality data accessible across the organization

- AI-optimized infrastructure platform

- Data science team integrated across business units

- Continuous AI innovation and experimentation

- AI embedded in company culture and strategy

- Industry leader in AI adoption

Typical organizations: Digital-native companies and AI leaders (Netflix, Amazon, Tesla, Spotify).

Examples of Level 5 in practice:

- Netflix: 80% of content watched is driven by AI recommendations

- Tesla: 1.5 billion miles per month of vehicle data powering autonomous driving improvements

- Spotify: Continuous AI experimentation culture producing innovations like Discover Weekly

Ongoing evolution: Level 5 is not an end state but continuous improvement. These organizations constantly push AI boundaries and create a competitive advantage through AI.

Quick Maturity Level Assessment

Where is your organization? Select the statement that best describes you:

- [ ] "We're just starting to think about AI" → Level 1: Unprepared

- [ ] "We have a plan but haven't started execution" → Level 2: Planning

- [ ] "We're running our first 1-3 AI pilots" → Level 3: Developing

- [ ] "We have 5+ AI solutions in production measuring ROI" → Level 4: Implemented

- [ ] "AI is integral to how we operate and compete" → Level 5: Embedded

Your maturity level determines your next priorities. See detailed recommendations for each level above.

Maturity Level Comparison

| Level | Characteristics | Data State | Infrastructure | AI Capability | Timeline to Next |
| --- | --- | --- | --- | --- | --- |
| 1: Unprepared | No AI strategy, reactive | Siloed, poor quality | Legacy systems | No pilots | 6-12 months |
| 2: Planning | Strategy defined, resources secured | Identified, not governed | Planning upgrades | Research phase | 4-8 months |
| 3: Developing | Pilots in progress, learning | Cataloged, quality improving | Cloud adoption started | 1-3 pilots running | 6-12 months |
| 4: Implemented | AI in production, ROI measured | Well-governed, accessible | Modern, scalable | 5+ solutions live | 12-24 months |
| 5: Embedded | AI drives decisions | Real-time, high quality | AI-optimized | Continuous innovation | Ongoing |

Common AI Readiness Challenges (and Solutions)

Based on assessments across hundreds of organizations, these are the most frequent barriers to AI success:

Challenge 1: Poor Data Quality

Problem: Data is incomplete, inconsistent, inaccurate, or duplicated. 67% cite data quality as their top barrier.

Impact: "Garbage in, garbage out" - AI models trained on poor data produce poor results. Data scientists spend 60-80% of their time cleaning data instead of building models.

Solutions:

- Implement data quality frameworks with automated validation

- Use data governance platforms like OvalEdge for quality monitoring

- Establish data stewardship with quality accountability

- Create data quality scorecards with targets (85%+ accuracy, completeness)

Success metric: Reducing data preparation time from 70% to 30% of data science effort.
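
A data quality scorecard of the kind described above can be sketched in a few lines. The field names, the date format, and the sample records are hypothetical. Only completeness, uniqueness, and format consistency are computed here, because accuracy requires a trusted reference source to compare against.

```python
import re

TARGET = 0.85  # target from the text: 85%+ on each dimension

def completeness(records, required_fields):
    """Share of required field values that are present and non-empty."""
    total = len(records) * len(required_fields)
    filled = sum(1 for r in records for f in required_fields if r.get(f) not in (None, ""))
    return filled / total if total else 0.0

def uniqueness(records, key_field):
    """Share of records that carry a distinct key (1.0 means no duplicates)."""
    keys = [r.get(key_field) for r in records]
    return len(set(keys)) / len(keys) if keys else 0.0

def consistency(records, field, pattern):
    """Share of non-empty values in `field` that match the expected format."""
    values = [r[field] for r in records if r.get(field)]
    matches = sum(1 for v in values if re.fullmatch(pattern, v))
    return matches / len(values) if values else 0.0

def scorecard(records):
    """Return {dimension: (rounded score, meets target?)} for a hypothetical customer table."""
    scores = {
        "completeness": completeness(records, ["customer_id", "email", "signup_date"]),
        "uniqueness": uniqueness(records, "customer_id"),
        "consistency": consistency(records, "signup_date", r"\d{4}-\d{2}-\d{2}"),
    }
    return {name: (round(score, 2), score >= TARGET) for name, score in scores.items()}

# Made-up sample: one missing email, one duplicate ID, one non-ISO date.
customers = [
    {"customer_id": "C1", "email": "a@example.com", "signup_date": "2024-01-15"},
    {"customer_id": "C2", "email": "", "signup_date": "2024-02-01"},
    {"customer_id": "C2", "email": "b@example.com", "signup_date": "01/03/2024"},
    {"customer_id": "C3", "email": "c@example.com", "signup_date": "2024-03-10"},
]
report = scorecard(customers)
print(report)
```

On this sample, completeness clears the 85% target while uniqueness and consistency do not, which is the kind of per-dimension view a steward needs to decide where to act first.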

Challenge 2: Lack of Executive Sponsorship

Problem: AI is treated as an IT experiment rather than a strategic business initiative. Only 23% of organizations have a formal AI strategy with executive buy-in.

Impact: Insufficient budget, resources, and cross-functional alignment. AI pilots that never scale.

Solutions:

- Educate leadership on AI ROI with industry benchmarks

- Start with quick wins demonstrating tangible value

- Develop business cases showing cost savings and revenue opportunities

- Assign an executive sponsor with P&L responsibility

Success metric: Secured 3-year budget commitment and dedicated AI team.

Challenge 3: Siloed Data Across Systems

Problem: Data locked in departmental databases and legacy systems. No unified view.

Impact: AI models lack a complete picture, leading to incomplete insights. Projects are delayed months waiting for data integration.

Solutions:

- Implement data integration strategies

- Deploy unified data platforms (data lakes, lakehouses)

- Break down organizational barriers through governance committees

- Create incentives for data sharing

Success metric: 90%+ of critical data accessible through a single platform.

Challenge 4: Insufficient AI Talent

Problem: Can't hire or afford data scientists and ML engineers. 52% lack the necessary AI skills.

Impact: Delayed initiatives, heavy reliance on expensive consultants, and inability to maintain AI systems.

Solutions:

- Upskill existing analysts through training programs

- Partner with consultancies for initial projects and knowledge transfer

- Use low-code/no-code AI platforms (Azure ML, SageMaker AutoML)

- Hire 1-2 senior people first and grow the team around them, rather than hiring a large team at once

Success metric: Hybrid team of 2-3 data scientists + upskilled analysts delivering results.

Challenge 5: Unclear ROI Measurement

Problem: Don't know how to measure AI success. 45% struggle with ROI measurement.

Impact: Can't justify continued investment or scaling successful pilots.

Solutions:

- Define success metrics before starting projects (time saved, cost reduced, revenue increased)

- Track both financial and operational KPIs

- Calculate the total cost of ownership (development + operations)

- Compare against baseline (pre-AI performance)

Success metric: ROI documented for 100% of AI initiatives with positive payback within 12-18 months.

Measuring AI ROI and Success Metrics

Beyond readiness scores, organizations need clear metrics to measure AI investment success. Proper readiness assessment reduces implementation costs by 30-40% by avoiding false starts and rework.

Financial Metrics

Cost savings from automation:

- Hours saved × hourly rate

- Example: Customer service chatbot saving 10,000 agent hours per year × $25/hour agent cost = $250K annual savings

Revenue increase:

- AI-driven upsell/cross-sell improvements

- Better pricing optimization

- Reduced churn

Error reduction costs:

- Fraud prevented

- Quality defects caught

- Compliance violations avoided

Operational Metrics

Process efficiency:

- Process time reduction (%)

- Volume handled (transactions, cases, tickets)

- Accuracy improvement (%)

Example: Document processing accuracy improves from 85% to 97% while processing time drops from 2 minutes to 30 seconds per document.

AI-Specific Metrics

Model performance:

- Accuracy, precision, recall, F1 score

- Inference latency (milliseconds)

- Data drift detection rates

- Model retraining frequency

Infrastructure:

- Training time for models

- Deployment frequency

- System uptime (target: 99.9%+)

ROI Calculation Example

Customer Service AI Chatbot:

- Implementation cost: $200K

- Annual operating cost: $50K

- Savings: 10,000 agent hours × $25/hour = $250K annually

- Additional benefit: 15% customer satisfaction improvement

- ROI: ($250K - $50K) / $200K = 100% return in Year 1

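- Payback period: 9.6 months measured against gross savings ($200K / $250K per year), or 12 months once the $50K annual operating cost is deducted ($200K / $200K per year)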
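
The chatbot arithmetic above can be reproduced with a short sketch. The function names are illustrative, and the ROI definition follows the example (net annual savings divided by implementation cost) rather than any single accounting standard. Note that the 9.6-month payback corresponds to gross savings; net of operating cost, the payback is 12 months.

```python
def first_year_roi(implementation_cost, annual_operating_cost, annual_savings):
    """ROI as used in the example: net annual savings over implementation cost."""
    return (annual_savings - annual_operating_cost) / implementation_cost

def payback_months(implementation_cost, annual_savings, annual_operating_cost=0.0):
    """Months needed for (net) savings to recover the implementation cost."""
    net_annual = annual_savings - annual_operating_cost
    if net_annual <= 0:
        raise ValueError("savings never recover the implementation cost")
    return 12 * implementation_cost / net_annual

# Figures from the chatbot example.
savings = 10_000 * 25  # agent hours saved per year x hourly rate = $250K
roi = first_year_roi(200_000, 50_000, savings)
payback_gross = payback_months(200_000, savings)        # ignores operating cost
payback_net = payback_months(200_000, savings, 50_000)  # deducts operating cost

print(f"ROI: {roi:.0%}, payback: {payback_gross:.1f} months gross, {payback_net:.1f} months net")
```

Stating which savings figure the payback uses avoids the common situation where a business case and a later audit report different payback periods for the same project.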

Success metrics by use case:

- Fraud detection: False positive rate < 5%, fraud caught > 90%

- Demand forecasting: MAPE < 10% (Mean Absolute Percentage Error)

- Customer churn prediction: Precision > 70%, caught churners > 60%

- Document processing: Accuracy > 95%, time < 30 seconds per document
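
To make these thresholds checkable, the sketch below computes the underlying metrics from labeled outcomes. It interprets "fraud caught" as recall and "false positive rate" as FP / (FP + TN), which are the usual definitions but still an assumption about what the targets mean. The sample data is made up.

```python
def confusion_counts(y_true, y_pred):
    """Count true/false positives and negatives for binary labels (1 = positive)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    return tp, fp, fn, tn

def classification_metrics(y_true, y_pred):
    """Precision, recall, and false positive rate; 0.0 when a denominator is zero."""
    tp, fp, fn, tn = confusion_counts(y_true, y_pred)
    return {
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
        "false_positive_rate": fp / (fp + tn) if fp + tn else 0.0,
    }

def mape(actual, forecast):
    """Mean Absolute Percentage Error in percent; actual values must be non-zero."""
    errors = [abs(a - f) / abs(a) for a, f in zip(actual, forecast)]
    return 100 * sum(errors) / len(errors)

# Made-up fraud sample: 20 fraud cases (19 caught), 80 legitimate (2 wrongly flagged).
y_true = [1] * 20 + [0] * 80
y_pred = [1] * 19 + [0] * 1 + [1] * 2 + [0] * 78
fraud = classification_metrics(y_true, y_pred)
fraud_ok = fraud["false_positive_rate"] < 0.05 and fraud["recall"] > 0.90

# Made-up demand forecast sample.
forecast_error = mape([100, 200, 400], [110, 190, 380])
forecast_ok = forecast_error < 10

print(fraud, fraud_ok, round(forecast_error, 2), forecast_ok)
```

The same `classification_metrics` helper covers the churn targets (precision > 70%, recall > 60%); only the thresholds change.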

Organizations should define these metrics during readiness assessment, before implementation begins. This creates clear success criteria and enables objective evaluation of AI initiatives.

AI Readiness Tools and Platforms

Assessment Tools

Free interactive assessments:

- Microsoft AI Readiness Assessment (self-service, online)

- AWS AI Readiness Evaluation

- EDUCAUSE Higher Education AI Readiness

Consultancy frameworks:

- Quinnox AI Readiness Checklist (downloadable)

- AriseGTM Department-by-Department Assessment

- Custom assessments with maturity models

Data Governance Platforms (Foundation for AI)

Strong data governance is foundational to AI readiness. Essential capabilities:

OvalEdge - Data catalog, governance, lineage, and quality management

- Automated data discovery and cataloging

- Data quality monitoring and scorecards

- Lineage tracking for AI transparency

- Integration with ML platforms

Alternatives:

- Collibra - Enterprise data governance

- Alation - Data catalog with AI integration

- Informatica - Data quality and master data management

AI Development Platforms

Cloud ML platforms:

- Azure Machine Learning - Microsoft's comprehensive ML platform

- AWS SageMaker - End-to-end ML development and deployment

- Google Vertex AI - Unified ML platform

- Databricks - Lakehouse platform with MLflow

Generative AI Platforms

- OpenAI (GPT-4, GPT-4o) - Leading LLM provider

- Anthropic Claude - Advanced reasoning and safety

- Google Gemini - Multimodal AI capabilities

- AWS Bedrock - Multiple foundation models in one platform

- Azure OpenAI Service - Enterprise GPT with data privacy

Platform selection: Align with your maturity level. Start with free assessments, invest in data governance early (critical for all levels), and scale AI development platforms as readiness improves from Level 2 to Level 4.

Interpreting Your Results

After assessing across the six pillars and determining your maturity level, create an action plan based on your scores:

If You're at Level 1-2 (Unprepared to Planning):

Priority 1: Strengthen Data Foundations. Data readiness is the #1 predictor of AI success. Before investing in infrastructure or hiring data scientists:

- Implement data catalog (OvalEdge recommended)

- Establish basic data governance policies

- Begin data quality improvement program

- Document where your critical data lives

Priority 2: Secure Executive Sponsorship. Without leadership commitment, AI initiatives stall. Build the business case showing:

- Industry benchmarks (competitors' AI adoption)

- Specific use cases with ROI projections

- Resource requirements (budget, people, timeline)

- Risk of inaction (competitive disadvantage)

Priority 3: Start Small. Don't attempt enterprise-wide AI transformation. Identify 1-2 high-value, achievable use cases:

- Clear business problem (cost reduction, revenue growth)

- Available data (or easily obtained)

- Measurable success criteria

- 3-6 month timeline to results

If You're at Level 3 (Developing):

Priority 1: Move Pilots to Production. Many organizations get stuck in perpetual piloting. To scale:

- Document technical requirements for production deployment

- Establish model monitoring and retraining processes

- Create handoff from data science to operations/IT

- Define success criteria for declaring pilot "production-ready"

Priority 2: Expand AI Talent. Hire or contract additional data scientists, ML engineers, and MLOps specialists. Build AI capability across business units.

Priority 3: Strengthen Governance. Before scaling AI broadly, establish:

- AI ethics and responsible AI policies

- Model risk management framework

- Compliance procedures (EU AI Act, industry regulations)

- Bias testing and mitigation processes

If You're at Level 4-5 (Implemented to Embedded):

Priority 1: Industrialize AI Operations

- Develop AI center of excellence

- Create reusable ML components and templates

- Automate model lifecycle management

- Build self-service AI platforms for business users

Priority 2: Expand Use Cases

- Systematic use case identification across all departments

- Portfolio management for AI initiatives

- Resource allocation optimization

- Innovation programs for emerging AI capabilities

Priority 3: AI-Driven Transformation

- Embed AI into products and services

- Use AI for strategic decision-making

- Build AI-native processes and workflows

- Create competitive advantage through AI differentiation

FAQs

1. What is an AI readiness assessment?

An AI readiness assessment is a systematic evaluation of an organization's preparedness to successfully adopt and implement artificial intelligence technologies. It measures capabilities across strategy, data, infrastructure, people, and governance to identify strengths, gaps, and priorities for AI initiatives. Think of it as a health checkup for your organization's AI foundations.

2. How long does an AI readiness assessment take?

A basic assessment takes 2-4 weeks for small to mid-size organizations. Comprehensive enterprise-wide evaluations, including detailed data audits, stakeholder interviews, infrastructure reviews, and governance assessments, take 6-10 weeks depending on organization size, complexity, and number of business units involved.

3. What are the key components of an AI readiness framework?

The six core components are:

- Leadership and Strategy - Executive sponsorship and strategic alignment

- Data Foundations - Quality, accessibility, and governance

- Technology Infrastructure - Cloud platforms, ML tools, deployment capabilities

- Organizational Capability - Skills, talent, and cultural readiness

- AI Governance - Ethics, compliance, and responsible AI practices

- Use Case Identification - Business value and ROI framework

Together, these pillars define readiness for AI operationalization. Organizations strong in all six areas achieve significantly higher AI success rates.

4. How do I know if my organization is ready for AI?

Assess your maturity across key areas:

- Do you have executive sponsorship and a clear AI strategy? (If no, you're at Level 1)

- Is your data clean, accessible, and governed? (Data quality score 80%+)

- Do you have the necessary infrastructure and talent? (Cloud platform, data science team)

- Can you articulate the expected ROI? (Defined success metrics)

Organizations at Level 2 (Planning) or higher are ready to pilot AI projects. Level 1 organizations need 6-12 months of foundation building before starting AI initiatives.
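The four questions above can be turned into a simple, repeatable check. The following is a minimal sketch, not an official scoring method: the class, field names, and the rule that all four criteria must pass are assumptions made for illustration, and the 80% threshold is taken from the data quality question above.

```python
from dataclasses import dataclass


@dataclass
class ReadinessAnswers:
    """Answers to the four quick-check questions (all names are illustrative)."""
    has_executive_sponsorship: bool
    data_quality_score: float  # 0-100, however your organization measures it
    has_infrastructure_and_talent: bool
    has_defined_success_metrics: bool


def quick_check(answers: ReadinessAnswers, quality_threshold: float = 80.0) -> dict:
    """List unmet criteria and flag the Level 1 condition.

    Assumption: missing executive sponsorship is treated as Level 1, as the
    first question states. Other gaps are reported but not mapped to a level.
    """
    gaps = []
    if not answers.has_executive_sponsorship:
        gaps.append("executive sponsorship and AI strategy")
    if answers.data_quality_score < quality_threshold:
        gaps.append("data quality below threshold")
    if not answers.has_infrastructure_and_talent:
        gaps.append("infrastructure and talent")
    if not answers.has_defined_success_metrics:
        gaps.append("defined ROI and success metrics")

    return {
        "likely_level_1": not answers.has_executive_sponsorship,
        "gaps": gaps,
        "all_criteria_met": len(gaps) == 0,
    }


# Hypothetical organization: sponsored, but with weak data and no ROI metrics.
result = quick_check(ReadinessAnswers(True, 72.0, True, False))
print(result)
```

Keeping the output as a list of named gaps, rather than a single score, makes it easier to decide which foundation to address first.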

5. What's the difference between AI readiness for traditional AI vs. Generative AI?

Traditional AI readiness focuses on data quality, model training infrastructure, and deployment pipelines for specific use cases (classification, prediction, optimization).

GenAI requires additional considerations:

- Prompt engineering skills - New capability for effective LLM usage

- LLM selection criteria - Choosing appropriate models (GPT, Claude, Llama)

- Responsible AI guardrails - Content safety, bias mitigation, hallucination detection

- IP and copyright risks - Managing legal concerns with generated content

- Data privacy - Ensuring prompts don't leak sensitive information

- Cost management - Token-based pricing vs. traditional ML costs

91% of organizations need better AI governance and transparency - this becomes even more critical with GenAI's potential for harmful or biased outputs.
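The cost management point is easier to reason about with a rough calculation. The sketch below estimates monthly spend under token-based pricing. The function name, the workload figures, and the per-million-token rates are all made up for illustration; real rates vary by provider and model and should come from the provider's current price list.

```python
def monthly_llm_cost(
    requests_per_month: int,
    avg_input_tokens: int,
    avg_output_tokens: int,
    input_price_per_million: float,
    output_price_per_million: float,
) -> float:
    """Estimate monthly spend when input and output tokens are billed separately.

    Prices are expressed per one million tokens. All rates passed in are
    placeholders, not quotes from any vendor.
    """
    input_cost = requests_per_month * avg_input_tokens * input_price_per_million / 1_000_000
    output_cost = requests_per_month * avg_output_tokens * output_price_per_million / 1_000_000
    return input_cost + output_cost


# Hypothetical workload: 50,000 requests, 800 input and 300 output tokens each,
# at assumed rates of $2.00 (input) and $8.00 (output) per million tokens.
estimate = monthly_llm_cost(50_000, 800, 300, 2.00, 8.00)
print(f"Estimated monthly cost: ${estimate:,.2f}")
```

Unlike traditional ML, where cost is dominated by training and hosting, this estimate scales directly with usage, so request volume and prompt length belong in the business case.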

6. Do small businesses need AI readiness assessments?

Yes. While assessments may be less formal than enterprise evaluations, small businesses benefit from:

- Understanding data quality before investing in AI tools

- Identifying high-impact use cases with clear ROI (customer service, marketing automation, inventory optimization)

- Evaluating cloud AI platforms vs. custom development

- Planning talent needs (hire vs. contract vs. low-code tools)

Small businesses can use free assessment tools (Microsoft, AWS) and focus on 1-2 high-value use cases rather than a comprehensive AI strategy. The assessment prevents wasting budget on AI solutions that won't work due to data or infrastructure gaps.

7. What tools can help with AI readiness assessment?

Free assessment tools:

- Microsoft AI Readiness Assessment (interactive, self-service)

- AWS AI Readiness Evaluation

- EDUCAUSE Higher Education AI Readiness Framework

Consultancy frameworks:

- Quinnox AI Readiness Checklist (downloadable template)

- AriseGTM Department-by-Department Assessment Guide

- Custom assessments using the maturity model framework in this guide

Data governance platforms (addressing data readiness):

- OvalEdge - Data catalog, quality monitoring, lineage tracking

- Collibra - Enterprise data governance

- Alation - Data catalog with AI/ML integration

Choose tools based on your organization's size and industry. Start with free assessments, then invest in data governance platforms as you move from Level 1 to Level 2.

8. How often should we reassess AI readiness?

Full reassessment: Annually, or when launching major new AI initiatives

Pulse checks: Quarterly reviews of key metrics:

- Data quality scores

- Number of AI use cases in production

- Model performance metrics

- AI governance policy compliance

Reassess when:

- Organizational changes (mergers, new leadership, restructuring)

- Technology landscape shifts (new GenAI capabilities, regulatory changes)

- After pilot projects conclude (both successes and failures provide lessons)

- Moving between maturity levels (Level 2 → Level 3)

Continuous monitoring of data quality, model performance, and infrastructure health should be automated rather than left to periodic assessments.
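As an illustration of what automating these checks might look like, the sketch below compares reported metrics against minimum thresholds and returns alerts. The metric names and threshold values are hypothetical. In practice the values would come from your data quality and model monitoring tools, and the thresholds from your own governance policy.

```python
from typing import Dict, List, Tuple

# Hypothetical thresholds: metric name -> (minimum acceptable value, label).
THRESHOLDS: Dict[str, Tuple[float, str]] = {
    "data_quality_score": (80.0, "Data quality score (%)"),
    "model_accuracy": (0.85, "Production model accuracy"),
    "policy_compliance_rate": (0.95, "AI governance policy compliance rate"),
}


def pulse_check(metrics: Dict[str, float]) -> List[str]:
    """Return an alert for every metric that is missing or below its minimum."""
    alerts = []
    for name, (minimum, label) in THRESHOLDS.items():
        value = metrics.get(name)
        if value is None:
            alerts.append(f"{label}: no value reported")
        elif value < minimum:
            alerts.append(f"{label}: {value} is below the minimum of {minimum}")
    return alerts


# Example run with one failing metric and one missing metric.
alerts = pulse_check({"data_quality_score": 76.5, "model_accuracy": 0.91})
for alert in alerts:
    print(alert)
```

A check like this can run on a schedule, with the quarterly pulse check then reviewing the alert history rather than collecting the numbers by hand.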

9. What's the most common gap in AI readiness?

Data readiness is the #1 barrier. According to industry research:

- 67% cite data quality issues as their top challenge

- 60% of AI success depends on data foundations

Organizations often have:

- Poor data quality - Duplicates, missing values, inconsistent formats

- Siloed data sources - Scattered across departments and systems

- Inadequate governance - No clear ownership, policies, or quality standards

- Insufficient data accessibility - Data locked in legacy systems

Address data foundations before focusing on infrastructure. Implementing a data governance platform like OvalEdge solves cataloging, quality monitoring, lineage tracking, and access management - the core prerequisites for AI readiness.
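To make the data quality problems listed above measurable, here is a minimal profiling sketch using only the Python standard library. It is not how any particular governance platform works. The function name, field names, and sample records are invented for illustration, and it assumes all field values are strings or None and that dates are expected in YYYY-MM-DD format.

```python
import re
from typing import Dict, List, Optional

Row = Dict[str, Optional[str]]

# Assumed expected date format: YYYY-MM-DD.
ISO_DATE = re.compile(r"^\d{4}-\d{2}-\d{2}$")


def profile(rows: List[Row], key: str, date_field: str) -> Dict[str, float]:
    """Compute three simple quality ratios (0.0 to 1.0) for a list of records."""
    total_rows = len(rows)
    if total_rows == 0:
        return {"completeness": 0.0, "duplicate_rate": 0.0, "date_format_consistency": 0.0}

    # Missing values: share of cells that are neither None nor blank.
    cells = [value for row in rows for value in row.values()]
    filled = sum(1 for value in cells if value is not None and value.strip() != "")

    # Duplicates: rows whose key value has already been seen.
    seen, duplicates = set(), 0
    for row in rows:
        if row.get(key) in seen:
            duplicates += 1
        seen.add(row.get(key))

    # Inconsistent formats: share of non-empty dates matching the expected format.
    dates = [row[date_field] for row in rows if row.get(date_field)]
    consistent = sum(1 for d in dates if ISO_DATE.match(d))

    return {
        "completeness": filled / len(cells) if cells else 0.0,
        "duplicate_rate": duplicates / total_rows,
        "date_format_consistency": consistent / len(dates) if dates else 0.0,
    }


sample = [
    {"customer_id": "C1", "email": "a@example.com", "signup_date": "2024-01-15"},
    {"customer_id": "C2", "email": None, "signup_date": "15/01/2024"},
    {"customer_id": "C1", "email": "a@example.com", "signup_date": "2024-01-15"},
    {"customer_id": "C3", "email": "", "signup_date": "2024-02-01"},
]
report = profile(sample, key="customer_id", date_field="signup_date")
print(report)
```

Even a rough profile like this gives a baseline that can be tracked over time, which is more useful than a one-off impression that the data is "poor".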

10. How does AI readiness assessment relate to data governance?

Strong data governance is foundational to AI readiness. You cannot succeed with AI without:

Data Cataloging - Teams must find and understand available datasets. Without a data catalog, data scientists waste weeks searching for data that may already exist.

Quality Management - AI models require 85%+ data accuracy. Data governance provides quality frameworks, validation rules, and continuous monitoring.

Access Controls - Proper data access management ensures teams can access data they need while protecting sensitive information (PII, PHI).

Lineage Tracking - AI transparency and explainability require knowing where data comes from and how it's transformed. Data lineage provides this visibility.

Compliance Frameworks - AI must comply with GDPR, HIPAA, and industry regulations. Data governance establishes policies and audit trails.

OvalEdge's data governance platform addresses these AI readiness prerequisites, positioning organizations to move from Level 1-2 (Unprepared/Planning) to Level 3 (Developing) by establishing the data foundation needed for successful AI.

Next Steps: Your AI Readiness Journey

Now that you understand AI readiness assessment, take these concrete actions:

Immediate Actions (This Week):

- Complete Quick Self-Assessment - Use the maturity level quick check earlier in this guide to determine your current level (1-5). Score your actual gaps honestly rather than aspirationally.

- Download the AI Readiness Checklist - Our free 50-point assessment checklist provides structured evaluation across all six pillars with scoring guidance.

- Identify Your #1 Readiness Gap - Most organizations have one critical barrier:

- No executive support? Build a business case

- Poor data quality? Start a data governance program

- No infrastructure? Evaluate cloud platforms

- No talent? Plan hiring or training strategy

This Month:

- Conduct Stakeholder Interviews

Talk to:

- Business leaders on AI strategy and use cases

- IT teams about infrastructure capabilities

- Data teams about data quality and access

- End users about process pain points AI could address

- Assess Your Data Foundations - This is the #1 predictor of AI success:

- Inventory critical data sources

- Measure data quality (accuracy, completeness, timeliness)

- Evaluate governance policies and enforcement

- Identify data accessibility barriers

- Define 1-2 Pilot Use Cases - Select based on:

- Clear business value (cost reduction or revenue increase)

- Available or easily obtainable data

- Achievable with current resources

- Measurable success criteria

- 3-6 month timeline

This Quarter:

- Strengthen Data Governance - Implement a data catalog and governance platform (OvalEdge recommended):

- Automated data discovery and cataloging

- Data quality monitoring

- Lineage tracking for AI transparency

- Access control management

- Build AI Literacy - Train business stakeholders on:

- AI capabilities and limitations

- Use case identification

- ROI measurement

- Responsible AI considerations

- Launch First Pilot - If you're at Level 2 (Planning):

- Start with a classic supervised learning use case on structured data (typically simpler to deliver than a deep learning project)

- Partner with a consultancy if lacking internal expertise

- Define clear success metrics upfront

- Plan for production deployment from day one (avoid perpetual pilots)


OvalEdge recognized as a leader in data governance solutions


“Reference customers have repeatedly mentioned the great customer service they receive along with the support for their custom requirements, facilitating time to value. OvalEdge fits well with organizations prioritizing business user empowerment within their data governance strategy.”


Gartner, Magic Quadrant for Data and Analytics Governance Platforms, January 2025

Gartner does not endorse any vendor, product or service depicted in its research publications, and does not advise technology users to select only those vendors with the highest ratings or other designation. Gartner research publications consist of the opinions of Gartner’s research organization and should not be construed as statements of fact. Gartner disclaims all warranties, expressed or implied, with respect to this research, including any warranties of merchantability or fitness for a particular purpose.

GARTNER and MAGIC QUADRANT are registered trademarks of Gartner, Inc. and/or its affiliates in the U.S. and internationally and are used herein with permission. All rights reserved.