AI Data Management: Key Components and Best Practices for 2026

AI data management is a comprehensive approach that uses artificial intelligence technologies to automate, optimize, and improve data management processes. Its core objective is to handle both structured and unstructured data more effectively, boosting efficiency, security, and compliance, while minimizing human error. AI assists in various tasks like data discovery, cleaning, classification, and governance, making it easier for organizations to process vast amounts of data quickly and accurately. The ultimate goal is to ensure real-time data processing, provide actionable insights, and streamline workflows to support smarter decision-making.

Is your data scattered across multiple systems, making it difficult to get a clear picture?

Imagine a healthcare organization where patient data is split between various departments like appointments, billing, and medical records. Each department has its own system, causing delays and discrepancies when trying to access complete patient histories.

According to McKinsey’s 2024 Master Data Management Survey, 62% of organizations don’t have a solid process for integrating new and existing data, making it harder to resolve data silos and unify their information.

This fragmentation limits the ability to make informed decisions quickly.

In data management, this disorganization is a major bottleneck. It hampers collaboration, slows down insights, and increases risks.

This is where AI data management can help. With AI-driven solutions, businesses can automate data processes, integrate diverse data sources, and eliminate silos, enabling real-time insights and better decision-making.

In this blog, we’ll discuss how AI can improve your data management strategy and break down barriers for a more streamlined approach.

What is AI data management?

AI data management leverages artificial intelligence to automate and optimize data processes, improving efficiency, security, and compliance. By integrating machine learning, automation, and advanced analytics, AI systems help businesses manage both structured and unstructured data more effectively.

This approach ensures real-time data processing, enhances data quality, and provides actionable insights. AI data management is essential for organizations looking to scale their data operations, reduce human error, and drive smarter decision-making. With AI's ability to streamline data workflows, companies can unlock new opportunities and ensure more secure, reliable data management across platforms.

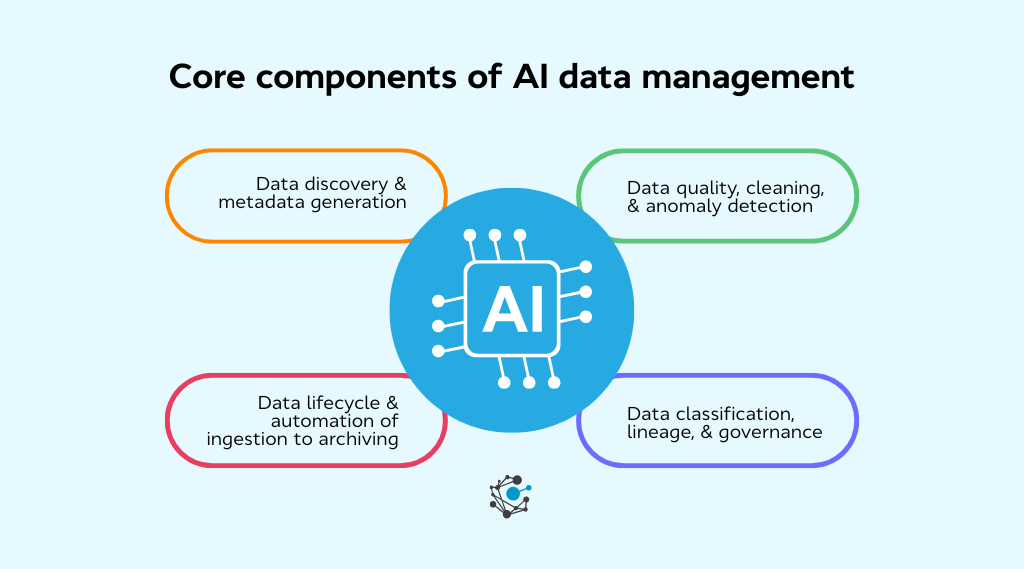

Core components of AI data management

As organizations deal with increasing data volumes, AI enables the scalable management of both structured and unstructured data.

Below are the core components of AI data management that make it a game-changer for businesses across industries.

Data discovery & metadata generation

Data discovery involves identifying, cataloging, and classifying data from a wide range of sources across cloud environments, on-premise databases, and hybrid infrastructures. While traditional data discovery relies heavily on manual tagging and categorization, AI accelerates this process by automatically generating rich metadata (key information that helps users understand the content and context of their data).

AI systems do this by scanning datasets for meaningful characteristics such as data type, business relevance, usage frequency, and relationships to other data points.

According to McKinsey’s 2024 Survey on Master Data Management, 80% of responding organizations report that some of their divisions still operate in silos, each with its own data management practices, source systems, and consumption behaviors.

This fragmentation can create significant challenges in data discovery, making it difficult to access and integrate data across the organization.

By automating metadata generation, AI eliminates time-consuming manual work and improves the comprehensiveness and accuracy of data inventories.

This makes it easier for teams to quickly access and understand the data they need, without relying on complex or outdated documentation systems.

For example, in a large enterprise, multiple teams might need access to customer data for different reasons: sales teams for targeting, marketing teams for segmentation, and compliance teams for auditing. AI can automatically tag this data with metadata like "customer_id," "transaction_date," or "location" and assign access controls based on team roles. By automating this process, businesses can ensure that each team can reach the right data when it needs it, with a clear understanding of what that data contains.
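To make automated metadata generation more concrete, here is a minimal Python sketch that profiles a small sample of rows and records an inferred type, null rate, and distinct count for each column. The function names, the sample rows, and the single supported date format are assumptions for illustration; real discovery tools use far richer signals such as usage frequency and relationships between datasets.

```python
from datetime import datetime


def _parses(value, parser):
    """Return True if parser(value) succeeds without raising."""
    try:
        parser(value)
        return True
    except (ValueError, TypeError):
        return False


def infer_type(values):
    """Guess a coarse type from the non-empty string values of a column."""
    present = [v for v in values if v not in ("", None)]
    if not present:
        return "unknown"
    if all(_parses(v, int) for v in present):
        return "integer"
    if all(_parses(v, float) for v in present):
        return "float"
    # Assumption: dates arrive as YYYY-MM-DD strings.
    if all(_parses(v, lambda s: datetime.strptime(s, "%Y-%m-%d")) for v in present):
        return "date"
    return "string"


def profile(rows):
    """Build per-column metadata: inferred type, null rate, distinct count."""
    metadata = {}
    for col in rows[0].keys():
        values = [row.get(col) for row in rows]
        present = [v for v in values if v not in ("", None)]
        metadata[col] = {
            "type": infer_type(values),
            "null_rate": 1 - len(present) / len(values),
            "distinct": len(set(present)),
        }
    return metadata


# Hypothetical sample rows using the column names from the example above.
rows = [
    {"customer_id": "101", "transaction_date": "2024-03-01", "location": "Austin"},
    {"customer_id": "102", "transaction_date": "2024-03-02", "location": ""},
    {"customer_id": "101", "transaction_date": "2024-03-05", "location": "Denver"},
]
catalog = profile(rows)
```

A catalog entry like `catalog["location"]` then carries the kind of descriptive metadata a team would otherwise have to document by hand.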

Data quality, cleaning, & anomaly detection

One of the most persistent challenges in data management is ensuring the accuracy, consistency, and cleanliness of data. Poor data quality can lead to faulty analytics, incorrect business decisions, and even compliance violations.

According to Forrester Research’s Data Culture & Literacy Survey 2023, over 25% of global data and analytics professionals report that poor data quality is a significant barrier, estimating that their organizations lose more than $5 million annually as a result.

These figures underscore the critical need for effective data quality management.

AI helps overcome this by leveraging machine learning models that continuously clean and monitor data in real time.

AI systems can identify and correct common data quality issues, such as duplicate entries, missing values, and formatting inconsistencies, often before these issues impact downstream processes.

For example, in customer data management, AI can detect when two different records belong to the same customer (i.e., a duplicate), or if a critical customer detail like an email address is missing. AI can automatically standardize data formatting, such as converting all dates to a consistent format, and remove redundant data entries, ensuring that the dataset is accurate and streamlined.
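The cleaning steps in this example can be sketched with simple rules in Python. This is a rule-based stand-in, not a machine learning model: it treats a normalized email address as the duplicate key, and the list of accepted date formats is an assumption. Production entity resolution typically adds fuzzy matching on names and addresses.

```python
from datetime import datetime

# Assumed input formats; extend to match the source systems.
DATE_FORMATS = ("%Y-%m-%d", "%m/%d/%Y", "%d.%m.%Y")


def normalize_date(text):
    """Return the date as ISO 8601 (YYYY-MM-DD), or None if no format matches."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(text.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    return None


def clean(records):
    """Standardize fields, drop duplicates by email, and collect rows missing an email."""
    seen, cleaned, missing_email = set(), [], []
    for rec in records:
        email = (rec.get("email") or "").strip().lower()
        row = {**rec, "email": email, "signup": normalize_date(rec.get("signup") or "")}
        if not email:
            missing_email.append(row)  # cannot be deduplicated; route to review
            continue
        if email in seen:
            continue  # later record for an email that was already kept
        seen.add(email)
        cleaned.append(row)
    return cleaned, missing_email


# Hypothetical customer records with a duplicate and a missing email.
records = [
    {"name": "Ana Ruiz", "email": "Ana.Ruiz@example.com ", "signup": "03/01/2024"},
    {"name": "A. Ruiz", "email": "ana.ruiz@example.com", "signup": "2024-03-01"},
    {"name": "Ben Cole", "email": "", "signup": "01.03.2024"},
]
cleaned, missing_email = clean(records)
```

Keeping the first record and discarding later duplicates is a design choice made for brevity; a real pipeline would usually merge fields from both records.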

Beyond these improvements, AI also plays a crucial role in anomaly detection. Data anomalies are irregularities that may indicate errors, fraudulent activity, or changing trends in data patterns.

Traditional methods of anomaly detection are reactive: they flag issues only after processes have already been disrupted. AI-driven anomaly detection is proactive, continuously monitoring data as it flows through systems and identifying unusual patterns or shifts in real time.

For instance, if an AI system notices that sales numbers have drastically declined in a particular region, it can flag this as a potential issue and trigger a review before any significant business impact occurs.

In highly regulated industries, such as healthcare or finance, AI-powered anomaly detection is vital for ensuring compliance with regulations.

For example, it can help detect discrepancies in financial transactions or unusual access to sensitive patient data, which could indicate potential breaches.
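One simple way to approximate this kind of continuous monitoring is a rolling z-score, which compares each new value against the mean and standard deviation of a trailing window. The sketch below assumes a daily sales series, a seven-day window, and a threshold of three standard deviations; all three are illustrative choices, and production systems usually rely on models that account for seasonality and trend.

```python
from statistics import mean, stdev


def flag_anomalies(series, window=7, threshold=3.0):
    """Return indices whose value deviates from the trailing-window mean
    by more than `threshold` sample standard deviations."""
    flagged = []
    for i in range(window, len(series)):
        history = series[i - window:i]
        mu, sigma = mean(history), stdev(history)
        if sigma == 0:
            continue  # flat history: the z-score is undefined, so skip
        if abs(series[i] - mu) / sigma > threshold:
            flagged.append(i)
    return flagged


# Hypothetical daily sales for one region, with a sharp drop at index 9.
daily_sales = [100, 104, 98, 101, 99, 103, 97, 102, 100, 40, 101]
anomalies = flag_anomalies(daily_sales)  # indices to send for review
```

Note that a flagged value stays in the trailing window for the following days and inflates the standard deviation, so a more careful implementation would exclude confirmed anomalies from the history.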

Data classification, lineage, & governance

Data classification refers to the categorization of data based on its sensitivity, value, and usage. AI plays a crucial role in automating the classification process, which is particularly important for organizations managing large volumes of sensitive data.

For instance, personal data, credit card details, or confidential business strategies all need to be classified and protected differently.

AI systems use natural language processing (NLP) and machine learning algorithms to assess the context and sensitivity of data. They can identify personally identifiable information (PII), intellectual property, and other protected categories of data.

By automatically tagging this data, AI ensures that appropriate access controls are enforced based on its classification. This reduces the burden on IT and security teams and helps businesses comply with data privacy laws like GDPR or CCPA.
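As a rough illustration of automated tagging, the Python sketch below uses regular expressions to detect a few PII types and assign a sensitivity label. Pattern matching is a deliberately simple stand-in for the NLP and machine learning models described above; the patterns, the label names "restricted" and "internal", and the sample strings are all assumptions.

```python
import re

# Illustrative patterns only; production classifiers use broader rules and ML models.
PII_PATTERNS = {
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "card_number": re.compile(r"\b(?:\d{4}[ -]?){3}\d{4}\b"),
}


def classify(text):
    """Return a sensitivity label and the PII types detected in the text."""
    found = sorted(name for name, rx in PII_PATTERNS.items() if rx.search(text))
    return {"label": "restricted" if found else "internal", "pii_types": found}


note = classify("Refund to jo@example.com, card 4111 1111 1111 1111")
memo = classify("Quarterly planning meeting moved to Thursday")
```

The resulting label can then drive which access policy applies to the record.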

Data lineage, on the other hand, refers to tracking the flow and transformations of data as it moves through systems. AI creates visual lineage graphs that show the full journey of data, from its origin to where it is stored, modified, and used. This visibility is essential for ensuring data integrity, auditing purposes, and compliance.

For example, if a healthcare organization needs to demonstrate how patient data is being used across different departments, AI-powered lineage tracking can provide a clear, real-time map of this data flow, improving transparency and auditability.
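Under the hood, a lineage map is a directed graph, and questions such as "what is affected if this source changes?" or "where did this report's data come from?" become graph traversals. The following Python sketch assumes a small, hand-written set of lineage edges with hypothetical dataset names; in practice the edges would be harvested from query logs and pipeline definitions.

```python
from collections import deque

# Hypothetical lineage edges: each dataset maps to the datasets derived from it.
LINEAGE = {
    "ehr.patients": ["staging.patients_clean"],
    "billing.invoices": ["staging.invoices_clean"],
    "staging.patients_clean": ["mart.patient_billing"],
    "staging.invoices_clean": ["mart.patient_billing"],
    "mart.patient_billing": ["report.monthly_revenue"],
}


def downstream(start, edges=LINEAGE):
    """Breadth-first walk returning every dataset derived from `start`."""
    seen, queue, order = {start}, deque([start]), []
    while queue:
        node = queue.popleft()
        for child in edges.get(node, []):
            if child not in seen:
                seen.add(child)
                order.append(child)
                queue.append(child)
    return order


def upstream(target, edges=LINEAGE):
    """Return every dataset that feeds into `target`, directly or indirectly."""
    parents = {}
    for src, children in edges.items():
        for child in children:
            parents.setdefault(child, []).append(src)
    return downstream(target, parents)  # same walk over the reversed graph


impact = downstream("ehr.patients")            # what a change to patient data touches
sources = upstream("report.monthly_revenue")   # what an auditor would trace back to
```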

Governance is an essential aspect of data management, particularly in industries where data privacy and compliance are critical. AI tools assist by automating compliance checks and ensuring that data governance policies are consistently followed.

For example, in an organization with vast amounts of customer data, AI can automatically enforce rules on who has access to specific data based on roles or compliance requirements. This reduces the risk of human error and enhances overall governance processes.
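The enforcement step itself can be expressed as a small policy check. The Python sketch below assumes four classification levels and a hypothetical mapping from roles to the highest level each role may read; it denies unknown roles by default and records every decision so it can be audited later.

```python
# Hypothetical policy: the highest classification each role may read.
LEVELS = {"public": 0, "internal": 1, "confidential": 2, "restricted": 3}
ROLE_CLEARANCE = {
    "marketing": "internal",
    "sales": "confidential",
    "compliance": "restricted",
}


def can_read(role, classification):
    """Allow access only when the role's clearance covers the data's classification."""
    clearance = ROLE_CLEARANCE.get(role)
    if clearance is None:
        return False  # unknown roles are denied by default
    return LEVELS[clearance] >= LEVELS[classification]


def audit(requests):
    """Evaluate (role, dataset, classification) requests and log each decision."""
    return [
        {"role": role, "dataset": dataset, "allowed": can_read(role, classification)}
        for role, dataset, classification in requests
    ]


log = audit([
    ("marketing", "customer_segments", "internal"),
    ("marketing", "card_numbers", "restricted"),
    ("compliance", "card_numbers", "restricted"),
    ("contractor", "customer_segments", "internal"),
])
```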

Data lifecycle & automation of ingestion to archiving

AI significantly enhances the management of the data lifecycle, spanning from the initial ingestion of data to its eventual archiving or deletion. This process, when automated with AI, ensures that data is stored efficiently, easily accessible when needed, and appropriately archived when it becomes inactive.

AI helps businesses streamline and automate the entire data ingestion process. Data coming from various sources (e.g., external APIs, IoT devices, transactional systems) can be ingested automatically, processed, and stored in the appropriate systems.

For example, AI can analyze incoming data to determine its structure, format, and relevance, ensuring it is routed to the correct storage location (whether on-premise or cloud) with minimal human intervention.

One of the key benefits of AI in data lifecycle management is storage tiering. Data that is frequently accessed (such as active customer records) can be kept in high-performance storage systems for fast access, while less frequently used data (e.g., historical transaction records) can be moved to cost-effective, lower-performance storage.

AI can automate these decisions by analyzing usage patterns and establishing rules for when data should be moved to a different storage tier. This ensures that businesses can manage their data at scale while keeping costs under control.
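A tiering decision of this kind can be sketched as a rule over usage statistics. In the Python example below, the thresholds, tier names, and dataset figures are assumptions chosen for illustration; an AI-driven system would learn or tune such thresholds from observed access patterns rather than hard-code them.

```python
from datetime import date

# Assumed thresholds; real policies depend on cost and latency targets.
HOT_MIN_READS_30D = 50
COLD_AFTER_DAYS = 180


def choose_tier(reads_last_30d, last_accessed, today):
    """Pick a storage tier from the recent read count and days since last access."""
    idle_days = (today - last_accessed).days
    if reads_last_30d >= HOT_MIN_READS_30D:
        return "hot"
    if idle_days >= COLD_AFTER_DAYS:
        return "cold"
    return "warm"


today = date(2025, 6, 30)
# Hypothetical usage statistics: (reads in the last 30 days, last access date).
datasets = {
    "active_customers": (420, date(2025, 6, 29)),
    "q1_campaign_results": (6, date(2025, 5, 12)),
    "transactions_2019": (0, date(2024, 9, 1)),
}
tiers = {
    name: choose_tier(reads, last_seen, today)
    for name, (reads, last_seen) in datasets.items()
}
```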

Moreover, when data becomes obsolete or outdated, AI can trigger automated archiving processes.

For example, financial data that is no longer needed for compliance or has reached its retention limit can be archived or purged in a manner that meets legal and regulatory standards. This automation reduces manual oversight and helps keep data management processes compliant and cost-effective.
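A retention check like the one described here reduces to comparing a record's age against a schedule. The Python sketch below uses hypothetical record types and retention periods (actual periods come from legal and regulatory policy), lets a legal hold override the schedule, and keeps anything without a defined policy rather than deleting it.

```python
from datetime import date

# Assumed retention periods in years; real values come from legal and regulatory policy.
RETENTION_YEARS = {"financial_statement": 7, "marketing_email": 2}


def retention_action(record_type, created, today, legal_hold=False):
    """Return 'retain' or 'expired' based on the record's age and any legal hold."""
    if legal_hold:
        return "retain"  # holds always override the schedule
    years = RETENTION_YEARS.get(record_type)
    if years is None:
        return "retain"  # no policy defined: keep and escalate rather than delete
    try:
        expires = created.replace(year=created.year + years)
    except ValueError:
        # Created on Feb 29 and the target year is not a leap year.
        expires = created.replace(year=created.year + years, day=28)
    return "expired" if today >= expires else "retain"


today = date(2025, 6, 30)
old_statement = retention_action("financial_statement", date(2017, 1, 15), today)
recent_statement = retention_action("financial_statement", date(2020, 1, 15), today)
held_statement = retention_action(
    "financial_statement", date(2017, 1, 15), today, legal_hold=True
)
```

Records marked "expired" would then be handed to whichever archive or purge process the organization's policy prescribes.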

With AI-driven automation and intelligence, organizations not only improve their data management efficiency but also reduce operational costs, increase data accessibility, and ensure compliance with ever-evolving regulations.

The potential for AI to streamline data processes across the entire lifecycle, from ingestion to archiving, means that businesses can focus more on strategic decision-making and less on data maintenance, ultimately gaining a competitive edge in their respective industries.

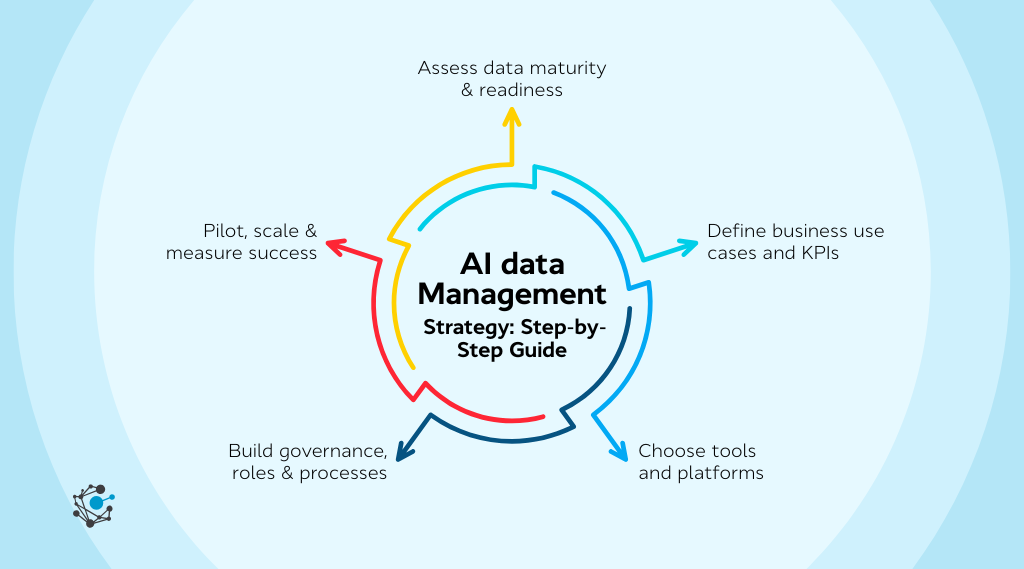

AI data management strategy: step-by-step guide

Adopting AI for data management requires a comprehensive, structured approach that aligns with business goals, technological capabilities, and regulatory frameworks. Implementing it demands careful planning, investment in the right tools, and ongoing refinement.

According to a 2024 Gartner Survey on Modern Data & Analytics Governance, by 2027, 60% of organizations will fail to realize the value of their AI use cases due to incohesive data governance frameworks.

This highlights the critical importance of integrating a robust governance strategy from the outset. Without clear data governance and an organized framework for managing AI-driven data processes, even the most advanced AI tools will struggle to deliver measurable results.

Below, we explore a detailed AI data management strategy that businesses can follow to effectively integrate AI into their data management operations.

Step 1: Assess data maturity & readiness

Before diving into the implementation of AI data management, it's crucial to assess your organization’s data maturity. This is a foundational step, as it allows businesses to understand where they currently stand in terms of data governance, data infrastructure, and analytics capabilities.

A clear understanding of data maturity helps organizations set realistic expectations, identify gaps, and establish a roadmap for success.

What does a data maturity assessment involve?

- Data infrastructure evaluation: Assess your current systems, whether cloud-based, on-premise, or hybrid.

  - Are your data storage and processing systems capable of handling AI-driven workflows?

  - AI requires high-quality, structured data, and an infrastructure that can support fast data processing.

- Metadata standards: Metadata is the backbone of AI systems. AI-driven tools need accurate, standardized metadata to make sense of data. You should evaluate how well metadata is currently being generated, stored, and used.

  For example, if metadata is incomplete or inaccurate, AI algorithms will struggle to find patterns or automate processes effectively.

- Data governance practices: Governance is critical, especially when dealing with AI. Your existing governance structure should align with AI-driven approaches. This includes ensuring that data privacy, security, and ethical considerations are incorporated at the outset.

  AI can help automate compliance checks, but governance protocols must be in place to ensure the technology adheres to regulations such as GDPR, HIPAA, or CCPA.

- Automation readiness: Evaluate the level of automation your organization is comfortable with. Some businesses may need a phased approach, starting with automation in specific areas like data cleaning or reporting.

  For others, fully automated AI solutions might be the goal from the start. The readiness for AI automation will depend on the organization's current workflow and the complexity of the tasks to be automated.

A large healthcare provider looking to implement AI might first assess its data infrastructure, which includes Electronic Health Records (EHR) systems, patient data privacy standards, and the speed at which data is transferred across departments. By understanding its data maturity, the organization can prioritize AI initiatives, such as improving data quality for predictive analytics or enhancing the automation of patient billing processes.

Step 2: Define business use cases and KPIs

AI is a versatile tool, but to achieve tangible benefits, it’s crucial to focus on areas that have a clear return on investment (ROI). Use cases should align with business priorities and address specific pain points. These could range from improving data accuracy to enhancing compliance reporting or accelerating decision-making.

Choosing the right use cases:

- Compliance automation: For industries like finance and healthcare, AI can be used to automate compliance-related tasks, such as monitoring transactions for fraud or ensuring that healthcare records comply with privacy regulations.

- Data quality improvement: AI can continuously monitor data pipelines for inconsistencies, missing values, or data anomalies, ensuring the quality of data used for decision-making and analytics.

- Analytics and decision support: AI can enable more efficient data analysis, allowing for faster insights.

  For example, AI can be used to generate real-time business intelligence dashboards, which can significantly improve decision-making speed in fast-paced industries like e-commerce or telecommunications.

Once the use cases are defined, it's essential to establish measurable KPIs to track the success of AI implementation. KPIs could include:

- Reduction in data errors: For example, tracking how AI’s automated data cleaning impacts the number of data quality issues.

- Faster time to insights: For use cases around business intelligence, measuring how AI accelerates the speed at which insights are generated from raw data.

- Cost savings: AI can reduce operational costs by automating repetitive tasks. Measuring cost reduction in areas such as manual data cleaning or report generation will help demonstrate ROI.

For example, a manufacturing company looking to enhance its predictive maintenance system might define KPIs such as the reduction in machine downtime, cost savings from optimized maintenance schedules, and improvement in data quality for predictive models.
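KPIs like these are easiest to track when each one is expressed as a percentage change against a baseline. The short Python sketch below shows the arithmetic; the metric names and all figures are invented purely to illustrate the calculation and are not drawn from any survey or customer.

```python
def pct_reduction(before, after):
    """Percentage reduction from a baseline value to a post-rollout value."""
    if before <= 0:
        raise ValueError("baseline must be positive")
    return round(100 * (before - after) / before, 1)


# Hypothetical measurements taken before and after an AI rollout.
baseline = {
    "data_errors_per_10k_rows": 180,
    "hours_to_insight": 48,
    "monthly_cleaning_cost": 25000,
}
after_rollout = {
    "data_errors_per_10k_rows": 45,
    "hours_to_insight": 12,
    "monthly_cleaning_cost": 16000,
}
kpis = {name: pct_reduction(baseline[name], after_rollout[name]) for name in baseline}
```

With these example figures, errors and time to insight each fall by 75%, and cleaning cost falls by 36%.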

Step 3: Choose tools and platforms

The choice of AI tools and platforms is critical to the success of the data management strategy. Many AI-driven data management tools are available, but selecting the right platform involves more than just picking the most popular solution.

You need to consider your organization’s specific requirements, such as scalability, ease of integration, and AI-native features.

Key factors to consider when selecting tools:

- Scalability: Choose platforms that can grow with your business. As data volumes increase, AI systems should be able to scale to handle more data and more complex processes. Cloud-based platforms are often preferred for their ability to scale on demand.

- Integration with existing systems: Many organizations already have complex data ecosystems, so it's important that new AI tools can integrate seamlessly with existing systems, whether they are legacy systems or cloud-based platforms. Look for tools that offer flexible integration options, such as APIs or pre-built connectors.

- AI-native features: Not all data management platforms are built with AI in mind. Ensure that the platform you choose includes AI-powered features, such as automated data cleaning, anomaly detection, and predictive analytics.

- Governance and security: Security and data governance must be built into the platform. Since data privacy is a major concern, especially for businesses in healthcare, finance, and e-commerce, ensure that your platform supports strong governance models, like role-based access control (RBAC), audit trails, and compliance management.

For example, a retail business looking to personalize customer recommendations might opt for an AI-driven platform with built-in machine learning algorithms that can integrate with its existing CRM system, ensuring that the AI can access and process customer data without disrupting current workflows.

Step 4: Build governance, roles & processes

Effective data governance is essential in any AI-driven data management strategy. AI systems often work autonomously, but governance ensures they operate within the boundaries set by business policies, ethical guidelines, and regulatory requirements.

Establishing clear governance processes and roles will provide transparency and accountability for AI systems.

Critical components of data governance include:

- Data ownership and stewardship: Clearly define who owns and is responsible for data across the organization.

  For example, data stewards should be designated to ensure the quality, accessibility, and accuracy of data in their respective domains.

- Approval workflows: Establish workflows that define how data is accessed, used, and approved for various tasks. This ensures that only authorized personnel can make decisions based on sensitive data.

- Compliance and ethical standards: AI tools must adhere to regulatory requirements like GDPR, HIPAA, and CCPA. Governance frameworks should automate compliance checks to ensure AI-driven processes respect privacy laws and data protection standards.

- Transparency and accountability: Especially when using AI to make automated decisions, it is important to ensure that those decisions are explainable and auditable. This is particularly critical in sectors such as finance or healthcare, where AI decisions must be justified to both regulators and stakeholders.

Step 5: Pilot, scale & measure success

A pilot project provides valuable insights into how AI will perform in real-world conditions and allows organizations to test the new processes without full-scale deployment.

Key actions during the pilot phase:

- Define success metrics: Prior to the pilot, establish clear metrics for success.

  For example, if the goal is to automate data cleaning, measure the reduction in manual data cleaning time and the improvement in data accuracy.

- Stakeholder alignment: Engage stakeholders early in the pilot phase. This ensures that there is alignment across departments on the expected outcomes and the methodology used to evaluate success.

- Refinement: After evaluating the pilot results, refine the AI models, governance processes, and workflows. It's crucial to address any shortcomings discovered during the pilot phase before scaling across the organization.

Once the pilot proves successful, the next step is to scale the AI implementation. Scaling should be done in stages, gradually expanding to other departments, data sources, and use cases, ensuring that the processes can be repeated and refined along the way.

This structured approach to AI data management enables businesses to make data-driven decisions faster, reduce operational costs, and position themselves as leaders in their industries.

Challenges with AI data management

Despite its many benefits, implementing AI in data management also comes with a range of challenges that organizations must address. Below, we explore these key obstacles and provide deeper insights into how businesses can navigate them.

Complex integration with legacy systems

Many organizations still operate on legacy systems and outdated technologies that were not designed to work with modern AI platforms. This presents a major hurdle for businesses looking to implement AI data management solutions, especially when dealing with large volumes of data housed in older systems.

The complexity arises from the need to bridge the gap between these legacy systems and the advanced AI-driven tools that require real-time access to data.

Legacy systems often store data in outdated formats, use inefficient databases, and lack the necessary processing power to support modern AI algorithms. As a result, integrating these older systems with new AI solutions can be a slow, resource-intensive process. Furthermore, there's a risk that attempting to integrate new AI tools with legacy infrastructure may disrupt ongoing business operations, causing downtime or data inconsistencies.

A strategic approach is needed for integrating legacy systems with AI-driven data management solutions. Businesses can use a hybrid integration model, where existing systems are gradually connected to new platforms. This can be achieved through middleware or by adopting a phased migration approach.

For instance, companies might begin by integrating data pipelines that bring data from legacy systems into cloud storage or a data lake, where it can be processed by AI tools. Over time, businesses can replace outdated systems with more modern, AI-friendly platforms, reducing operational risks.

Additionally, organizations can leverage AI connectors or ETL (Extract, Transform, Load) tools to map and convert data from old formats into new, AI-compatible ones. While this process can be time-consuming, it ensures that businesses can start reaping the benefits of AI without requiring a full overhaul of their entire IT infrastructure.

For example, a financial institution with decades of transactional data stored in a legacy mainframe system might use AI-driven data management tools to extract and clean data, then gradually migrate this data into cloud storage for more advanced analytics and predictive modeling.
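The "transform" step of such a migration often means turning fixed-width legacy records into typed, self-describing ones. The Python sketch below assumes a hypothetical 31-character record layout, amounts stored as zero-padded cents, and two-letter transaction codes; a real mainframe extract would follow its own copybook or file specification.

```python
from datetime import datetime
from decimal import Decimal

# Hypothetical fixed-width layout: (field name, start offset, end offset).
LAYOUT = [
    ("account", 0, 10),
    ("posted", 10, 18),
    ("amount_cents", 18, 29),
    ("type", 29, 31),
]


def parse_record(line):
    """Convert one fixed-width legacy record into a typed dictionary."""
    raw = {name: line[start:end].strip() for name, start, end in LAYOUT}
    return {
        "account": raw["account"],
        "posted": datetime.strptime(raw["posted"], "%Y%m%d").date().isoformat(),
        "amount": Decimal(raw["amount_cents"]) / 100,  # stored as zero-padded cents
        "type": {"DR": "debit", "CR": "credit"}.get(raw["type"], "unknown"),
    }


# One made-up record: account, posting date, amount in cents, transaction code.
legacy_line = "0004217789" + "20190314" + "00000125050" + "DR"
record = parse_record(legacy_line)
```

Using `Decimal` rather than `float` for the amount avoids rounding drift, which matters for financial data.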

Unstructured & multi-format data handling limitations

AI systems are particularly powerful when working with structured data (data that fits neatly into tables or databases with rows and columns, such as customer records or transaction logs). However, the vast majority of data in most organizations is unstructured (text documents, social media posts, audio files, video content, images, and sensor data).

AI systems often struggle with unstructured data because models need their inputs in a consistent, machine-readable form before they can generate meaningful insights.

For example, while an AI model might efficiently analyze structured data in a sales database, it will face difficulties when tasked with analyzing unstructured text in customer reviews, emails, or audio files. The complexity increases when businesses handle multi-format data, where data might be in diverse forms, like images, raw text, or video files, each requiring unique processing techniques.

To address these challenges, AI tools need to use natural language processing (NLP) for text, image recognition models for pictures, and speech-to-text algorithms for audio. These tools can help process unstructured data into formats that AI models can understand.

In addition, businesses can use data preprocessing pipelines that clean and standardize data before it’s input into AI models.

Advanced feature extraction methods are also crucial for AI systems to effectively handle unstructured data.

For instance, sentiment analysis can be used on text data to understand customer opinions, while object recognition techniques can be applied to images to identify key elements. By developing specialized AI models tailored to handle each data type, businesses can gain deeper insights from unstructured data, improving the overall data management process.
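To show what turning free text into a structured feature looks like, here is a deliberately tiny lexicon-based sentiment scorer in Python. The word lists and sample reviews are assumptions, and counting keywords is a much cruder technique than the trained NLP models a production system would use; the point is only that each review ends up as a score and a label that downstream tools can query.

```python
import re

# Tiny illustrative lexicon; real sentiment models are trained on labeled text.
POSITIVE = {"great", "fast", "helpful", "love", "easy"}
NEGATIVE = {"slow", "broken", "rude", "late", "confusing"}


def sentiment(text):
    """Score text as (positive hits - negative hits) and map the score to a label."""
    tokens = re.findall(r"[a-z']+", text.lower())
    score = sum(t in POSITIVE for t in tokens) - sum(t in NEGATIVE for t in tokens)
    if score > 0:
        label = "positive"
    elif score < 0:
        label = "negative"
    else:
        label = "neutral"
    return {"score": score, "label": label}


# Hypothetical customer reviews.
reviews = [
    "Great support and fast delivery, love it!",
    "Package arrived late and the app is confusing.",
    "Order received.",
]
results = [sentiment(review) for review in reviews]
```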

Explainability, transparency, and algorithmic accountability

As AI-driven systems become more integrated into data management workflows, ensuring transparency and accountability in AI decision-making becomes a significant concern. AI systems often operate as "black boxes," where the internal decision-making processes are not easily understood by humans.

This lack of transparency raises issues around explainability, which is especially critical in sectors like healthcare, finance, and legal industries, where decisions made by AI systems can have significant consequences.

Businesses may find it challenging to trust AI systems when the reasoning behind decisions is not clearly explainable. This lack of clarity can be problematic for stakeholders who need to ensure AI decisions align with business goals, compliance regulations, and ethical standards.

For example, if an AI model used for fraud detection flags a legitimate transaction as suspicious, the business must understand why the model made that decision to ensure it’s accurate and fair. Without explainability, organizations could face regulatory scrutiny or even legal challenges.

To address these concerns, businesses need to adopt explainable AI (XAI) methodologies. These approaches make AI decisions more transparent by providing human-understandable explanations for how and why a model arrived at a particular conclusion.

Model-agnostic methods, such as LIME (Local Interpretable Model-agnostic Explanations) and SHAP (SHapley Additive exPlanations), can be used to explain the predictions of any AI model in a way that’s understandable to non-technical stakeholders.
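SHAP builds on Shapley values, which split the difference between a model's output for one instance and its output for a baseline across the input features. The Python sketch below computes exact Shapley values by enumerating every feature coalition, which is only feasible for a handful of features; it does not use the SHAP library, and the scoring function, feature names, and baseline are hypothetical stand-ins for a trained fraud model.

```python
from itertools import combinations
from math import factorial


def shapley_values(model, instance, baseline):
    """Exact Shapley values; features outside a coalition take their baseline value."""
    names = list(instance)
    n = len(names)

    def value(coalition):
        x = {f: (instance[f] if f in coalition else baseline[f]) for f in names}
        return model(x)

    phi = {}
    for f in names:
        others = [g for g in names if g != f]
        total = 0.0
        for k in range(n):
            # Weight of coalitions of size k in the Shapley formula.
            weight = factorial(k) * factorial(n - k - 1) / factorial(n)
            for subset in combinations(others, k):
                s = set(subset)
                total += weight * (value(s | {f}) - value(s))
        phi[f] = total
    return phi


def fraud_score(x):
    """Hypothetical scoring function standing in for a trained model."""
    score = 0.002 * x["amount"] + 0.3 * x["foreign"]
    if x["foreign"] and x["night"]:
        score += 0.2  # interaction: foreign transactions made at night
    return score


instance = {"amount": 250, "foreign": 1, "night": 1}
baseline = {"amount": 50, "foreign": 0, "night": 0}
phi = shapley_values(fraud_score, instance, baseline)
```

The contributions in `phi` add up to the gap between the instance's score and the baseline's score, so a reviewer can see how much of a flag is attributable to the amount, the foreign location, or the time of day.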

Furthermore, organizations should ensure that AI models are subject to ethical review processes and that accountability frameworks are in place to monitor model behavior over time. This might include continuous auditing, where the decisions made by AI systems are regularly reviewed for fairness and compliance.

By establishing robust auditing and reporting mechanisms, businesses can ensure that AI models operate with integrity and transparency.

Sensitive data classification & access control limitations

Sensitive data classification is a critical aspect of data management, particularly for industries that handle confidential or personal data, such as finance, healthcare, and law.

While AI systems can help automate the classification of sensitive data, there are inherent risks involved in ensuring that sensitive information is properly categorized and protected from unauthorized access. Misclassifying sensitive data could lead to significant compliance risks and legal ramifications.

AI models can sometimes misclassify sensitive data, especially when dealing with large, complex datasets that require a nuanced understanding.

For instance, an AI system might incorrectly classify a customer’s personal information as non-sensitive, allowing it to be accessed by employees or systems without appropriate authorization. This could lead to breaches of privacy, violating regulatory frameworks like GDPR or HIPAA.

To mitigate these risks, businesses must implement layered access controls and robust data validation processes alongside AI models. AI systems should be designed with built-in checks to validate the classification of sensitive data, flagging potential misclassifications before they result in access violations.

Human oversight is also crucial, ensuring that AI decisions are regularly reviewed by data privacy experts who can confirm that sensitive data is properly classified and secured.

Additionally, AI tools should integrate with role-based access control (RBAC) systems to ensure that only authorized personnel can access sensitive data. Implementing data encryption and data masking techniques is also essential to ensure that sensitive data remains protected throughout its lifecycle.
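Two of these safeguards, built-in validation of classifications and data masking, can be sketched in a few lines of Python. The example assumes the classifier returns a label with a confidence score, and the 0.90 review threshold is an arbitrary illustrative value. Low-confidence "non-sensitive" predictions are treated as sensitive until a human has reviewed them, so a misclassification fails safe.

```python
REVIEW_THRESHOLD = 0.90  # assumed cutoff; tune against audited samples


def route(prediction):
    """Treat low-confidence 'non_sensitive' calls as sensitive pending human review."""
    label, confidence = prediction["label"], prediction["confidence"]
    if label == "non_sensitive" and confidence < REVIEW_THRESHOLD:
        return {"effective_label": "sensitive", "needs_review": True}
    return {"effective_label": label, "needs_review": False}


def mask(value, visible=4):
    """Mask all but the last `visible` characters of a string."""
    if len(value) <= visible:
        return "*" * len(value)
    return "*" * (len(value) - visible) + value[-visible:]


# Hypothetical classifier outputs and a test card number.
uncertain = route({"label": "non_sensitive", "confidence": 0.62})
confident = route({"label": "non_sensitive", "confidence": 0.97})
masked_card = mask("4111111111111111")
```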

With careful planning, a focus on transparency, and a commitment to ethical data handling, businesses can leverage AI to enhance their data management strategies and drive meaningful business outcomes.

Conclusion

With legacy systems and manual processes, traditional data management often leads to:

- Fragmented data

- Slow reporting, and

- Missed opportunities.

The lack of integration across systems creates inefficiencies and makes it difficult to extract actionable insights in real time.

- How many critical decisions are delayed because your data is scattered across different departments?

- Is your organization spending more time managing data than using it for strategic advantage?

- How much data is going underutilized because it’s too difficult to access or analyze?

According to 2023 Forrester research on data and analytics, fewer than 10% of organizations are fully “advanced” in their insights-driven capabilities.

This highlights the fact that most organizations are still struggling to realize the full potential of their data.

This is where AI data management comes in. AI enables organizations to automate data workflows, improve accuracy, and create a unified view of all their data. With AI, businesses can break free from the limitations of traditional data management and start making faster, more informed decisions.

Ready to break down data silos and streamline your data management?

OvalEdge offers an AI-powered, intuitive platform that simplifies data governance, accelerates deployment, and ensures fast adoption across your organization.

Book a demo and see how OvalEdge can transform your data management strategy!

FAQs

1. What is the difference between AI data management and traditional data management?

AI data management uses machine learning and automation to optimize, secure, and scale data processes. In contrast, traditional data management often relies on manual methods for cleaning, integrating, and analyzing data, which can be slower and less efficient.

2. What are the types of AI data management?

AI data management includes predictive analytics, data integration, data governance, and anomaly detection. Each type focuses on automating and optimizing specific aspects of data workflows, ensuring real-time processing, high data quality, and compliance with regulations.

3. What are the key pillars of AI data management?

The main pillars of AI data management are automation, scalability, data quality, governance, and integration. These pillars enable businesses to streamline data processes, improve decision-making, and ensure secure, compliant data management across platforms.

4. What is the role of cloud-based AI in data management?

Cloud-based AI platforms enable scalable, cost-effective data management by offering flexibility and on-demand resources. These platforms allow businesses to store, process, and analyze large datasets without the constraints of on-premise infrastructure, ensuring that AI models can scale as data volumes grow.

5. How can AI help with data integration across systems?

AI simplifies data integration by automating the process of mapping and merging data from different sources, including on-premise systems, cloud storage, and third-party platforms. This allows businesses to create a unified data environment, making it easier to access and analyze comprehensive data.

6. How do AI models predict data trends in data management?

AI models predict data trends by analyzing historical data and identifying patterns and correlations. Machine learning algorithms then use these insights to forecast future trends, enabling businesses to proactively adjust their strategies and operations based on data-driven predictions.
