Table of Contents

Data Hub vs Data Warehouse: Roles and Use Cases

A data hub aligns operational systems through real-time standardization, while a data warehouse transforms that aligned data into curated historical insight. Their coordinated flow supports consistent operations, dependable analytics, and a complete view of how the business evolves. When governed together, the hub and warehouse form a connected architecture that strengthens decisions across both operational and analytical workflows.

Data is growing faster, flowing through more systems, and changing shape far more quickly than traditional architectures were designed to handle. What once felt like a straightforward decision about where to store information has become a landscape of specialized platforms, each addressing different points in the data lifecycle.

As organizations scale, the number of technologies involved multiplies. New systems promise speed or flexibility, legacy systems hold critical records, and integration patterns grow increasingly complex.

The result is an ecosystem where data moves constantly, but not always consistently.

Teams now navigate a mix of architectures with overlapping responsibilities. Data hubs focus on real-time alignment across operational systems.

Data warehouses curate historical information for analytics. Data lakes collect raw, large-scale data for future use. Lakehouses attempt to unify analytical and operational needs. These options expand what is possible, yet they also make it harder to determine how each layer should work together.

This leads to the central question: which architectural approach fits the way your organization operates and analyzes data? The answer rarely comes from choosing one system over another.

Instead, it comes from understanding how these components interact, where they differ, and how they support one another across the data lifecycle.

This blog examines the roles of data hubs and data warehouses, how data flows between them, how they depend on shared governance and standardization, and why both have become essential in modern, interconnected architectures.

What is a data hub?

A data hub provides a centralized way to integrate, standardize, and distribute operational data across systems. It captures updates in real time, harmonizes shared entities, and ensures that applications consume consistent and reliable information.

A data hub improves interoperability across the ecosystem by managing ingestion, transformation, governance, and distribution as coordinated services that keep operational data aligned.

A data hub provides a centralized way to integrate, standardize, and distribute operational data across systems. It connects diverse applications, captures updates in real time, and ensures that shared entities remain consistent as data moves across the ecosystem. A data hub improves interoperability by managing ingestion, transformation, governance, and distribution as coordinated services that keep operational data aligned.

It also functions as an integration and distribution layer that supports controlled data sharing between applications. Some data hubs include master data management capabilities to harmonize core entities, while others focus on real-time integration, event distribution, or metadata coordination, depending on an organization’s operational needs.

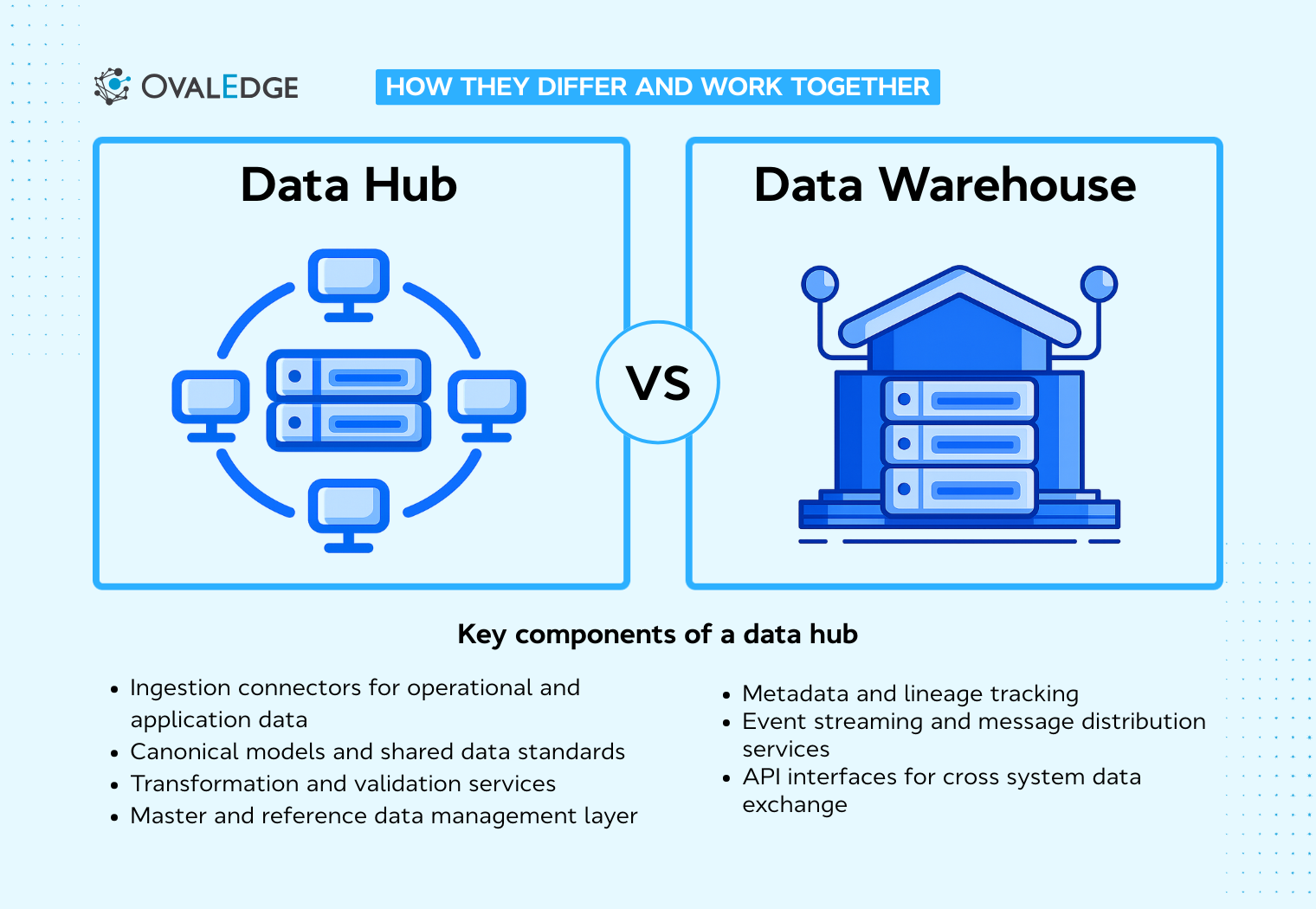

Key components of a data hub

A data hub functions as the coordination layer that keeps operational systems aligned, but its effectiveness depends on the architectural components that manage ingestion, standardization, governance, and distribution.

These components work together to ensure that data entering the ecosystem is consistent, trusted, and ready for real-time use across applications.

1. Ingestion connectors for operational and application data

A data hub depends on a wide range of ingestion connectors that pull data from transactional applications, APIs, event streams, and partner systems. This variety is important because enterprise environments rarely operate on a single data technology.

When customer interactions are happening across CRM applications, e-commerce platforms, support systems, and mobile apps, the hub must stay aligned with all of them in near real time.

The real value of ingestion connectors is not the connection itself but the ability to maintain synchronized operational states. When orders are updated, customer profiles change, or products are modified, the hub captures those updates as close to the source as possible so downstream processes do not rely on stale data.

Operational consistency across systems is one of the core enablers of automation and process reliability, and ingestion connectors are the entry point that makes this consistency possible.

This capability becomes especially important in organizations moving toward event-driven architectures, where applications need immediate awareness of changes happening across the ecosystem.

According to a 2025 Gartner Report on Data Integration Maturity Model, metadata activation is essential to achieving advanced integration maturity, which reinforces how connectors, combined with lineage and context, support coordinated data flow across systems.

Without connectors that support continuous synchronization, operational systems drift apart, leading to duplicate records, mismatched attributes, and an overall breakdown in data trust.

2. Canonical models and shared data standards

Canonical models play an important role in data hubs that manage shared business entities, particularly in MDM-style or standardization-driven implementations.

They define how the organization represents core entities such as customers, products, suppliers, or locations, creating a unified structure and vocabulary for systems that rely on common data.

When a hub operates in this mode, canonical standards reduce integration complexity and support consistent validation, enrichment, and operational workflows.

Not all data hubs use canonical models in the same way. Integration hubs, streaming hubs, distribution hubs, and metadata hubs often focus on fast movement and controlled sharing rather than enforcing a single enterprise model.

In these architectures, the hub acts as a coordination layer instead of a modeling authority.

When canonical standards are applied, they also strengthen downstream analytics. Standardized data entering a warehouse requires less reconciliation and produces more reliable insights.

However, it is important to distinguish that canonical modeling is an optional capability, shaped by the type of hub an organization implements, not a mandatory requirement for all hub architectures.

3. Transformation and validation services

Transformation and validation services are central to how a data hub improves operational consistency.

As data enters the hub from applications, APIs, and event streams, it passes through a set of rules that detect formatting issues, missing attributes, conflicting identifiers, and structural irregularities.

These checks matter because operational systems often capture data at different levels of completeness or use inconsistent naming conventions. Without a mechanism to correct these discrepancies, every downstream system inherits the problems and propagates them further.

Transformation and validation services also prepare data for both operational and analytical use. While the primary role of the hub is operational integration, validated data reduces the burden on data pipelines that feed the warehouse.

Early stage validation significantly decreases rework and reconciliation in analytical environments. This means fewer corrections later and a smoother handoff to systems that depend on accurate historical records.

4. Master and reference data management layer

When customer, product, or supplier information appears in multiple systems, it often carries conflicting details. A CRM might store a customer under one spelling, a billing system uses another, and a support platform captures yet another variation.

The hub’s MDM layer harmonizes these differences by identifying duplicates, reconciling variances, and establishing a single recognized form that becomes the operational source of truth.

This consolidation is especially valuable in large organizations where processes span multiple applications.

Effective management of master data reduces time spent on operational problem-solving and improves the reliability of business processes.

By creating a unified view of key entities, the hub reduces confusion across teams, supports consistent customer experiences, and strengthens downstream analytics.

Reference data such as currencies, product categories, or location codes also benefit from central management. When each system uses the same controlled vocabulary, integrations become less fragile, and governance teams gain more effective oversight.

5. Metadata and lineage tracking

Metadata and lineage tracking give organizations the ability to see where data originated, how it has been transformed, and which systems depend on it. This visibility becomes essential in environments where dozens of applications exchange data every day.

When teams cannot trace the path of a record, even small inconsistencies can turn into major operational issues.

Data lineage is a cornerstone of governance because it allows teams to identify the root cause of data quality problems.

If an order record arrives in the wrong format or a customer attribute has unexpected values, lineage helps pinpoint whether the issue came from a source system, a transformation step, or an integration rule.

Without this transparency, troubleshooting becomes slow and reactive, and errors continue to propagate across the ecosystem.

This is where purpose-built lineage tooling becomes a real advantage. Platforms like OvalEdge automate lineage creation down to the column level, mapping data flow across SQL, BI, ETL, and streaming systems.

This level of detail helps data teams assess the impact of changes before they occur, gives business users clarity into how data is used, and supports compliance teams with traceability back to the point of origin.

Metadata in the hub also plays a crucial role in supporting the warehouse. When analytical teams know which operational systems feed a dataset and how the structure changes over time, they can design more reliable pipelines and avoid misinterpretation.

6. Event streaming and message distribution services

Event streaming capabilities allow the data hub to distribute near-real-time updates across the organization.

When important changes occur in operational systems, such as a new order being placed or a change to a customer profile, the hub can immediately notify the systems that depend on that information.

This pattern reduces the lag that often occurs with batch-based integrations and supports faster operational decision-making.

Event-driven integration is especially valuable in industries where customer experiences rely on immediate alignment between systems.

A change recorded in a customer service application should be visible to fulfillment systems and marketing platforms without delay. Without event streaming, teams often struggle with duplicated records, outdated information, and inconsistent customer interactions.

7. API interfaces for cross-system data exchange

API interfaces are a fundamental part of the hub because they provide controlled access to standardized data across operational systems. APIs allow applications to retrieve or submit data consistently, without relying on point-to-point integrations that are difficult to maintain.

By exposing centralized access points, the hub helps enforce governance policies and ensures that only validated information is shared.

Not every architecture requires a formal data hub. Many organizations load operational data directly into a warehouse, especially when synchronization demands are low.

A hub becomes valuable when multiple operational systems must stay aligned, when master data needs consistent standardization, or when real-time distribution is essential to downstream workflows.

When systems exchange data through the hub’s APIs, it becomes easier to monitor usage, maintain data quality, and prevent unauthorized access.

APIs also enable scalable integration because new applications can connect without refactoring existing pipelines. With dependable API delivery, operational applications behave more predictably, and analytical environments receive cleaner, more consistent inputs.

What is a data warehouse?

A data warehouse stores curated historical data that supports reliable reporting, trend analysis, and analytics. It organizes information from multiple systems into structured models that present consistent metrics and meaningful insights.

A data warehouse preserves changes over time, unifies definitions, and delivers a stable foundation for dashboards, forecasting, and decision making. It serves as the analytical layer that transforms raw data into trusted business intelligence.

Key components of a data warehouse

A data warehouse delivers value by organizing large volumes of historical information into structures that support reliable reporting and analytics.

To do this effectively, it relies on a set of architectural components that manage ingestion, transformation, storage, and access. These elements work together to turn raw operational data into curated, trustworthy datasets that analysts and decision makers can depend on.

1. Data ingestion pipelines for structured and semi-structured sources

Data ingestion pipelines serve as the entry point for everything the warehouse analyzes. These pipelines pull information from internal applications, cloud services, partner systems, and operational platforms.

Since organizations often store data in different formats or capture it at different stages of completeness, ingestion is not only about transferring records but also about interpreting them correctly.

A common challenge is that source systems rarely speak the same language. Customer data may follow one schema in a CRM and another in a billing system. Semi-structured sources like logs or JSON payloads introduce even more variability.

High-quality pipelines must parse, normalize, and map these differences into a format the warehouse can use. This foundational step is why many analysts point to ingestion as a critical part of data warehouse reliability.

Bad ingestion routines lead to downstream reporting errors, and those errors can take significant effort to trace back without proper structure.

2. Staging and transformation layers

Staging layers act as the buffer zone between raw incoming data and curated analytical datasets. When data lands in staging, it has not yet been shaped or validated for analysis.

This separation gives teams a safer environment to inspect, clean, and correct data without affecting production workloads.

This step is essential because many operational systems are not designed with analytical quality in mind. Missing values, inconsistent codes, or outdated classifications appear frequently. If these issues flow directly into analytical tables, dashboards become unreliable.

A staging layer protects the warehouse from this risk by acting as a controlled environment where data engineers can standardize structures, reconcile inconsistencies, and apply business logic.

Transformation layers then take this structured but raw data and align it with the analytical requirements of the organization. This often includes joining related datasets, deriving new fields, and converting operational records into formats optimized for trend analysis.

3. Dimensional models and analytical schemas

Dimensional models and analytical schemas organize data into facts and dimensions so analysts can explore business questions without navigating complex transactional structures.

This design is one of the reasons warehouses excel at reporting and trend analysis. Transactional systems record events as they happen, but these systems are not optimized for understanding patterns over time.

Dimensional models reshape that raw data into formats that directly support analytical thinking.

Operational systems often capture each transaction in detail, but analysts need aggregated views, slicable dimensions, and consistent definitions for concepts like revenue, order value, or customer segments.

Dimensional modeling provides this structure by organizing data around business entities.

4. Centralized storage for curated historical data

Centralized storage in a data warehouse gives organizations the ability to analyze trends, measure performance over time, and compare outcomes across periods. Operating systems usually overwrite or modify records as processes unfold.

Customer addresses change, order statuses update, and inventory counts fluctuate. The warehouse preserves these changes as historical snapshots instead of replacing them. This approach creates a long-term view of business activity that operational systems are not designed to support.

Having curated historical data is essential for forecasting, anomaly detection, and long-range strategic planning.

Predictive analytics relies heavily on clean historical datasets. Without a structured archive of past events, organizations struggle to build accurate models or understand why certain patterns emerge.

Centralized storage also reduces fragmentation across teams. When marketing, finance, operations, and product teams each build their own datasets, inconsistencies quickly appear.

The warehouse eliminates this duplication by serving as a single location for curated historical information.

Analysts no longer need to reconcile conflicting versions of the same metric, and decision makers gain confidence that reports originate from a trusted source.

5. Metadata, cataloging, and lineage management

Metadata, cataloging, and lineage capabilities give the warehouse structure, traceability, and meaning.

Analysts depend on these features because data in a warehouse often comes from dozens of operational sources, each shaped through multiple transformations.

Without clear metadata, teams waste time trying to understand what a field represents, how it was calculated, or whether it is trustworthy.

Cataloging solves that problem by giving users a central place to search for datasets, examine definitions, and review usage guidelines. This eliminates guesswork and reduces reliance on tribal knowledge, which is a common bottleneck in large organizations.

Tools that unify metadata from every source and enrich it with context make this even more effective. Platforms like OvalEdge provide a connected catalog that captures active and extended metadata, automates classification, and organizes business definitions so users can interpret data quickly and confidently.

Lineage adds another layer of visibility by showing how data moves from ingestion to final analytical tables. It reveals which upstream systems contribute to a dataset, what rules were applied during transformation, and how often the data is refreshed.

6. Data quality and validation frameworks

Data quality frameworks ensure that the warehouse delivers accurate and complete information to business users. Warehouses handle curated historical data, and small defects in upstream pipelines can grow into significant errors once aggregated over time.

Validation frameworks catch issues early by applying rules that check for missing attributes, invalid structures, inconsistent values, and outlier records that could distort analysis.

Analytical environments suffer when data quality is treated as an afterthought.

|

For example, if product categories are mislabeled in one system and those inconsistencies flow into the warehouse, performance metrics become unreliable. |

These errors can lead to flawed decisions or costly rework as teams attempt to reconcile discrepancies manually.

According to Forrester’s Data Culture and Literacy Survey, 2023, one-quarter of global data and analytics employees who cite poor data quality as a barrier to data literacy estimate losses exceeding $5 million annually, with 7% reporting losses above $25 million.

Data quality practices directly influence the success of analytics initiatives because decision makers rely on the stability of underlying metrics.

7. Semantic layer for consistent metrics and definitions

The semantic layer ensures that every dashboard and analytical tool interprets metrics in the same way. Without a semantic layer, different teams may calculate key indicators such as revenue, churn rate, or customer lifetime value using their own formulas.

This fragmentation leads to conflicting reports and misaligned decision-making. A semantic layer standardizes definitions across the organization and presents a unified logic layer that tools can connect to without reinventing calculations.

This component is frequently emphasized in enterprise analytics because it solves one of the most persistent pain points in reporting: metric inconsistency.

When a company’s finance team and marketing team present different values for the same metric, leadership loses confidence in the analytics program. A shared semantic layer eliminates these discrepancies by enforcing common business rules.

It also strengthens the relationship between the warehouse and the hub. The warehouse translates operational data into analytical structures, and the semantic layer ensures that analysts interpret those structures uniformly.

8. Query processing and performance optimization engines

Query processing engines are the engines that make a data warehouse capable of handling analytical workloads at scale.

Warehouses must support complex aggregations across millions of records, join data from multiple subject areas, and return results quickly enough for analysts to explore insights without interruptions.

This requirement becomes more pressing as organizations accumulate larger historical datasets and expand the number of users relying on dashboards and analytical applications.

Performance optimization techniques such as columnar storage, query pruning, compression, and distributed processing help warehouses maintain responsiveness even as data volumes grow.

These capabilities distinguish a warehouse from an operational data hub. The hub focuses on real-time distribution of standardized data, while the warehouse is built to answer questions that require scanning or aggregating historical records.

Modern cloud warehouses also adapt their compute resources dynamically. This flexibility addresses a common pain point in traditional systems where heavy analytical queries could slow down performance for everyone.

9. Data marts for subject-specific analytics

Data marts provide curated subsets of the warehouse organized around the needs of specific business teams.

They allow finance, sales, operations, supply chain, and marketing groups to access data structured around their roles without navigating the full complexity of enterprise-wide models.

This separation improves performance and usability because each domain receives only the datasets and metrics most relevant to its work.

A well-designed data mart reflects the processes and vocabulary of the business unit it supports.

Data marts also help organizations maintain governance. Instead of each team building its own shadow datasets, the warehouse provides controlled access to standardized, vetted information.

This structure supports a consistent analytical foundation across the company and prevents conflicting reports from different departments.

10. Integration points for BI and reporting tools

Business intelligence tools, machine learning platforms, and visualization applications rely on the warehouse as their central analytical source. Analysts and data scientists use these tools to explore trends, evaluate performance, and model future scenarios.

Without reliable integration points, teams would be forced to pull data manually or build fragile connections to operational systems, which introduces risk and inconsistency.

The warehouse simplifies this by offering stable interfaces for BI platforms such as Tableau, Power BI, and Looker. These tools expect curated, structured data that reflects business logic, something operational systems are not designed to provide.

By connecting directly to the warehouse, reporting tools benefit from consistent refresh schedules, well-defined schemas, and standardized metrics.

Integration points also extend to advanced analytics. Machine learning workloads often require large volumes of historical data to identify patterns. Warehouses store this data in a format suitable for model training and experimentation.

Differences between a data hub and a data warehouse

A data hub and a data warehouse often appear similar at a distance because both manage and distribute data across the organization, but the roles they play in a modern architecture are fundamentally different.

One supports real-time operational processes, while the other enables structured analytical insight. Recognizing how their responsibilities diverge helps clarify why organizations need both layers and how each contributes to a reliable, scalable data ecosystem.

|

Aspect |

Data Hub |

Data Warehouse |

|

Primary Purpose |

Synchronizes and standardizes operational data across systems |

Stores curated historical data for analytics and reporting |

|

Core Function |

Real-time integration and distribution |

Long-term analysis and metric generation |

|

Data Flow |

Many-to-many operational data exchange |

One-way ingestion into analytical models |

|

Data Type Focus |

Operational, current-state data |

Historical, time-variant data |

|

Latency |

Near real-time |

Supports batch, micro-batch, or real-time ingestion depending on architecture |

|

Modeling Approach |

Flexible canonical models |

Structured dimensional schemas |

|

Governance Role |

Enforces standardization and entity harmonization upstream |

Ensures analytical consistency and metric reliability downstream |

|

Data Quality Focus |

Cleansing, standardizing, mastering entities |

Validating, modeling, and ensuring analytical accuracy |

|

Integration Pattern |

Event-driven, API-based, message distribution |

ETL and ELT pipelines into analytical layers |

|

Interaction with Systems |

Connects operational applications |

Connects BI tools, dashboards, and ML platforms |

|

Scalability Focus |

Scaling distribution and operational workloads |

Scaling analytical queries and storage |

|

Storage Characteristics |

Stores operations/master data needed for synchronization but does not serve as the primary repository |

Centralized store for years of curated data |

|

User Personas |

Application owners, integration teams, operations |

Analysts, data scientists, BI teams |

|

Typical Outputs |

Cleaned and standardized operational entities |

Reports, KPIs, dashboards, predictive features |

|

Dependency Relationship |

Supplies standardized data to the warehouse |

Produces insights that can be fed back into the hub |

|

Strengths |

Data consistency across systems, real-time updates |

Deep insights, historical trends, unified analytics |

|

Limitations |

Not suited for complex analytics |

Not suited for real-time operational distribution |

|

Best For |

Operational alignment, mastering, and cross-system synchronization |

Enterprise reporting, forecasting, and strategic analysis |

How data flows between operational systems, data hubs, and data warehouses

Understanding how data moves across operational, integration, and analytical layers clarifies why modern architectures rely on both a data hub and a data warehouse.

Each system handles a different part of the lifecycle, and the quality of downstream insights depends on how well these layers coordinate.

Forward data flow

In most organizations, data travels through a predictable sequence:

Operational systems → Data hub → Data warehouse → BI and analytics tools

This sequence reflects the functional separation of operational consistency and analytical reliability.

Operational systems such as CRM platforms, billing engines, e-commerce sites, ERP modules, and support applications generate real-time business events. These systems are optimized for transactions, not for sharing standardized information.

As a result, similar entities often appear with different structures, identifiers, or levels of completeness across applications.

The data hub acts as the operational alignment layer. It ingests updates from each source, applies validation rules, resolves duplicates, harmonizes entity formats, and distributes consistent records to connected systems.

This prevents downstream platforms from inheriting conflicting or incomplete values and reduces the integration overhead that arises when applications communicate directly.

The data warehouse receives this standardized flow and transforms it into curated historical models. It organizes data into analytical schemas, preserves old and new values for time-based analysis, and produces datasets that support reporting, forecasting, and advanced analytics.

This shift from current-state operational data to time-variant analytical structures is what enables long-term insights.

BI tools and analytical platforms rely on the warehouse because it delivers stable, governed, and consistent data that reflects shared business definitions. These tools expect structured relationships, predictable refresh cycles, and coherent logic that transactional systems cannot provide.

Feedback loop from analytics to operations

In mature architectures, insights do not remain confined to analytics teams. They return to operational systems through the hub.

Data warehouse → Data hub → Operational systems

The warehouse generates intelligence such as customer segments, demand projections, lead scores, fraud indicators, or operational KPIs. These outputs become valuable only when reintroduced into frontline applications where decisions occur.

The hub enables this by distributing enriched data back to CRM systems, marketing automation tools, support platforms, and fulfillment applications. Its governance rules ensure the right systems receive the right insights in a consistent and controlled format. This closes the loop between analysis and action.

Why this architecture works

The layered flow solves structural problems that arise when organizations treat operational and analytical systems as isolated environments.

Operational systems stay synchronized because the hub maintains a consistent, real-time representation of shared business entities.

Analytical environments receive standardized inputs, reducing rework and eliminating the inconsistencies that lead to conflicting KPIs.

Historical reporting remains accurate because the warehouse preserves changes rather than overwriting them, giving analysts a reliable basis for trend analysis and forecasting.

Operational decisions improve because insights generated in the warehouse re-enter the ecosystem and guide processes across marketing, sales, finance, logistics, and support.

The hub maintains operational consistency. The warehouse maintains analytical consistency.

Together, they create a closed-loop lifecycle where real-time operations feed analytics, and analytics enhance real-time operations. This interdependence is why modern data architectures increasingly rely on both systems rather than choosing one over the other.

How data hubs and data warehouses depend on each other

A data hub and a data warehouse serve different purposes, yet neither operates effectively in isolation. The hub maintains operational consistency, while the warehouse delivers long-term analytical insight, and their value grows when they reinforce one another.

1. Shared governance and unified data definitions

Shared governance and unified data definitions are essential because operational and analytical systems cannot function independently of each other.

When a customer, product, or order is defined differently across the hub and the warehouse, every downstream process becomes vulnerable to inconsistency.

Operational applications may be using one version of a customer profile while analytical teams evaluate performance based on a completely different structure or naming convention.

This disconnect affects reporting accuracy, billing processes, and even customer experience.

The hub and warehouse succeed only when governance spans both layers. A unified definition ensures that data entering the hub is standardized before distribution and that the warehouse receives inputs aligned with the same business meaning.

This upstream consistency reduces the amount of corrective work analysts must perform before building reports or models.

2. Coordinated metadata, lineage, and documentation

Coordinating metadata and lineage across the hub and warehouse creates a continuous view of how data enters, moves, transforms, and is ultimately consumed in reports or analytical applications. Without this shared visibility, teams struggle to track errors back to their source.

A data quality issue discovered in the warehouse may originate in an upstream application or during a hub-level standardization process, but without unified lineage, identifying the cause becomes slow and frustrating.

By extending governance frameworks across both systems, organizations gain clarity into every transformation step. Analysts can trace a field’s history from ingestion in the hub to its curated form in the warehouse.

Engineers can identify which integration pipelines or transformation jobs need attention when anomalies appear.

Metadata also provides context that reduces risk during schema changes. Operational systems frequently evolve, and when metadata about those changes is shared across the hub and warehouse, downstream analytics do not break unexpectedly.

Coordinated documentation ensures that every team understands where datasets came from, what rules were applied, and how reliable the final outputs are.

This alignment is one of the clearest areas where the hub and warehouse depend on each other. The hub ensures data enters the ecosystem in a standardized and transparent way.

The warehouse relies on that same metadata and lineage to produce trustworthy historical analysis. When both systems operate with coordinated governance, data flows become predictable, and analytics deliver higher confidence.

3. Upstream standardization that supports analytical accuracy

Upstream standardization in the data hub sets the foundation for reliable analytics in the warehouse.

When operational systems feed inconsistent, incomplete, or structurally different records into the architecture, the warehouse inherits those issues unless they are corrected earlier in the flow.

The hub solves this by applying validation rules, harmonizing entity formats, and resolving discrepancies before the data ever reaches analytical pipelines.

This early intervention matters because analytical processes amplify small errors. A missing customer attribute in an upstream system might seem minor, but once aggregated into performance dashboards, machine learning models, or financial reports, it can distort metrics across the entire organization.

Upstream standardization is one of the strongest predictors of downstream analytical reliability. When the hub enforces consistent formatting and shared definitions, the warehouse receives cleaner inputs, transformations become more predictable, and analysts spend less time correcting data manually.

Standardization is also essential for organizations with rapidly growing operational footprints. As new applications are introduced or legacy systems evolve, the hub absorbs changes without forcing the warehouse to reengineer its structures.

This separation of concerns keeps analytical environments stable even as operational landscapes shift.

4. Downstream enrichment that returns insights to operational systems

Downstream enrichment closes the loop between analytics and operations. After the warehouse generates insights such as customer segments, propensity scores, or predicted demand patterns, the hub distributes those insights back into operational systems where they can drive action.

This connection transforms analytics from a reporting function into an operational advantage.

|

For example, segmentation models generated in the warehouse have little value unless they reach marketing platforms or customer support tools. |

The hub makes this possible by providing a governed path for enriched data to flow back into applications that use it in real time.

This enrichment capability also highlights the difference between a data hub and a data warehouse.

The warehouse identifies trends by aggregating historical information. The hub ensures those insights become operationally useful by distributing them to the systems that act on them.

Without the hub, enriched insights remain confined to dashboards. With it, organizations can embed intelligence across business processes.

5. Quality and mastering dependencies across both layers

Quality and mastering dependencies illustrate how tightly connected the hub and warehouse must be to maintain accuracy across the entire data ecosystem.

The hub manages master and reference data by reconciling duplicates, standardizing attributes, and ensuring that key entities such as customers or products appear in consistent form across operational systems. This mastered data becomes the foundation of warehouse reporting.

However, the relationship is not one-way. Analytical processes in the warehouse often reveal deeper quality issues that are not visible in operational workflows.

|

For instance, when analysts compare historical performance across regions or customer groups, unexpected anomalies may indicate upstream inconsistencies that the hub needs to correct. |

These feedback loops help organizations continuously refine their governance practices.

Enterprises with strong master data practices see fewer discrepancies in reporting because both operational and analytical environments rely on the same trusted entities.

When the hub curates operational data and the warehouse exposes quality gaps, both layers reinforce a cycle of improvement that produces more accurate insights and more stable operational processes.

This interplay is one of the strongest arguments for viewing the hub and warehouse not as competing architectures but as complementary components of a unified data strategy.

6. Synchronization of change events and historical records

Synchronization between operational change events in the hub and the historical datasets in the warehouse is essential for maintaining an accurate record of how the business evolves over time.

The hub captures updates as they occur across operational systems. These events document the real-time state of the organization, whether it is a customer modifying contact details or a product moving through stages of fulfillment.

For the warehouse to build reliable historical timelines, it must receive these changes in a consistent, structured form. If the warehouse does not capture changes at the same pace or with the same granularity as the hub, historical records become incomplete or misleading.

This disconnect can affect everything from financial reporting to customer analytics.

|

For example, when operational updates are delayed or transformed inconsistently, analysts may see sudden spikes or drops that do not reflect actual business activity. |

Synchronized flow between hub and warehouse creates a continuous data lifecycle. The hub captures the present, and the warehouse preserves the past.

This coordination ensures that trend analysis, forecasting, and performance reporting are built on a stable and sequential record of events. Without this alignment, the warehouse risks losing the context that gives historical data meaning.

7. Consistent identifiers and reference data alignment

Consistent identifiers and aligned reference data are among the most important dependencies shared by the hub and warehouse. Both systems operate on representations of the same business entities.

If they do not use the same identifiers, the relationship between operational events and historical records breaks down. This leads to fragmented reporting, duplicate entities, inaccurate analytics, and operational systems that behave inconsistently.

|

For instance, if a customer is assigned different IDs across applications and those inconsistencies flow into the hub and warehouse, analysts cannot accurately match operational transactions with historical trends. |

Fragmented identifiers also create problems in downstream enrichment. Insights generated in the warehouse cannot be reliably pushed back to operational systems unless the hub recognizes the same entity.

Aligned reference data is equally important. Reference data includes classifications, categories, region codes, product families, and other controlled vocabularies.

When different systems use conflicting reference values, operational processes become unpredictable. Reporting also becomes unreliable because metrics break when categories are not applied uniformly.

Conclusion

Data hubs and data warehouses are not opposing strategies. They are complementary parts of a unified data architecture. A hub strengthens operational consistency, while a warehouse strengthens analytical clarity.

Their value grows when they work together rather than in isolation. Modern data ecosystems depend on this balance because no single system can satisfy both real-time operational needs and long-term analytical demands.

A hub shapes raw, fast-moving data into standardized, trusted inputs. A warehouse transforms that trusted data into curated insight. Each relies on the other to deliver complete and reliable intelligence across the organization.

When these components are aligned, teams gain accurate operational workflows, stronger reporting, and better decision-making at every level.

Key points that reinforce this connection:

-

Upstream standardization from the hub reduces rework and improves analytical accuracy in the warehouse.

-

Warehouse insights feed back into operational systems through the hub, strengthening real-time decisions.

-

Shared governance frameworks keep definitions, identifiers, and quality rules consistent across all environments.

This combined approach offers a clearer path forward for teams designing scalable, dependable, and future-ready data architectures.

As organizations work to align their hub and warehouse strategies, the next challenge is operationalizing this balance with reliable governance, clear metadata, and automated lineage.

Most teams struggle not because the architecture is flawed, but because they lack a unified platform that makes their data trustworthy, discoverable, and ready for both operational and analytical use.

This is where the right tooling becomes a multiplier for everything the architecture is designed to achieve.

If your team is building toward a modern, connected data ecosystem, OvalEdge can help you get there faster. Its AI-driven data catalog, automated lineage, quality workflows, and governance capabilities provide the foundation that hubs and warehouses depend on to stay consistent and scalable.

See how it works in real environments and explore what unified governance can unlock for your organization.

Book a demo with OvalEdge.

FAQs

1. Does a data hub reduce warehouse storage requirements?

Yes. The hub filters, standardizes, and resolves duplicates before data enters the warehouse. This decreases redundant storage and simplifies warehouse modeling.

2. How does a hub support warehouse enrichment workflows?

The hub distributes insights generated in the warehouse, such as churn scores or segmentation labels, back into operational systems. This enables closed-loop analytics and intelligence-driven operations.

3. What architectural risks occur when a warehouse bypasses the data hub?

Bypassing the hub introduces inconsistent definitions, duplicated entities, incompatible formats, and lineage gaps. This leads to unreliable analytics and misaligned operational behavior.

4. How do governance rules differ between a data hub and a data warehouse?

A data hub enforces operational standardization and entity harmonization. A data warehouse enforces metric consistency, analytical modeling rules, and controlled historical storage. Both require unified governance for accuracy across the ecosystem.

5. How does latency differ between a data hub and a data warehouse?

A data hub supports low-latency, near-real-time data movement across operational systems. A data warehouse operates on scheduled or batch ingestion optimized for analytical queries rather than immediate propagation.

6. Can a data hub improve data warehouse load times?

Yes. The hub standardizes and validates data upstream, reducing transformation work during warehouse loading. This decreases load failures and shortens ingestion windows.

Deep-dive whitepapers on modern data governance and agentic analytics

OvalEdge recognized as a leader in data governance solutions

.png?width=1081&height=173&name=Forrester%201%20(1).png)

“Reference customers have repeatedly mentioned the great customer service they receive along with the support for their custom requirements, facilitating time to value. OvalEdge fits well with organizations prioritizing business user empowerment within their data governance strategy.”

.png?width=1081&height=241&name=KC%20-%20Logo%201%20(1).png)

“Reference customers have repeatedly mentioned the great customer service they receive along with the support for their custom requirements, facilitating time to value. OvalEdge fits well with organizations prioritizing business user empowerment within their data governance strategy.”

Gartner, Magic Quadrant for Data and Analytics Governance Platforms, January 2025

Gartner does not endorse any vendor, product or service depicted in its research publications, and does not advise technology users to select only those vendors with the highest ratings or other designation. Gartner research publications consist of the opinions of Gartner’s research organization and should not be construed as statements of fact. Gartner disclaims all warranties, expressed or implied, with respect to this research, including any warranties of merchantability or fitness for a particular purpose.

GARTNER and MAGIC QUADRANT are registered trademarks of Gartner, Inc. and/or its affiliates in the U.S. and internationally and are used herein with permission. All rights reserved.